Robots might be able to take to the Martian skies in just a few years.

Sensors and robotics are two exponential technologies that will disrupt a multitude of billion-dollar industries.

This post (part 3 of 4) is a quick look at how three industries — transportation, agriculture, and healthcare/elder care — will change this decade.

Before I dive into each of these industries, it’s important to note that the explosion of sensors is what fundamentally enables much of what I describe below.

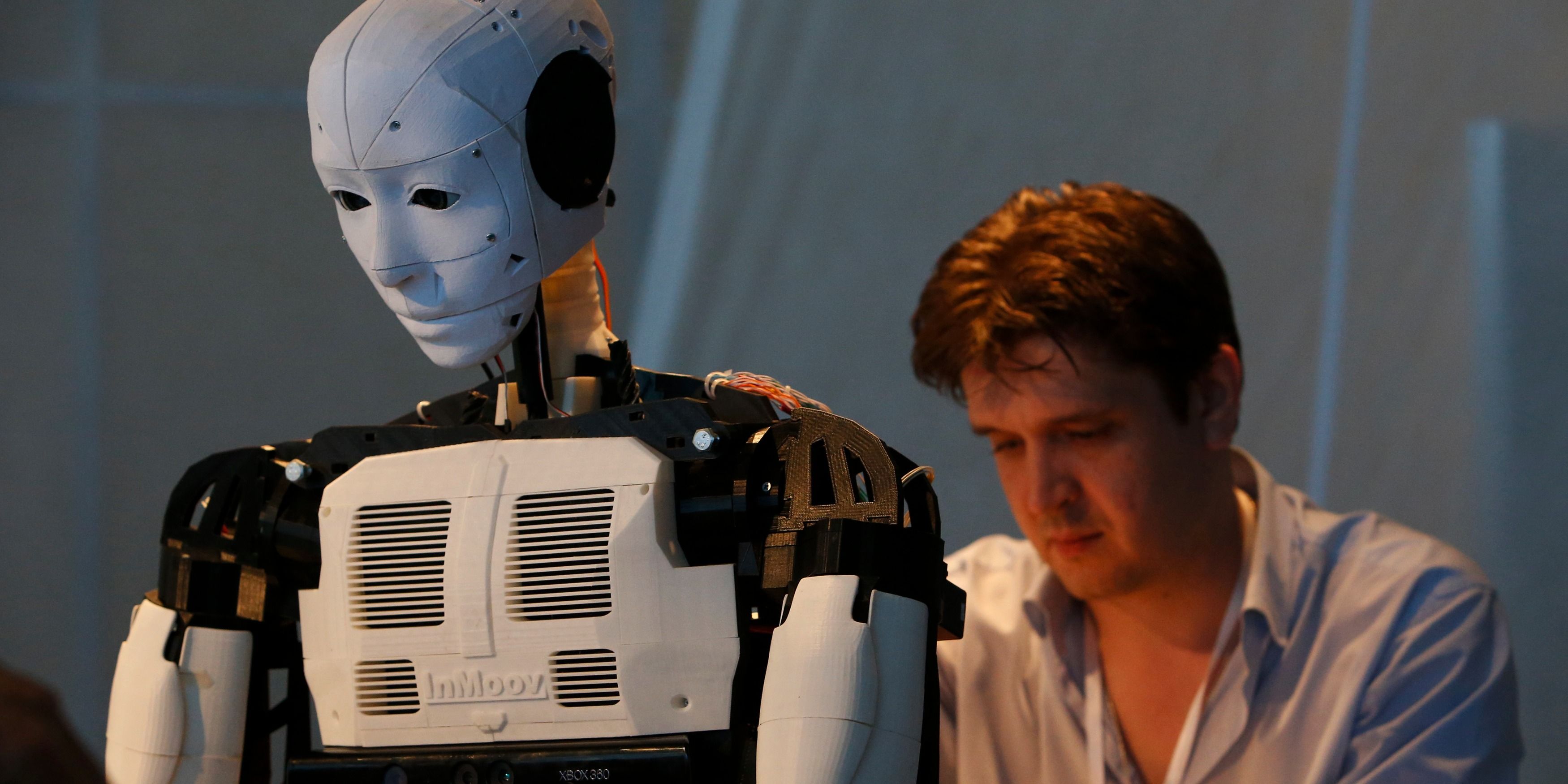

Because language is only part of human communication, IBM is using machine learning to teach robots social skills like gestures, eye movements, and voice intonations.

Artificial intelligence has come a long way. But as virtual digital assistants proliferate, they often need a non-digital assist.

Don’t get overly excited about computers and artificial intelligence replacing humans, at least not yet, says Andrew Ng, chief scientist at the Chinese search giant Baidu. Computers are still in the “supervised learning” stage, where human input is required to connect the dots.

By Steve Gorman LOS ANGELES (Reuters) — A brain-to-computer technology that can translate thoughts into leg movements has enabled a man paralyzed from the waist down by a spinal cord injury to become the first such patient to walk without the use of robotics, doctors in Southern California reported on Wednesday. The slow, halting first steps of the 28-year-old paraplegic were documented in a preliminary study published in the British-based Journal of NeuroEngineering and Rehabilitation, along with a YouTube video. The feat was accomplished using a system allowing the brain to bypass the injured spinal cord and instead send messages through a computer algorithm to electrodes placed around the patient’s knees to trigger controlled leg muscle movements.

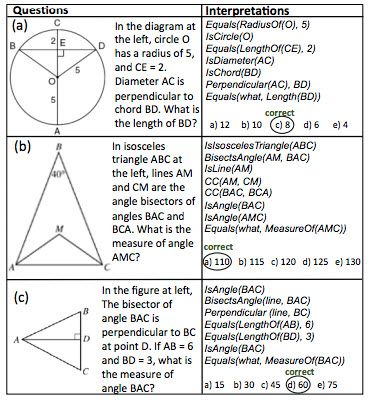

Examples of questions (left column) and interpretations (right column) derived by GEOS (credit: Minjoon Seo et al./Proceedings of EMNLP)

An AI system that can solve SAT geometry questions as well as the average American 11th-grade student has been developed by researchers at the Allen Institute for Artificial Intelligence (AI2) and University of Washington.

Have you hugged or told someone that you love them today? Maybe it wasn’t someone; maybe it was your smartphone, which you gave an extra squeeze or pat as you slipped it into your pocket. Humans have become increasingly invested in their devices, and a new era of emotional attachment to our devices and other AI seems to be upon us. But how does this play out on the other end: will, or could, AI ever respond to humans emotionally?

AI is broad, and clearly not all AI systems are meant to give and receive emotion. Humans seem prone to respond to features that resemble their own species, or to things they can relate to in some communicative way. Most “emotional” or responsive algorithm-based capabilities have been programmed into robots with a humanoid, or at least mammal-like, form.

Think androids in customer-service, entertainment, or companion-type roles. There are also robots like PARO, the robotic baby harp seal used for therapeutic interaction with people in assisted living and hospital environments.

In a 2003 paper published in the International Journal of Human-Computer Studies, Cynthia Breazeal cites a study by Reeves and Nass (1996) showing that humans (whether computer experts, lay people, or computer critics) generally treat computers as they might treat other people.

Breazeal goes on to state that humanoid robots (and animated software agents) are particularly relevant, as a similar morphology promotes an intuitive bond based on similar communication modes, such as facial expression, body posture, gesture, gaze direction, and voice.

This in and of itself may not be a complete revelation, but getting a robot to produce such emotional responses is far more complicated. When the Hanson Robotics team programs responses, a key objective is to build robots that are expressive and lifelike, so that people can interact with them and feel comfortable with the emotional responses they receive.

In the realm of emotions, there is a difference between robot ‘responses’ and robot ‘propensities’. Stephan Vladimir Bugaj, Creative Director at Hanson Robotics, separated the two during an interview with TechEmergence. “Propensities are much more interesting and are definitely more of the direction we’re going in the immediate long-term”, he says.

“An emotional model for a robot would be more along the lines of weighted sets of possible response spaces that the robot can go into based on a stimulus and choose a means of expression within that emotional space based on a bunch of factors.” In other words, a robot with propensities would consider a set of questions, such as “What do I think of the person? How did it act in the last minute? How am I feeling today?” This is how most humans reason, though it happens so habitually and quickly in the subconscious that we are hardly aware of the process.
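Bugaj’s “weighted sets of possible response spaces” can be made concrete with a small sketch. The Python below is purely illustrative and is not Hanson Robotics’ model: the three emotional spaces, their expressions, the `Context` fields (which mirror the three questions above), and the weighting formula are all hypothetical assumptions. A stimulus is first mapped to a weighted set of spaces, and an expression is then sampled from within the chosen space.

```python
import random
from dataclasses import dataclass

# Hypothetical emotional "spaces" and the expressions available inside each one.
EXPRESSIONS = {
    "playful": ["grin", "tell a joke", "laugh"],
    "wary": ["step back", "frown", "ask what is going on"],
    "calm": ["nod", "smile", "make small talk"],
}


@dataclass
class Context:
    """Hypothetical answers to the robot's internal questions, each in -1.0 .. 1.0."""
    opinion_of_person: float  # "What do I think of the person?"
    recent_behavior: float    # "How did it act in the last minute?"
    own_mood: float           # "How am I feeling today?"


def space_weights(stimulus_intensity: float, ctx: Context) -> dict[str, float]:
    """Map one stimulus to a normalized weight for each emotional space."""
    intensity = min(max(stimulus_intensity, 0.0), 1.0)
    # Average the three context answers into a single friendliness score.
    friendliness = (ctx.opinion_of_person + ctx.recent_behavior + ctx.own_mood) / 3
    raw = {
        "playful": max(0.0, friendliness) * intensity,
        "wary": max(0.0, -friendliness) * intensity,
        "calm": (1.0 - intensity) + 0.1,  # small floor keeps the total above zero
    }
    total = sum(raw.values())
    return {space: w / total for space, w in raw.items()}


def respond(stimulus_intensity: float, ctx: Context, rng: random.Random) -> tuple[str, str]:
    """Pick an emotional space by weight, then an expression within that space."""
    weights = space_weights(stimulus_intensity, ctx)
    space = rng.choices(list(weights), weights=list(weights.values()))[0]
    expression = rng.choice(EXPRESSIONS[space])
    return space, expression


rng = random.Random(42)
print(respond(0.8, Context(0.6, 0.9, 0.2), rng))
```

Sampling rather than always taking the top-weighted space is a deliberate choice here: the same stimulus in the same context can still yield varied expressions, which is closer to the “propensity” idea than a fixed stimulus-to-response table.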

The context of an immediate stimulus would provide an emotional frame, allowing a robot to respond to each stimulus in a more complex way. Short-term memory would help the robot build a longer-term emotional model. “You think of it as layers, you can think of it as interconnected networks of weighted responses…as collections of neurons, there’s a lot of different ways of looking at it, but it basically comes down to stages of filtering and considering stimuli, starting with the input filter at the perceptual level.”
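The “stages of filtering” idea, together with short-term memory feeding a longer-term emotional model, might be sketched as a tiny three-stage pipeline. Everything here is an assumption made for illustration: the class name `LayeredAppraisal`, the noise floor, the memory size, and the use of an exponential moving average to turn recent appraisals into a slowly changing mood. It is not a description of any shipping robot.

```python
from collections import deque
from typing import Optional


class LayeredAppraisal:
    """Hypothetical three-stage pipeline: perceive -> appraise in context -> remember."""

    def __init__(self, noise_floor: float = 0.1, memory_size: int = 5, mood_rate: float = 0.2):
        self.noise_floor = noise_floor               # stimuli weaker than this are ignored
        self.short_term = deque(maxlen=memory_size)  # keeps only the most recent appraisals
        self.long_term_mood = 0.0                    # slow-moving baseline in -1.0 .. 1.0
        self.mood_rate = mood_rate                   # how strongly recent memory shifts the mood

    def perceive(self, valence: float, intensity: float) -> Optional[float]:
        """Stage 1: the perceptual input filter drops stimuli too weak to notice."""
        if intensity < self.noise_floor:
            return None
        return valence * intensity

    def appraise(self, signal: float) -> float:
        """Stage 2: color the raw signal with the current long-term mood."""
        return signal + 0.5 * self.long_term_mood

    def remember(self, appraisal: float) -> None:
        """Stage 3: store the appraisal and nudge the mood toward the recent average."""
        self.short_term.append(appraisal)
        recent = sum(self.short_term) / len(self.short_term)
        self.long_term_mood += self.mood_rate * (recent - self.long_term_mood)
        self.long_term_mood = max(-1.0, min(1.0, self.long_term_mood))

    def process(self, valence: float, intensity: float) -> Optional[float]:
        """Run one stimulus through all three stages; None means it was filtered out."""
        signal = self.perceive(valence, intensity)
        if signal is None:
            return None
        appraisal = self.appraise(signal)
        self.remember(appraisal)
        return appraisal


robot = LayeredAppraisal()
for valence, intensity in [(1.0, 0.9), (1.0, 0.05), (0.8, 0.7)]:
    print(robot.process(valence, intensity), round(robot.long_term_mood, 3))
```

Using a moving average means a single stimulus only nudges the baseline mood, while a run of similar experiences shifts it more noticeably.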

Like a human being, a robot could have more than one response to a stimulus. An initial reaction or reflex might quickly give way to a more “considered response”, caused by stored and shared information in a neural-like network. Stephan describes a hypothetical scene in which someone enters a room and begins taking swings at a friend. At first, the friend on the defensive might immediately assume a fighting stance; however, it might take only a few seconds for him or her to realize that the other person is just “horsing around” and being a bit of an antagonist for sport.

This string of events offers a simple way to visualize the stages of an emotional reaction. Perception, context, and analysis all play a part in the responses of a complex entity, including an advanced robot. Robots with such potentially complex emotional models seem different from AI entities programmed to respond to human emotions.
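The two-stage reaction in Bugaj’s example, a fast reflex followed by a context-aware reappraisal, can be sketched as two functions. The `Stimulus` fields, the labels such as "fighting stance" and "play along", and the `memory` dictionary are hypothetical choices made only for this illustration.

```python
from dataclasses import dataclass


@dataclass
class Stimulus:
    kind: str                # hypothetical label, e.g. "swing" or "wave"
    source_is_friend: bool
    source_is_smiling: bool


def reflex(stimulus: Stimulus) -> str:
    """Fast stage: react to the raw stimulus with no context at all."""
    return "fighting stance" if stimulus.kind == "swing" else "neutral"


def considered(stimulus: Stimulus, initial: str, memory: dict[str, int]) -> str:
    """Slower stage: re-evaluate the reflex using context and stored experience."""
    if initial != "fighting stance":
        return initial
    horsed_around_before = memory.get("horsing_around", 0) > 0
    if stimulus.source_is_friend and (stimulus.source_is_smiling or horsed_around_before):
        return "play along"  # the swing is recognized as horsing around
    return initial           # no reassuring context, so keep the defensive reflex


def react(stimulus: Stimulus, memory: dict[str, int]) -> list[str]:
    """Return the sequence of responses: the reflex, then the considered one."""
    first = reflex(stimulus)
    return [first, considered(stimulus, first, memory)]


print(react(Stimulus("swing", source_is_friend=True, source_is_smiling=True), {}))
```

Keeping the reflex and the reappraisal separate mirrors the scene above: the defensive stance happens immediately, and only the second stage has access to who the person is and what has happened before.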

These AI don’t necessarily need to take a human-like form (I’m thinking of the movie Her), as long as they can communicate in a language that humans understand. In the past few years, innovators have begun bringing domestic social robots such as Jibo and EmoSPARK to the crowdfunding site Indiegogo; these robots are meant to enhance human well-being through intelligent response capabilities.

Patrick Levy Rosenthal, founder of EmoSpace, envisioned a device that connects to the various electronic objects in our homes, able to adjust their function to positively affect our emotional state. “For the last 20 years, I believe that robotics and artificial intelligence failed humans…we still see them as a bunch of silicon… we know that they don’t understand what we feel.”

Rosenthal set out to change this perception with EmoSPARK, a cube-shaped AI device that connects to other objects in the user’s home, such as an MP3 player. According to Rosenthal, the device tracks over 180 points on a person’s face, as well as the relations between those points: if you’re smiling, your lips will be stretched and your eyes narrower. It also detects movement and voice tonality to read emotional cues. It can then respond to those cues with spoken prompts and suggestions for improving mood, for example asking whether its user would like to hear a joke or a favorite song; it can also process and respond to spoken commands.
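Reading a smile from the relations between facial points, rather than from any single point, can be illustrated with just a few landmarks. This is a hypothetical sketch, not EmoSPARK’s method: it assumes six named 2D landmarks (the names are invented here), normalizes distances by the inter-eye distance so the result does not depend on how close the face is to the camera, and compares against a neutral baseline using arbitrary thresholds. A real system tracking 180 points would rely on far richer features.

```python
import math

Point = tuple[float, float]


def dist(a: Point, b: Point) -> float:
    """Euclidean distance between two 2D landmarks."""
    return math.hypot(a[0] - b[0], a[1] - b[1])


def smile_features(lm: dict[str, Point]) -> tuple[float, float]:
    """Return (mouth width, eye openness), both normalized by inter-eye distance."""
    scale = dist(lm["left_eye_center"], lm["right_eye_center"])
    mouth_width = dist(lm["mouth_left"], lm["mouth_right"]) / scale
    eye_open = dist(lm["left_eye_top"], lm["left_eye_bottom"]) / scale
    return mouth_width, eye_open


def is_smiling(lm: dict[str, Point], baseline: dict[str, Point],
               stretch: float = 1.1, narrow: float = 0.9) -> bool:
    """Smiling = lips stretched AND eyes narrowed relative to a neutral baseline."""
    mw, eo = smile_features(lm)
    base_mw, base_eo = smile_features(baseline)
    return mw > stretch * base_mw and eo < narrow * base_eo


def suggest(lm: dict[str, Point], baseline: dict[str, Point]) -> str:
    """Choose a spoken prompt based on whether the user appears to be smiling."""
    if is_smiling(lm, baseline):
        return "You seem happy. Shall I keep the music going?"
    return "Would you like to hear a joke or your favorite song?"


neutral = {
    "left_eye_center": (30, 40), "right_eye_center": (70, 40),
    "left_eye_top": (30, 37), "left_eye_bottom": (30, 43),
    "mouth_left": (38, 80), "mouth_right": (62, 80),
}
smiling = dict(neutral, left_eye_top=(30, 38.5), left_eye_bottom=(30, 41.5),
               mouth_left=(34, 78), mouth_right=(66, 78))
print(suggest(smiling, neutral))
```

Requiring both cues together (wider mouth and narrower eyes) reflects the relation-based description above and makes the check less likely to fire on, say, a yawn that only changes the mouth.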

While robots that respond to humans’ emotional states and requests may soon be available to the masses, robots with their own emotional models, ones that can “laugh and cry” autonomously, so to speak, remain out of reach for the time being.