Someday this could also happen at the US Congress, the Supreme Court, the UN, NATO, the IAEA, the WTO, the World Bank, etc.

IBM’s AI researchers seem to favour recreational drug use, free university education and free healthcare.

Stanford University PhD candidate Song Han, who works under advisor and networking pioneer Dr. Bill Dally, responded in a soft-spoken and thoughtful way to the question of whether the coupled software and hardware architecture he developed might change the world.

In fact, instead of answering the question directly, he pointed to the range of applications, both in the present and future, that will be driven by near real-time inference for complex deep neural networks—all a roundabout way of showing not just why what he is working toward is revolutionary, but why the missing pieces he is filling in have kept neural network-fed services at a relative constant.

There is one large barrier to that future Han considers imminent—one pushed by an existing range of neural network-driven applications powering all aspects of the consumer economy and, over time, the enterprise. And it’s less broadly technical than it is efficiency-driven. After all, considering the mode of service delivery of these applications, often lightweight, power-aware devices, how much computation can be effectively packed into the memory of such devices—and at what cost to battery life or overall power? Devices aside, these same concerns, at a grander level of scale, are even more pertinent at the datacenter where some bulk of the inference is handled.

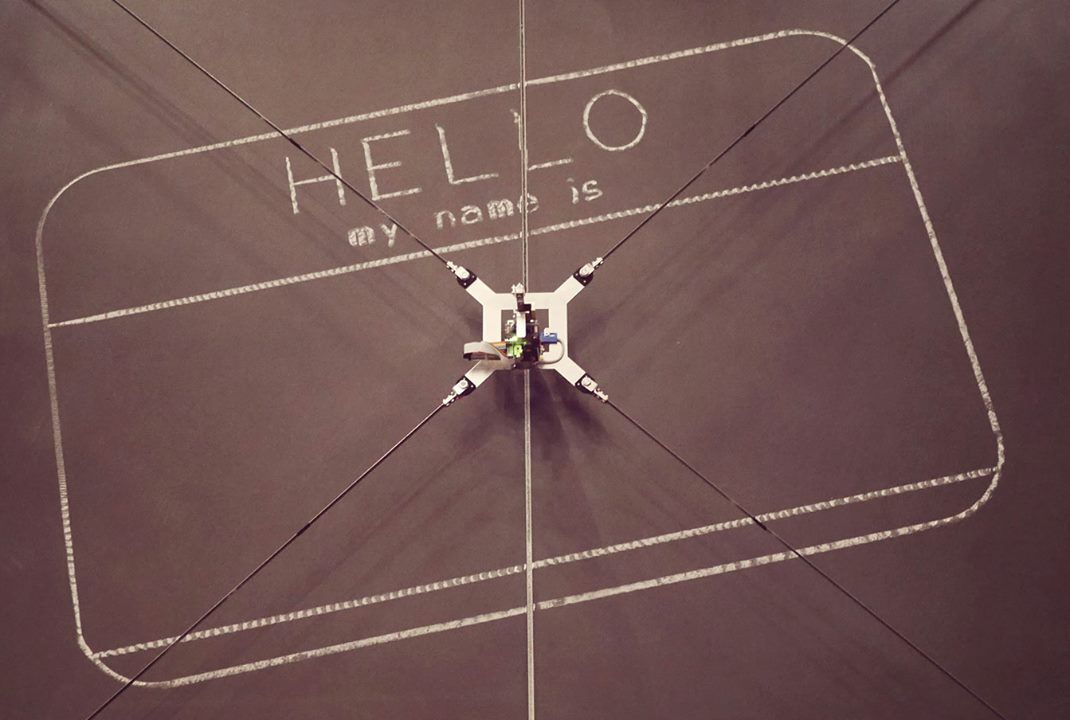

Meet Otto, the robot that draws.

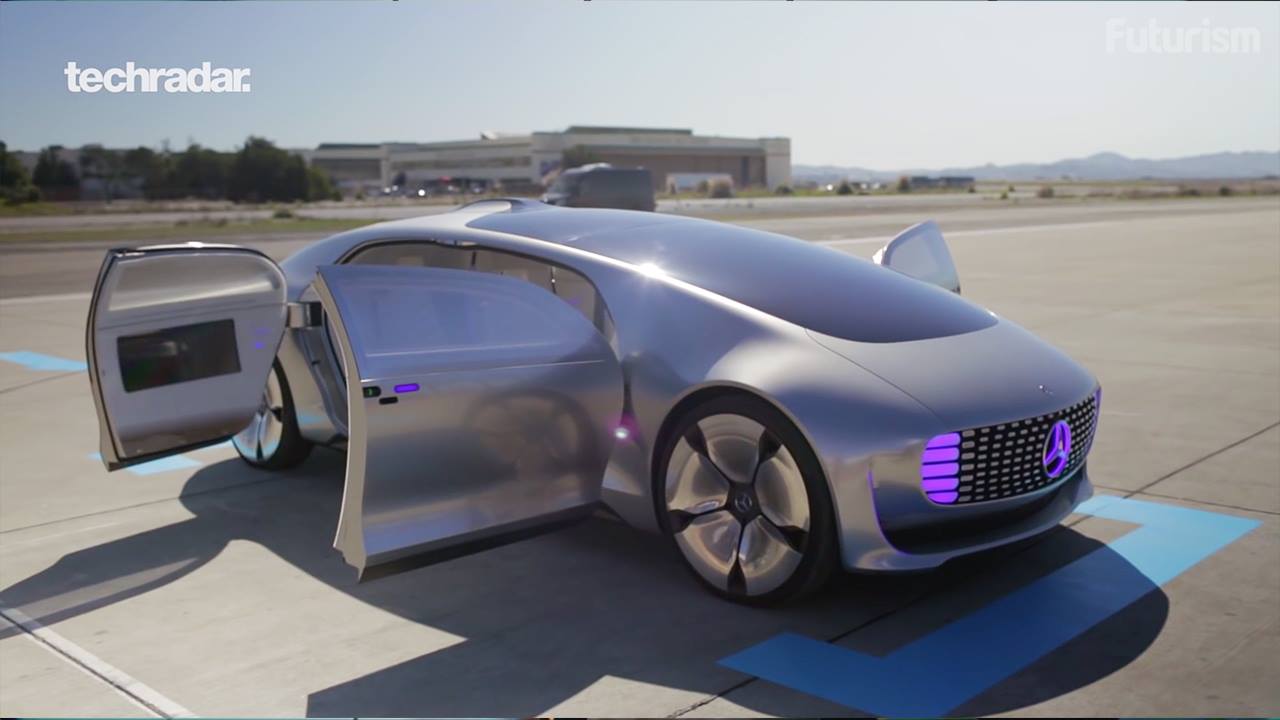

Autonomous cars have finally arrived, and they’re pretty remarkable. Here’s a look at the best on the line.

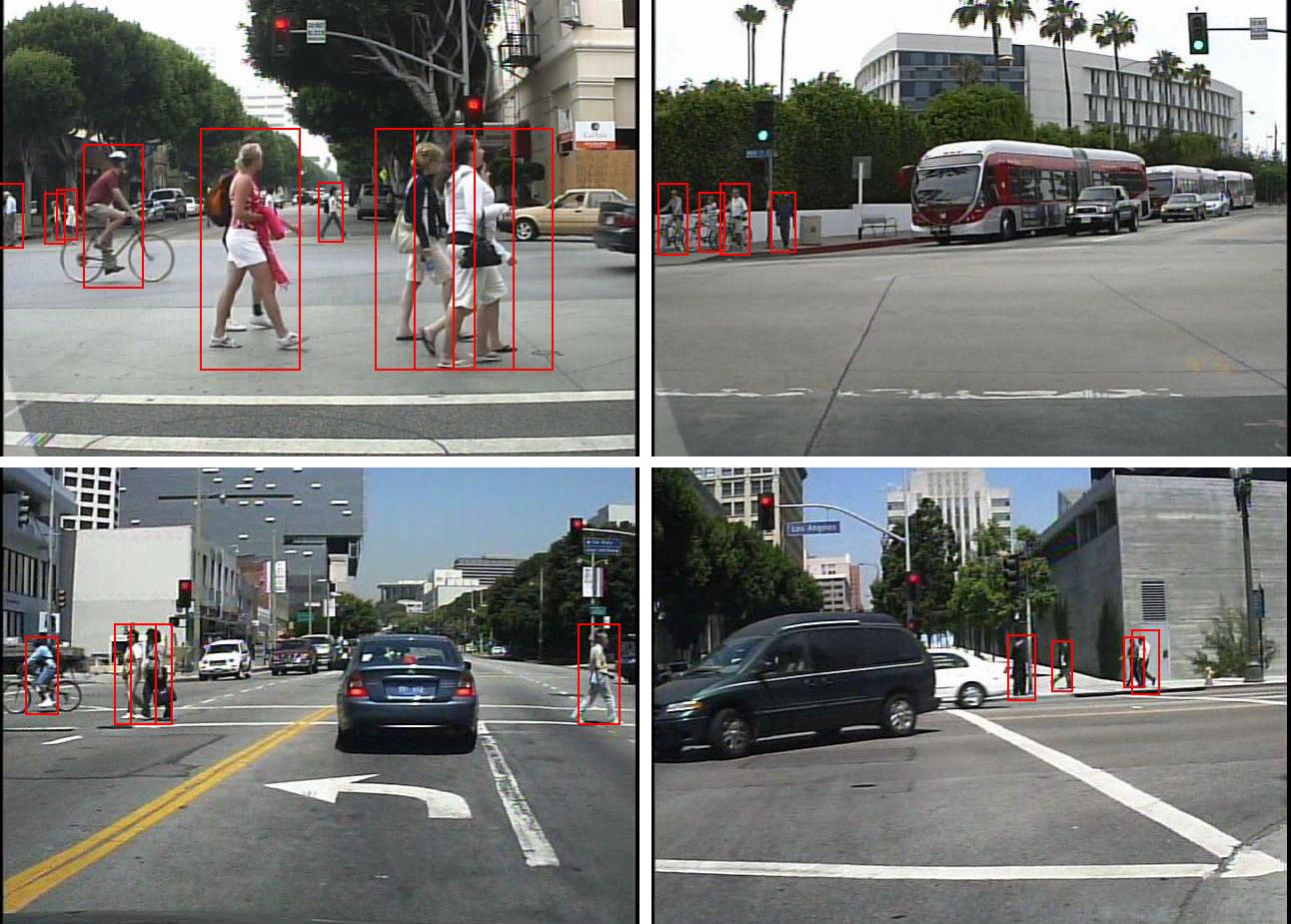

What if computers could recognize objects as well as the human brain could? Electrical engineers at the University of California, San Diego have taken an important step toward that goal by developing a pedestrian detection system that performs in near real-time (2−4 frames per second) and with higher accuracy (close to half the error) compared to existing systems. The technology, which incorporates deep learning models, could be used in “smart” vehicles, robotics and image and video search systems.

Microsoft’s (NASDAQ:MSFT) AI program XiaoIce, which has been tested on Chinese social media sites, has shown positive results, a good sign for Microsoft.

Around the world, cities are choking on smog. But a new AI system aims to analyze just how bad the situation is by aggregating data from smartphone pictures captured far and wide across cities.

The project, called AirTick, has been developed by researchers from Nanyang Technological University in Singapore, reports New Scientist. The reasoning is pretty simple: Deploying air sensors isn’t cheap and takes a long time, so why not make use of the sensors that everyone has in their pocket?

The result is an app which allows people to report smog levels by uploading an image tagged with time and location. Then, a machine learning algorithm chews through the data and compares it against official air-quality measurements where it can. Over time, the team hopes the software will slowly be able to predict air quality from smartphone images alone.

A friend of mine on LinkedIn and I both knew it was only a matter of time before AI and quantum computing were announced together, and that Google, with D-Wave, would indeed be leading the charge. BTW, once this pairing of technologies is complete, get ready for some amazing AI technology, including robotics, to come out.

But there may not be any competitors for a while if Google’s “Ace of Spades” newbie performs as they predict. According to Hartmut Neven, head of its Quantum AI Lab, this baby can run:

“We found that for problem instances involving nearly 1,000 binary variables, quantum annealing significantly outperforms its classical counterpart, simulated annealing. It is more than 10 to the power of 8 times faster than simulated annealing running on a single core.”

In layperson’s lingo: this sucker will run 100 million times faster than the clunker on your desk. Problem is, it may not be on the production line for a while.

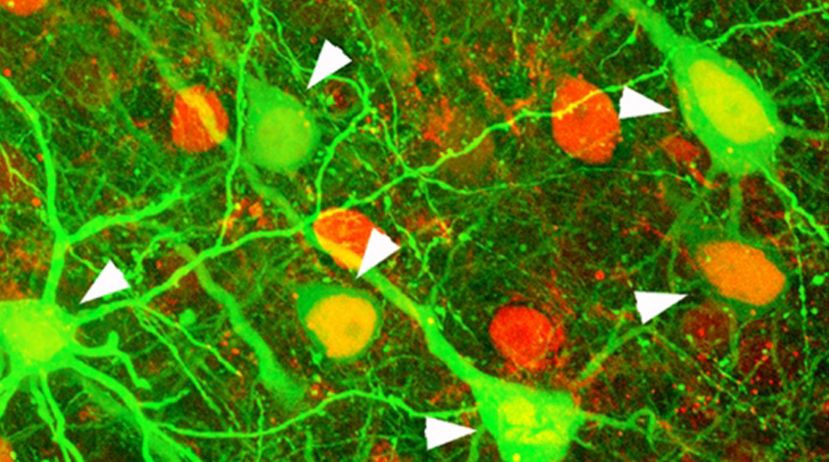

Carnegie Mellon University is embarking on a five-year, $12 million research effort to reverse-engineer the brain and “make computers think more like humans,” funded by the U.S. Intelligence Advanced Research Projects Activity (IARPA). The research is led by Tai Sing Lee, a professor in the Computer Science Department and the Center for the Neural Basis of Cognition (CNBC).

The research effort, through IARPA’s Machine Intelligence from Cortical Networks (MICrONS) research program, is part of the U.S. BRAIN Initiative to revolutionize the understanding of the human brain.

A “Human Genome Project” for the brain’s visual system

“MICrONS is similar in design and scope to the Human Genome Project, which first sequenced and mapped all human genes,” Lee said. “Its impact will likely be long-lasting and promises to be a game changer in neuroscience and artificial intelligence.”

Bon Appétit: could 3D printers soon be making your next first-class meal on a flight, or in restaurants with robot servers? The US Army believes this is the way forward for its own meals.

Beats the heck out of MREs.