A realistic article on AI, especially on AI being manipulated by others for their own gain, which I have also identified as the real risk of AI.

Artificial intelligence (AI), once the seeming red-headed stepchild of the scientific community, has come a long way in the past two decades. Most of us have reconciled ourselves to the fact that we can't live without our smartphones and Siri, and AI's seemingly omnipotent presence has reached the nearest and farthest corners of our lives, from robo-advisors on Wall Street and crime-spotting security cameras to big data analysis with Google's BigQuery and IBM Watson's entry into medical diagnostics.

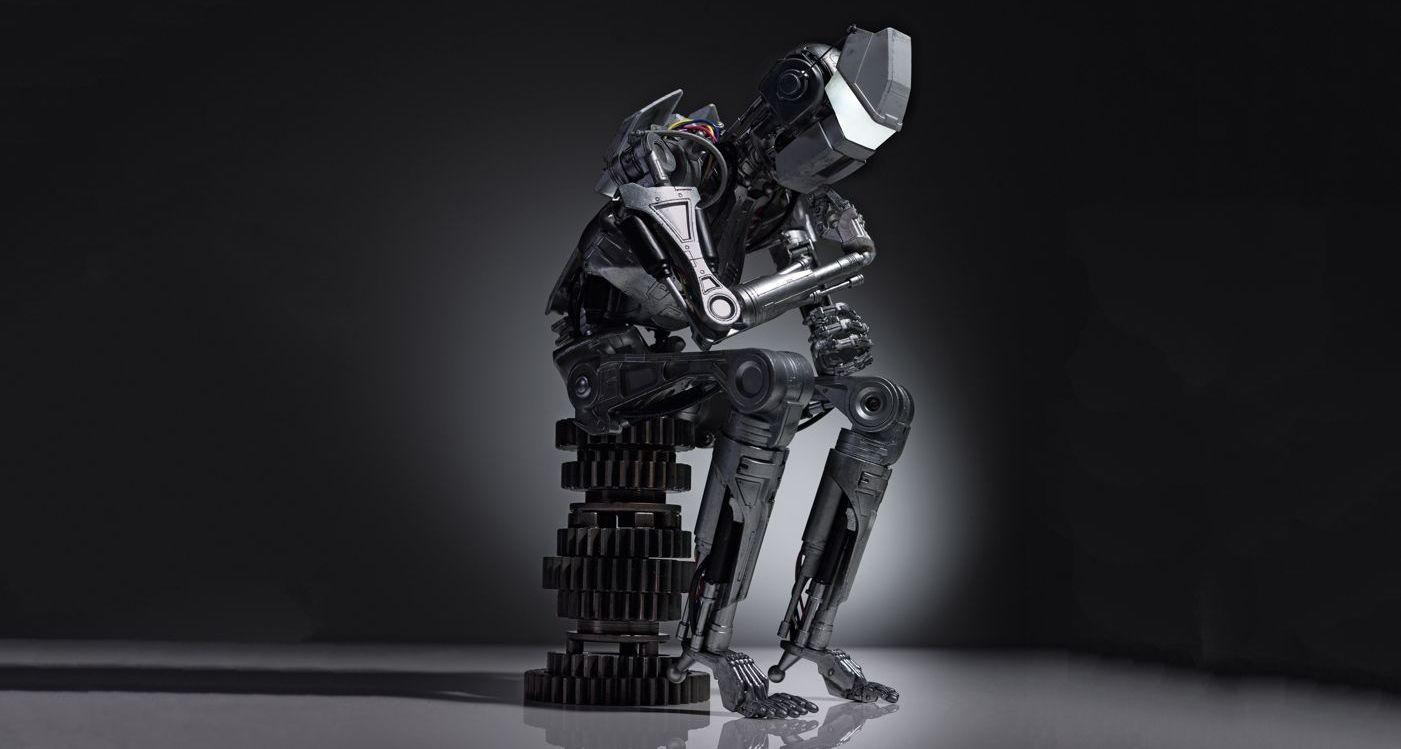

AI is improving our lives and making them more efficient in many unforeseen ways, but it could also erode the economic and cultural structures humans depend on. The Future of Life Institute's tagline sums it up succinctly: "Technology is giving life the potential to flourish like never before…or to self-destruct." Humans are the creators, but will we always control our revolutionary inventions?

To much of the general public, AI is AI is AI, but this is only partly true. Today, AI development follows two primary strands: ANI (Artificial Narrow Intelligence) and AGI (Artificial General Intelligence). ANI is often termed "weak AI" and is "the expert" of the pair, applying its intelligence to specific, narrowly defined tasks. Most of the technology we surround ourselves with (including Siri) falls into the ANI bucket. AGI, often termed "strong AI," is the envisioned next step beyond ANI: a machine able to match human intelligence across a broad range of tasks rather than just one. It is the type of AI behind dreams of building a machine that achieves human levels of consciousness.