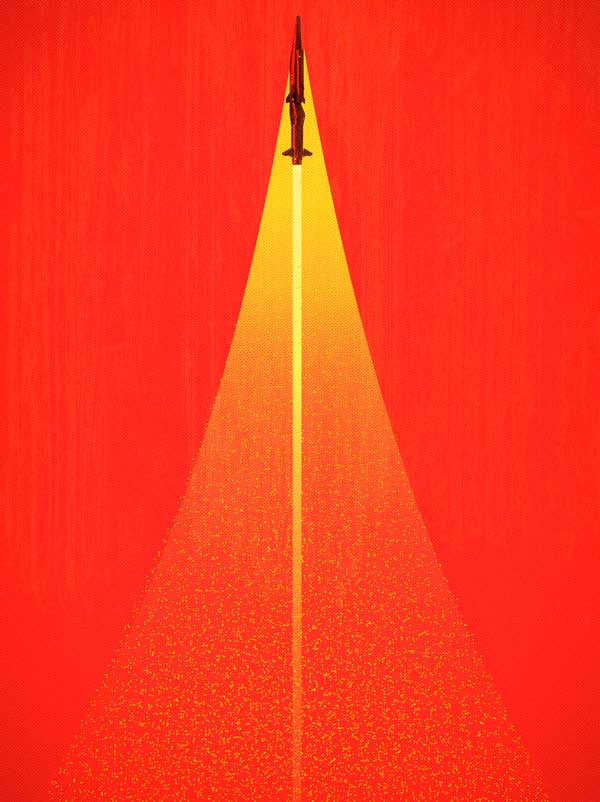

It seems some countries are now switching to drone swarms.

From Syria to Libya to Nagorno-Karabakh, this new method of military offense has been brutally effective. We are witnessing a revolution in the history of warfare, one that is causing panic, particularly in Europe.

In an analysis written for the European Council on Foreign Relations, Gustav Gressel, a senior policy fellow, argues that the extensive (and successful) use of military drones by Azerbaijan in its recent conflict with Armenia over Nagorno-Karabakh holds “distinct lessons for how well Europe can defend itself.”

Gressel warns that Europe would be doing itself a disservice if it simply dismissed the Nagorno-Karabakh fighting as “a minor war between poor countries.” In this, Gressel is correct: the military defeat Azerbaijan inflicted on Armenia was not a fluke, but rather a manifestation of how thoroughly Baku’s major ally in the fighting, Turkey, has perfected the art of drone warfare. Gressel’s conclusion – that “most of the [European Union’s] armies… would do as miserably as the Armenian Army” when faced with such a threat – is spot on.