Ask an Information Architect, a CDO, or a Data Architect (Enterprise or otherwise), and they will tell you they have always known that information/data is a basic staple, like electricity, and that they are glad folks are finally realizing it. So the view we apply to utilities as core to our infrastructure and survival should also apply to information. In fact, in some areas information can be even more important than electricity, when you consider that information can launch missiles, cure diseases, make you poor or wealthy, and take down a government or even a country.

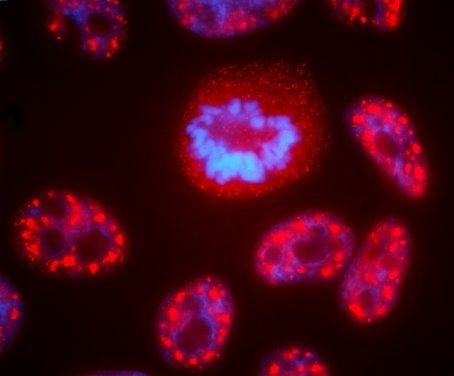

What is information? Is it energy, matter, or something completely different? Although we take this word for granted in today's world of fast Internet and digital media, this was not the case in 1948, when Claude Shannon laid the foundations of information theory. His landmark paper interpreted information in purely mathematical terms, a decision that dematerialized information forevermore. Not surprisingly, many people nowadays claim, rather unthinkingly, that human consciousness can be expressed as "pure information", i.e. as something immaterial graced with digital immortality. And yet there is something fundamentally materialistic about information that we often ignore, although it literally stares us in the eye: the hardware that makes information happen.

As users, we constantly interact with information via a machine of some kind, such as a laptop, smartphone or wearable. As developers or programmers, we code at a computer terminal. As computer or network engineers, we often have to wade through the sweltering heat of a server farm, or deal with the material properties of optical fibre or copper in our designs. Hardware and software are the fundamental ingredients of our digital world, necessary not only for engineering information systems but also for interacting with them. But this status quo is about to be massively disrupted by Artificial Intelligence.

A decade from now, the postmillennial youngsters of the late 2020s will find it hard to believe that once upon a time the world was full of computers, smartphones and tablets, and that people had to interact with these machines in order to access information or build information systems. For them, information will be more like electricity: it will always be there, always available to power whatever you want to do. This will be possible because artificial intelligence systems will manage information complexity so effectively that they can deliver the right information to the right person at the right time, almost instantly. So let's see what that would mean, and how different it would be from what we have today.
