But, given the rapidly evolving quantum computing landscape, that may not matter.

And that’s where physicists are getting stuck.

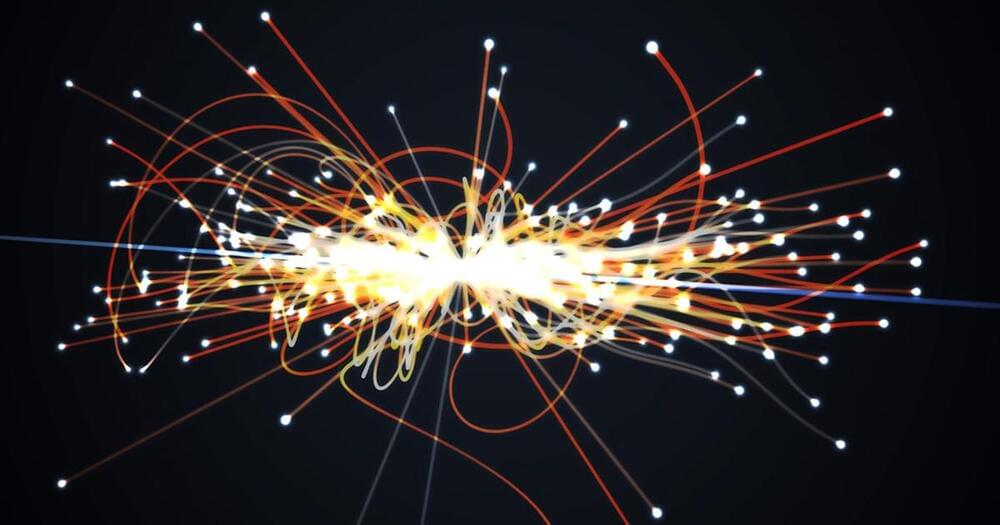

Zooming in to that hidden center involves virtual particles — quantum fluctuations that subtly influence each interaction’s outcome. The fleeting existence of the quark pair above, like many virtual events, is represented by a Feynman diagram with a closed “loop.” Loops confound physicists — they’re black boxes that introduce additional layers of infinite scenarios. To tally the possibilities implied by a loop, theorists must turn to a summing operation known as an integral. These integrals take on monstrous proportions in multi-loop Feynman diagrams, which come into play as researchers march down the line and fold in more complicated virtual interactions.
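To make the "loop" idea concrete, here is a schematic pair of such integrals. It assumes, for simplicity, a single scalar particle of mass m rather than the quarks and gluons of the real problem. The external momentum p is fixed by the colliding particles, but momentum conservation leaves each loop momentum (k, and ℓ for the second loop) free, so every allowed value must be integrated over. Each additional loop therefore adds another four-dimensional integral:

$$
I_{1}(p^2) = \int \frac{d^4k}{(2\pi)^4}\,\frac{1}{\left(k^2 - m^2 + i\varepsilon\right)\left((k+p)^2 - m^2 + i\varepsilon\right)}
$$

$$
I_{2}(p^2) = \int \frac{d^4k}{(2\pi)^4}\int \frac{d^4\ell}{(2\pi)^4}\,\frac{1}{\left(k^2 - m^2 + i\varepsilon\right)\left(\ell^2 - m^2 + i\varepsilon\right)\left((k+\ell+p)^2 - m^2 + i\varepsilon\right)}
$$

As written, both integrals diverge in four dimensions and have to be regularized (for instance with dimensional regularization) before they yield finite predictions.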

Physicists have algorithms to compute the probabilities of no-loop and one-loop scenarios, but many two-loop collisions bring computers to their knees. This imposes a ceiling on predictive precision — and on how well physicists can understand what quantum theory says.

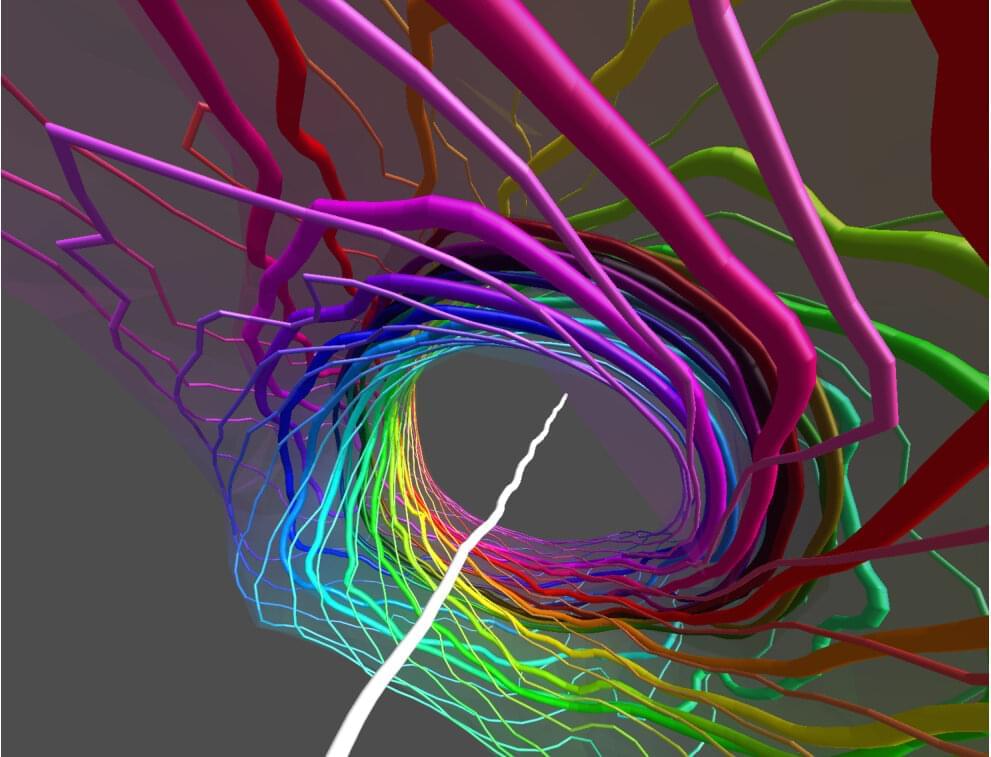

Scientists at the University of Birmingham have succeeded in creating an experimental model of an elusive kind of fundamental particle called a skyrmion in a beam of light.

The breakthrough provides physicists with a real system demonstrating the behavior of skyrmions, first proposed 60 years ago by a University of Birmingham mathematical physicist, Professor Tony Skyrme.

Skyrme’s idea used the structure of spheres in 4-dimensional space to guarantee the indivisible nature of a skyrmion particle in 3 dimensions. 3D particle-like skyrmions are theorized to tell us about the early origins of the Universe, or about the physics of exotic materials or cold atoms. However, despite being investigated for over 50 years, 3D skyrmions have been seen very rarely in experiments. Most current research into skyrmions focuses on 2D analogs, which show promise for new technologies.
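The 2D analogs make "indivisible" concrete. A unit-vector field n(x, y) carries an integer topological charge, Q = (1/4π) ∫ n · (∂ₓn × ∂ᵧn) dx dy, and no smooth deformation can change it. The following is a minimal pure-Python sketch, not anything from the Birmingham experiment. It builds a hypothetical compact skyrmion texture and estimates Q with central finite differences. The profile (polar angle falling linearly from π to 0), the radius, and the grid size are all assumptions chosen for illustration.

```python
import math

def skyrmion_field(x, y, radius=1.0):
    """Unit vector n(x, y) for a hypothetical compact 2D skyrmion.

    The polar angle falls linearly from pi at the centre to 0 at `radius`;
    outside that radius the field is uniform, pointing along +z.
    """
    r = math.hypot(x, y)
    if r >= radius:
        return (0.0, 0.0, 1.0)
    theta = math.pi * (1.0 - r / radius)
    phi = math.atan2(y, x)
    return (math.sin(theta) * math.cos(phi),
            math.sin(theta) * math.sin(phi),
            math.cos(theta))

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def skyrmion_number(half_width=1.5, points=301, radius=1.0):
    """Estimate Q = (1/4pi) * integral of n . (dn/dx x dn/dy) on a square grid."""
    h = 2.0 * half_width / (points - 1)
    coords = [-half_width + i * h for i in range(points)]
    # field[i][j] holds n at (x_i, y_j)
    field = [[skyrmion_field(x, y, radius) for y in coords] for x in coords]
    total = 0.0
    for i in range(1, points - 1):
        for j in range(1, points - 1):
            # Central differences in x (index i) and y (index j).
            dn_dx = tuple((field[i + 1][j][k] - field[i - 1][j][k]) / (2 * h) for k in range(3))
            dn_dy = tuple((field[i][j + 1][k] - field[i][j - 1][k]) / (2 * h) for k in range(3))
            total += dot(field[i][j], cross(dn_dx, dn_dy)) * h * h
    return total / (4.0 * math.pi)

q = skyrmion_number()
print(f"estimated skyrmion number: {q:.4f}")
```

The estimate lands very close to an integer, -1 here. The sign comes from the core pointing opposite to the uniform background under this convention. Refining the grid moves the result toward the integer rather than away from it, which is the numerical face of the charge being indivisible.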

Want AI that can do 10 trillion operations using just one watt? Do the math using analog circuits instead of digital.

There’s no argument in the astronomical community—rocket-propelled spacecraft can take us only so far. The SLS will likely take us to Mars, and future rockets might be able to help us reach even more distant points in the solar system. But Voyager 1 only just left the solar system, and it was launched in 1977. The problem is clear: we cannot reach other stars with rocket fuel. We need something new.

“We will never reach even the nearest stars with our current propulsion technology in even 10 millennium,” writes Physics Professor Philip Lubin of the University of California Santa Barbara in a research paper titled A Roadmap to Interstellar Flight. “We have to radically rethink our strategy or give up our dreams of reaching the stars, or wait for technology that does not exist.”

Lubin received funding from NASA last year to study the possibility of using photonic laser thrust, a technology that does exist, as a new system to propel spacecraft to relativistic speeds, allowing them to travel farther than ever before. The project is called DEEP IN, or Directed Energy Propulsion for Interstellar Exploration, and the technology could send a 100-kg (220-pound) probe to Mars in just three days, if research models are correct. A much heavier, crewed spacecraft could reach the red planet in a month, about a fifth of the time predicted for the SLS.
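As a rough check on what "Mars in three days" implies, the sketch below divides a straight-line Earth-Mars distance by the trip time. The distances are our assumptions, not figures from Lubin's paper: about 55 million km near the closest approaches, and about 400 million km when the planets are on opposite sides of the Sun. A real trajectory would also be somewhat longer than a straight line.

```python
SPEED_OF_LIGHT_KM_S = 299_792.458
SECONDS_PER_DAY = 86_400

def average_speed_km_s(distance_km, days):
    """Average speed needed to cover distance_km in the given number of days."""
    return distance_km / (days * SECONDS_PER_DAY)

# Assumed straight-line Earth-Mars distances (approximate, not from the article).
for label, distance_km in [("close approach", 55e6), ("far side of the Sun", 400e6)]:
    v = average_speed_km_s(distance_km, days=3)
    print(f"{label}: {v:,.0f} km/s ({v / SPEED_OF_LIGHT_KM_S:.2%} of light speed)")
```

Even at the far-side distance, the implied average speed is only about half a percent of the speed of light. The 100-kg Mars case is fast, but far from relativistic.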

Until 350 years ago, there was a distinction between what people saw on Earth and what they saw in the sky. There did not seem to be any connection.

Then, in 1687, Isaac Newton showed that the planets move under the same forces we experience here on Earth. To many people, the idea that the heavens could be explained with mathematics called into question the need for a God.

But in the late 20th century, arguments for God were resurrected. The Standard Model of particle physics and general relativity are accurate, but their equations contain constants that have no explanation; they have to be measured. Many of them seem to be very finely tuned.

Scientists point out, for example, that the mass of a neutrino is about 2 × 10⁻³⁷ kg. It has been argued that if this mass were off by just one decimal place, life would not exist: if the mass were too high, the additional gravity would cause the universe to collapse, and if it were too low, galaxies could not form because the universe would have expanded too fast.

On closer examination, this argument has some problems. It exaggerates the fine tuning by using misleading units of measurement, making it seem much more unlikely than it may be. The neutrino mass is expressed in kilograms, and using kilograms to measure something this small is like measuring a person’s height in light-years. A better unit for the neutrino would be the electron volt (strictly, eV/c²).
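The unit point is easier to see with numbers. The short conversion below takes the 2 × 10⁻³⁷ kg figure and expresses it in eV/c² and in picograms. It also gives the light-year comparison, assuming a hypothetical 1.75 m person. The conversion constants are standard defined or CODATA values, not figures from the article.

```python
# Standard constants (CODATA / IAU), not taken from the article.
KG_PER_EV_C2 = 1.782_661_92e-36          # mass of 1 eV/c^2 in kilograms
KG_PER_PICOGRAM = 1e-15                  # 1 pg = 1e-12 g = 1e-15 kg
METERS_PER_LIGHT_YEAR = 9_460_730_472_580_800

def kg_to_ev_c2(mass_kg):
    """Convert a mass in kilograms to electron volts (eV/c^2)."""
    return mass_kg / KG_PER_EV_C2

def kg_to_picograms(mass_kg):
    """Convert a mass in kilograms to picograms."""
    return mass_kg / KG_PER_PICOGRAM

neutrino_kg = 2e-37        # figure quoted in the article
person_height_m = 1.75     # hypothetical height for the light-year comparison

print(f"neutrino: {neutrino_kg:.1e} kg = {kg_to_ev_c2(neutrino_kg):.3f} eV/c^2 "
      f"= {kg_to_picograms(neutrino_kg):.1e} pg")
print(f"person: {person_height_m} m = {person_height_m / METERS_PER_LIGHT_YEAR:.1e} light-years")
```

In eV/c² the neutrino mass comes out around 0.1, an ordinary-looking number. In picograms it is still buried under a large negative exponent, much like the person's height in light-years.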

Another point is that most of the constants could not really take any arbitrary value; they will hover around values close to what they actually are. The mass of a neutrino could not be the mass of a bowling ball, because such massive particles with the properties of a neutrino could not have been created during the Big Bang.

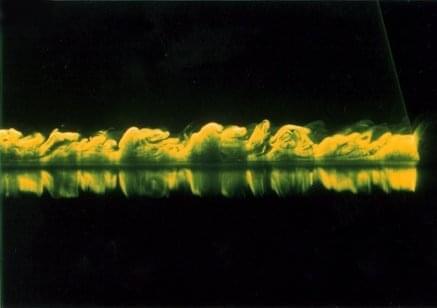

Turbulence makes many people uneasy or downright queasy. And it’s given researchers a headache, too. Mathematicians have been trying for a century or more to understand the turbulence that arises when a flow interacts with a boundary, but a formulation has proven elusive.

Now an international team of mathematicians, led by UC Santa Barbara professor Björn Birnir and University of Oslo professor Luiza Angheluta, has published a complete description of boundary layer turbulence. The paper, which appears in Physical Review Research, synthesizes decades of work on the topic. The theory unites empirical observations with the Navier-Stokes equations, the mathematical foundation of fluid dynamics, in a single mathematical formula.

This phenomenon was first described around 1920 by Hungarian physicist Theodore von Kármán and German physicist Ludwig Prandtl, two luminaries in fluid dynamics. “They were honing in on what’s called boundary layer turbulence,” said Birnir, director of the Center for Complex and Nonlinear Science. This is turbulence caused when a flow interacts with a boundary, such as the fluid’s surface, a pipe wall, the surface of the Earth and so forth.
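For a flavor of the classical boundary-layer picture that grew out of von Kármán's and Prandtl's work, here is the textbook "law of the wall." It is written in wall units: u⁺ = u/u_τ and y⁺ = y·u_τ/ν, where u_τ is the friction velocity and ν the kinematic viscosity. This is not the new Birnir-Angheluta formula, which the article does not reproduce. The constants κ ≈ 0.41 and B ≈ 5.0 are commonly quoted empirical values, and the sharp switch at y⁺ = 11 is a crude simplification that ignores the buffer layer.

```python
import math

KAPPA = 0.41           # von Kármán constant (commonly quoted empirical value)
B = 5.0                # log-law intercept (values around 5.0 to 5.2 are common)
Y_PLUS_SWITCH = 11.0   # assumed crude switch point; the real buffer layer is smooth

def u_plus(y_plus):
    """Mean streamwise velocity in wall units at wall distance y_plus."""
    if y_plus <= 0:
        raise ValueError("y_plus must be positive")
    if y_plus < Y_PLUS_SWITCH:
        return y_plus                       # viscous sublayer: u+ = y+
    return math.log(y_plus) / KAPPA + B     # logarithmic law of the wall

for y in (1, 5, 30, 100, 1000):
    print(f"y+ = {y:>5}: u+ = {u_plus(y):6.2f}")
```

Near the wall, velocity grows linearly with distance. Farther out, it grows only logarithmically, which is why the velocity profile looks so flat across most of a turbulent boundary layer.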

As robots are introduced in an increasing number of real-world settings, it is important for them to be able to effectively cooperate with human users. In addition to communicating with humans and assisting them in everyday tasks, it might thus be useful for robots to autonomously determine whether their help is needed or not.

Researchers at Franklin & Marshall College have recently been trying to develop computational tools that could enhance the performance of socially assistive robots, by allowing them to process social cues given by humans and respond accordingly. In a paper pre-published on arXiv and presented at the AI-HRI symposium 2021 last week, they introduced a new technique that allows robots to autonomously detect when it is appropriate for them to step in and help users.

“I am interested in designing robots that help people with everyday tasks, such as cooking dinner, learning math, or assembling Ikea furniture,” Jason R. Wilson, one of the researchers who carried out the study, told TechXplore. “I’m not looking to replace people that help with these tasks. Instead, I want robots to be able to supplement human assistance, especially in cases where we do not have enough people to help.”

Have you ever seen the popular movie called The Matrix? In it, the main character Neo realizes that he and everyone else he had ever known had been living in a computer-simulated reality. But even after taking the red pill and waking up from his virtual world, how can he be so sure that this new reality is the real one? Could it be that this new reality of his is also a simulation? In fact, how can anyone tell the difference between simulated reality and a non-simulated one? The short answer is, we cannot. Today we are looking at the simulation hypothesis which suggests that we all might be living in a simulation designed by an advanced civilization with computing power far superior to ours.

The simulation hypothesis was popularized in 2003 by Nick Bostrom, a philosopher at the University of Oxford. He proposed that members of an advanced civilization with enormous computing power might run simulations of their ancestors, perhaps to learn about their culture and history. If that is the case, he reasoned, they may have run many simulations, so the vast majority of minds would be simulated rather than original. So there is a high chance that you and everyone you know are just a simulation. Don’t buy it? There is more!
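Bostrom's 2003 paper expresses this reasoning as a formula for the fraction of human-type observers who are simulated: f_sim = f_P·f_I·N_I / (f_P·f_I·N_I + 1). Here f_P is the fraction of human-level civilizations that reach a "posthuman" stage, and f_I is the fraction of those interested in running ancestor simulations. N_I is the average number of simulations such an interested civilization runs. The input values below are purely hypothetical.

```python
def simulated_fraction(f_p, f_i, n_i):
    """Bostrom's fraction of observers with human-type experiences who are simulated.

    f_p: fraction of human-level civilizations that reach a posthuman stage
    f_i: fraction of posthuman civilizations interested in ancestor simulations
    n_i: average number of ancestor simulations run by an interested civilization
    """
    x = f_p * f_i * n_i   # average number of simulations per human-level civilization
    return x / (x + 1.0)

# Hypothetical inputs: small fractions combined with many simulations
# still push the simulated share toward 1.
for f_p, f_i, n_i in [(0.0, 0.5, 1e6), (0.01, 0.01, 1e6), (0.1, 0.1, 1e9)]:
    print(f"f_p={f_p}, f_i={f_i}, n_i={n_i:.0e}: f_sim = {simulated_fraction(f_p, f_i, n_i):.6f}")
```

The formula is why Bostrom frames the argument as a trilemma. Unless f_P, f_I, or N_I is tiny, f_sim ends up close to 1.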

Elon Musk points out that just a few decades ago, games like Pong consisted of only two rectangles and a dot. Today, games have become very realistic with 3D modeling, and they are still improving. So, with virtual reality and other advances, it seems likely that in a few thousand years, if we don’t go extinct first, we will be able to simulate every detail of our minds and bodies very accurately. Games would then become indistinguishable from reality, and there would be an enormous number of them. If that is the case, he argues, “then the odds that we are in base reality are 1 in billions”.

There are other reasons to think we might be in a simulation. For example, the more we learn about the universe, the more it appears to be based on mathematical laws. Max Tegmark, a cosmologist at MIT, argues that our universe is exactly like a computer game, which is defined by mathematical laws. So, for him, we may be just characters in a computer game discovering the rules of our own universe.

With our current understanding of the universe, it seems impossible to simulate the entire universe, given the potentially infinite number of things within it. But would we even need to? A simulation would only need to render the minds occupying the simulated reality and their immediate surroundings. For example, in a video game, new environments render as the player approaches them. Those environments do not need to exist before the character gets close, and skipping them saves a lot of computing power. This would be especially true of a simulation as big as our universe. So it could be argued that distant galaxies, atoms, and anything else we are not actively observing simply do not exist. They render into existence once someone starts to observe them.
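The "render on approach" idea is the same lazy, on-demand generation that many games use for large worlds. Below is a minimal sketch using a hypothetical `LazyWorld` class (not any particular engine's API). Terrain chunks are created only the first time they come into view. Each chunk's content is derived from the world seed and its coordinates, so it comes out the same regardless of when it is first observed.

```python
import random

class LazyWorld:
    """Hypothetical game world that generates terrain chunks on first observation."""

    def __init__(self, seed):
        self.seed = seed
        self.chunks = {}  # (cx, cy) -> terrain height; only chunks seen so far

    def _generate(self, cx, cy):
        # Seed a private RNG from the world seed and chunk coordinates, so a
        # chunk is identical no matter when (or whether) it is first observed.
        rng = random.Random(f"{self.seed}:{cx}:{cy}")
        return rng.randint(0, 100)

    def observe(self, cx, cy, view_radius=1):
        """Return the chunks around (cx, cy), generating unseen ones on demand."""
        visible = {}
        for dx in range(-view_radius, view_radius + 1):
            for dy in range(-view_radius, view_radius + 1):
                key = (cx + dx, cy + dy)
                if key not in self.chunks:
                    self.chunks[key] = self._generate(*key)
                visible[key] = self.chunks[key]
        return visible

world = LazyWorld(seed=42)
print(len(world.chunks))   # → 0: nothing exists until someone looks
world.observe(0, 0)
print(len(world.chunks))   # → 9: the 3x3 block around the observer
world.observe(1, 0)
print(len(world.chunks))   # → 12: only the newly visible column is added
```

Deriving each chunk from the seed is the design choice that matters. The world stores nothing it has not yet shown, yet stays consistent when a region is revisited or seen by a second observer.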

On his podcast StarTalk, astrophysicist Neil deGrasse Tyson discussed the simulation hypothesis with comedian Chuck Nice. Nice suggested that maybe the speed of light has a finite limit because, if it didn’t, we would be able to reach other galaxies very quickly. Tyson was surprised by this idea and added that the programmer might have put in this limit to make sure we cannot get to faraway places before the programmer has had time to program them.

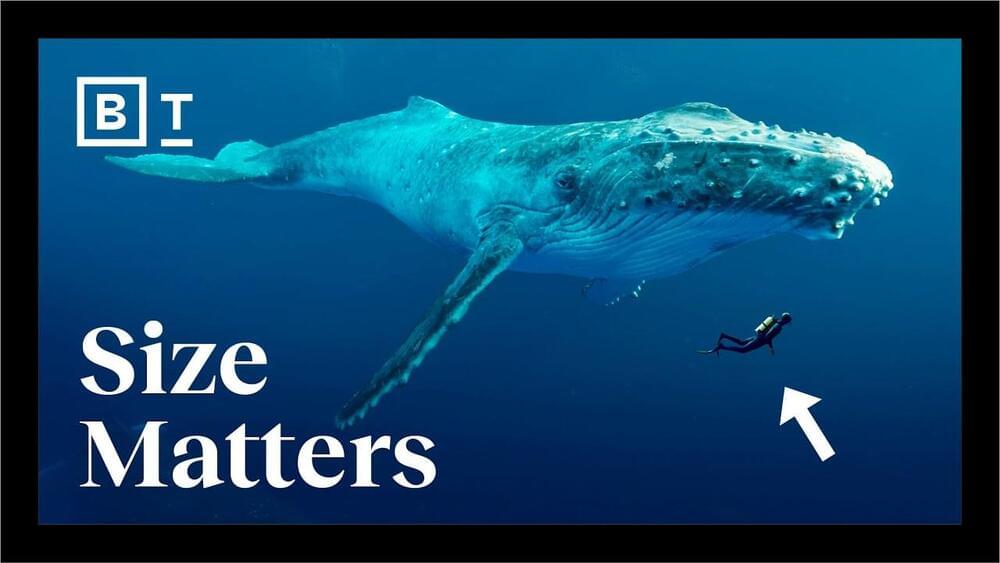

It’s one of the most fascinating aspects of the natural world: shapes repeat over and over. The branches of a tree extending into the sky look much the same as blood vessels extending through a human lung, if upside-down. The largest mammal, the whale, is a scaled-up version of the smallest, the shrew. Recent research even suggests the structure of the human brain resembles that of the entire universe. It’s everywhere you look, really. Nature reuses its most successful shapes.

Theoretical physicist Geoffrey West of the Santa Fe Institute in New Mexico is concerned with fundamental questions in physics, and there are few more fundamental than this one: why does nature continually reuse the same non-linear shapes and structures from the smallest scale to the very largest? In a new Big Think video (see above), West explains that the scaling laws at work are nothing less than “the generic universal mathematical and physical properties of the multiple networks that make an organism viable and allow it to develop and grow.”

“I think it’s one of the more remarkable properties of life, actually,” West added.
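The article does not spell out West's scaling laws, but his best-known result concerns Kleiber's law. Under that law, an animal's metabolic rate grows roughly as its body mass to the 3/4 power, and West and colleagues explained the exponent through the geometry of branching supply networks. The minimal sketch below assumes that 3/4 exponent and an arbitrary normalization `b0`, neither of which comes from the video. It shows what a sublinear exponent means when comparing a small animal to a much larger one.

```python
KLEIBER_EXPONENT = 0.75  # assumed: Kleiber's 3/4-power law

def metabolic_rate(mass_kg, b0=1.0, exponent=KLEIBER_EXPONENT):
    """Metabolic rate B = b0 * M**exponent (b0 is an arbitrary normalization)."""
    return b0 * mass_kg ** exponent

def compare(mass_ratio, exponent=KLEIBER_EXPONENT):
    """Change in total and per-kilogram metabolic rate when mass grows by mass_ratio."""
    total_ratio = (metabolic_rate(mass_ratio, exponent=exponent)
                   / metabolic_rate(1.0, exponent=exponent))
    per_kg_ratio = total_ratio / mass_ratio
    return total_ratio, per_kg_ratio

total, per_kg = compare(10_000)
print(f"10,000x the mass -> {total:.0f}x the metabolic rate, {per_kg:.2f}x per kilogram")
```

A 10,000-fold increase in mass gives only a 1,000-fold increase in metabolic rate. Each kilogram of the larger animal therefore runs at a tenth of the rate of the smaller one, the kind of economy of scale West attributes to the underlying networks.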

Learning science is about understanding complex systems and interactions among their entities. Telescopes are for observing objects that are far away, and microscopes are for exploring the tiniest objects. But what tools do we have for visualizing general patterns, processes, or relationships that can be defined in terms of compact mathematical models? Visualizing the unseeable can be a powerful teaching tool.

SETI Institute affiliate Dr. Mojgan Haganikar has written a book, Visualizing Dynamic Systems, that categorizes the visualization skills needed for various types of scientific problems. With the emergence of new technologies, we have more powerful tools to visualize invisible concepts, complex systems, and large datasets by revealing patterns and inter-relations in new ways. Join the SETI Institute’s Pamela Harman as she explores what is possible with Haganikar.
