Developed by analogy with Quantum Electrodynamics (QED), which describes the interactions due to the electromagnetic force carried by photons, Quantum Chromodynamics (QCD) is the physical theory that explains the interactions mediated by the strong force, one of the four fundamental forces of nature.

A new collection of papers published in The European Physical Journal Special Topics and edited by Diogo Boito, Instituto de Fisica de Sao Carlos, Universidade de Sao Paulo, Brazil, and Irinel Caprini, Horia Hulubei National Institute for Physics and Nuclear Engineering, Bucharest, Romania, brings together recent developments in the investigation of QCD.

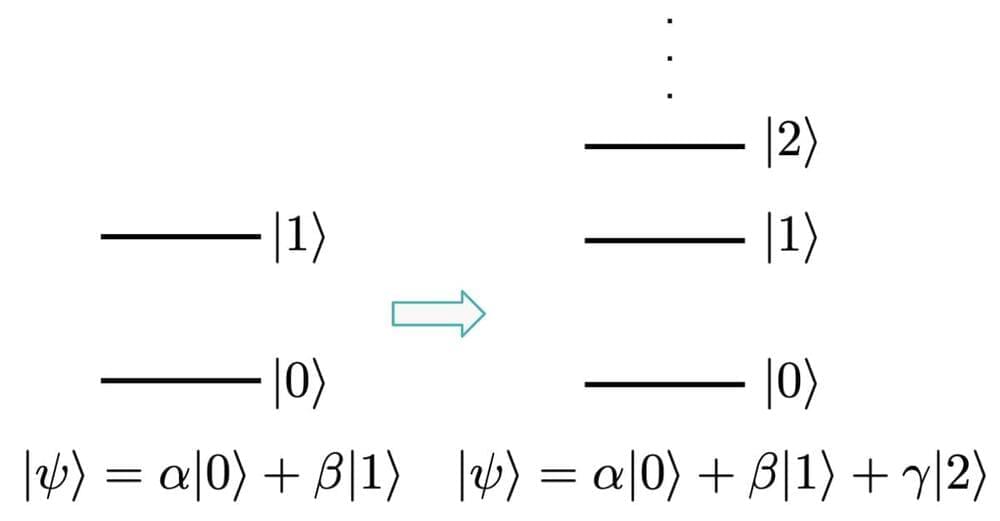

The editors explain in a special introduction to the collection that the strong force described by QCD, which is carried by gluons between quarks, which form the fundamental building blocks of matter, has a much stronger coupling than the electromagnetic force. As a result, the divergence of the perturbation expansions used in the mathematical description of a system can have important physical consequences. The editors point out that this has become increasingly relevant with recent high-precision calculations in QCD, made possible by advances in so-called higher-order loop computations.
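To give a feel for why a larger coupling makes divergence matter sooner, here is a minimal toy sketch. It is not taken from the collection and is not a QCD calculation. It assumes a series whose n-th term is n! times the n-th power of a coupling `a`, a standard textbook stand-in for a divergent expansion. The function names and the coupling values are hypothetical, chosen only for illustration.

```python
from math import factorial


def series_terms(coupling, n_max):
    """Terms n! * coupling**n of a toy divergent series (illustrative only)."""
    return [factorial(n) * coupling**n for n in range(n_max + 1)]


def smallest_term_order(coupling, n_max=60):
    """Order n at which the toy series has its smallest term.

    Beyond this order the terms grow again, so adding more of them
    no longer improves the approximation.
    """
    terms = series_terms(coupling, n_max)
    return min(range(len(terms)), key=lambda n: terms[n])


if __name__ == "__main__":
    # Hypothetical coupling values, not physical QED or QCD couplings.
    for a in (0.03, 0.12, 0.3):
        n_star = smallest_term_order(a)
        smallest = series_terms(a, n_star)[n_star]
        print(f"coupling={a}: terms shrink up to order {n_star}, "
              f"smallest term is about {smallest:.3g}")
```

In this toy model the terms stop shrinking at an order of roughly one over the coupling. A weak coupling therefore allows many useful orders and a very small residual uncertainty, while a strong coupling reaches its best accuracy after only a few orders. That is the sense in which higher-order computations make the divergence practically relevant.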