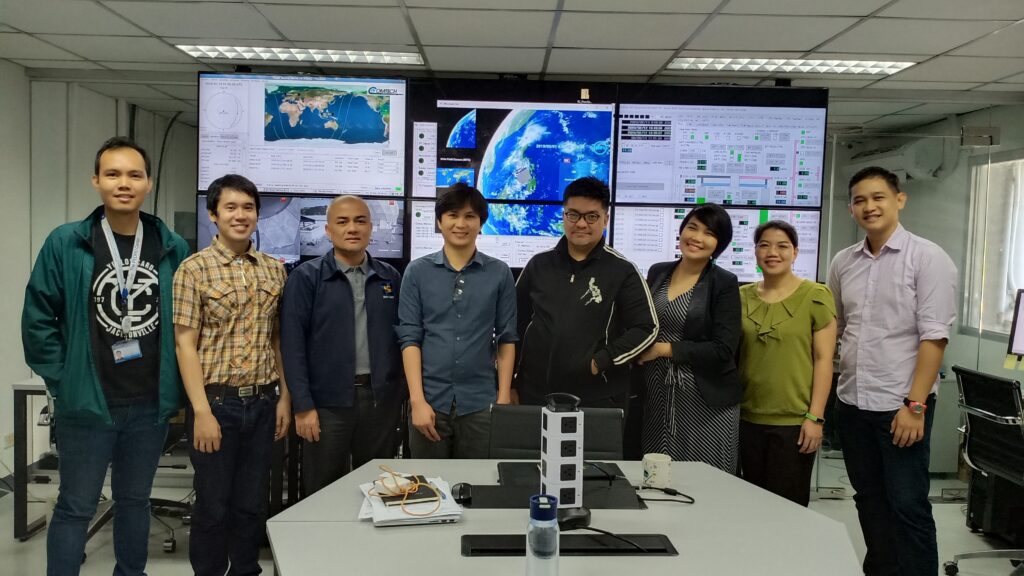

MANILA, Philippines — A dengue case forecasting system built on space data by Philippine developers won the 2019 National Aeronautics and Space Administration (NASA) International Space Apps Challenge. Out of more than 29,000 participants in 71 countries, the solution was one of six global winners, taking the Best Use of Data award, which recognizes the solution that best makes space data accessible or leverages it in a unique application.

Dengue fever is a viral, infectious tropical disease spread primarily by female *Aedes aegypti* mosquitoes. The World Health Organization reported 271,480 cases and 1,107 deaths in the Philippines from January 1 to August 31, 2019. Dominic Vincent D. Ligot, Mark Toledo, Frances Claire Tayco, and Jansen Dumaliang Lopez from CirroLytix developed a model that forecasts dengue cases from climate and digital data and pinpoints possible hotspots using satellite data.

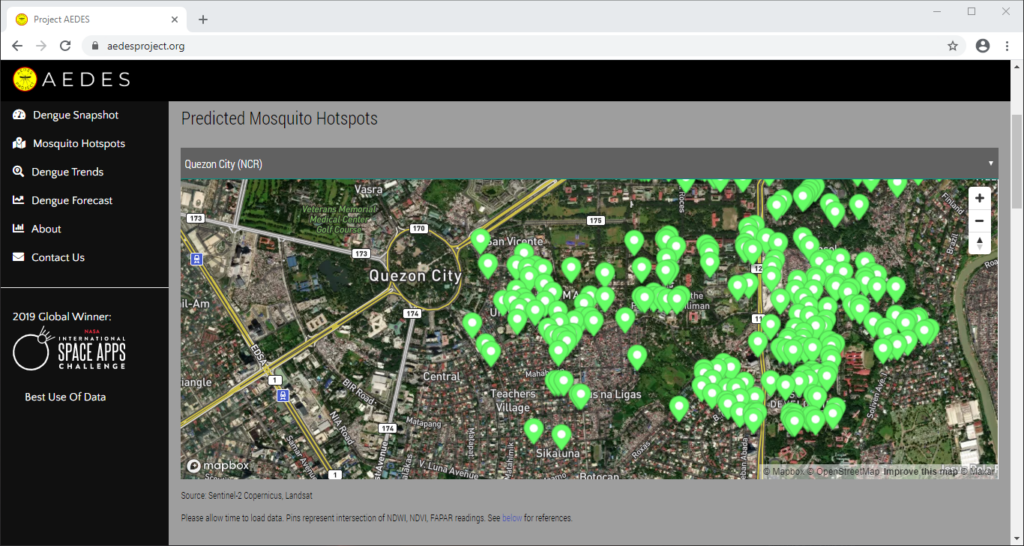

The system correlates imagery from the Copernicus Sentinel-2 and Landsat 8 satellites, climate data from the Philippine Atmospheric, Geophysical and Astronomical Services Administration of the Department of Science and Technology (DOST-PAGASA), and Google search trends, then displays potential dengue hotspots in a web interface.

From satellite spectral bands such as green, red, and near-infrared (NIR), the team calculates the Fraction of Absorbed Photosynthetically Active Radiation (FAPAR) and the Normalized Difference Vegetation Index (NDVI) to identify areas with green vegetation, and the Normalized Difference Water Index (NDWI) to identify areas with water. Combining these indices reveals potential areas of stagnant water that could serve as mosquito breeding grounds. These areas are then extracted as coordinates using QGIS, a free and open-source, cross-platform desktop geographic information system.
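The index arithmetic described above can be sketched in Python with NumPy. This is a minimal illustration under stated assumptions, not the team's actual pipeline. It assumes co-registered reflectance arrays for the green, red, and NIR bands (bands B3, B4, and B8 on Sentinel-2; B3, B4, and B5 on Landsat 8) and uses the green/NIR form of NDWI (McFeeters). It combines the indices with a hypothetical rule that flags water pixels adjacent to vegetation. The thresholds `water_thresh` and `veg_thresh`, the function names, and the neighbourhood rule are all illustrative assumptions. FAPAR, which requires a more involved biophysical model, is not reproduced here.

```python
import numpy as np


def normalized_difference(a, b):
    """Return (a - b) / (a + b) element-wise, using 0 where a + b == 0."""
    a = np.asarray(a, dtype=np.float64)
    b = np.asarray(b, dtype=np.float64)
    denom = a + b
    out = np.zeros_like(denom)
    np.divide(a - b, denom, out=out, where=denom != 0)
    return out


def ndvi(nir, red):
    """NDVI = (NIR - Red) / (NIR + Red); high values suggest green vegetation."""
    return normalized_difference(nir, red)


def ndwi(green, nir):
    """McFeeters NDWI = (Green - NIR) / (Green + NIR); positive values suggest open water."""
    return normalized_difference(green, nir)


def candidate_pixels(green, red, nir, water_thresh=0.0, veg_thresh=0.3):
    """Hypothetical rule: flag water pixels with vegetation in their 3x3 neighbourhood.

    Thresholds are illustrative assumptions, not values from the AEDES project.
    Returns an (N, 2) array of (row, col) pixel indices.
    """
    water = ndwi(green, nir) > water_thresh
    veg = ndvi(nir, red) > veg_thresh

    # Dilate the vegetation mask by one pixel: OR together the 9 shifted copies.
    rows, cols = veg.shape
    padded = np.pad(veg, 1, mode="constant", constant_values=False)
    near_veg = np.zeros_like(veg)
    for dr in (-1, 0, 1):
        for dc in (-1, 0, 1):
            near_veg |= padded[1 + dr : 1 + dr + rows, 1 + dc : 1 + dc + cols]

    return np.argwhere(water & near_veg)


def pixel_to_coords(row, col, geotransform):
    """Map a pixel's centre to map coordinates using a GDAL-style 6-tuple geotransform."""
    x0, dx, rx, y0, ry, dy = geotransform
    c, r = col + 0.5, row + 0.5
    return (float(x0 + c * dx + r * rx), float(y0 + c * ry + r * dy))


if __name__ == "__main__":
    # Toy 3x3 reflectance tile: vegetation everywhere except a water pixel in the centre.
    green = np.full((3, 3), 0.10)
    green[1, 1] = 0.30
    red = np.full((3, 3), 0.05)
    red[1, 1] = 0.10
    nir = np.full((3, 3), 0.40)
    nir[1, 1] = 0.10

    hits = candidate_pixels(green, red, nir)
    print(hits.tolist())  # [[1, 1]]

    # Hypothetical 10 m grid origin, for illustration only.
    gt = (500000.0, 10.0, 0.0, 1600000.0, 0.0, -10.0)
    for row, col in hits:
        print(pixel_to_coords(row, col, gt))  # (500015.0, 1599985.0)
```

In the toy tile, the centre pixel has a positive NDWI and is surrounded by pixels with high NDVI, so it is the only candidate. The georeferencing step uses a GDAL-style geotransform purely as an assumption. In the article, coordinate extraction is done in QGIS.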

https://www.youtube.com/watch?v=uzpI775XoY0

Check out the website here: http://aedesproject.org/

“AEDES aims to improve public health response against dengue fever in the Philippines by pinpointing possible hotspots using Earth observations,” Dr. Argyro Kavvada of NASA Earth Science and Booz Allen Hamilton explained.

The DOST-Philippine Council for Industry, Energy and Emerging Technology Research and Development (DOST-PCIEERD) deputy executive director Engr. Raul C. Sabularse said that the winning solution “benefits the community especially those countries suffering from malaria and dengue, just like the Philippines. I think it has a global impact. This is the new science to know the potential areas where dengue might occur. It is a good app.”

“It is very relevant to the Philippines and other countries which usually [have] problems with dengue. The team was able to show that it’s not really difficult to have all the data you need and integrate all of them and make them accessible to everyone for them to be able to use it. It’s a working model,” according to Monchito B. Ibrahim, industry development committee chairman of the Analytics Association of the Philippines and former undersecretary of the Department of Information and Communications Technology.

Dr. Paula S. Bontempi, leader of the Space Apps global organizing team and acting deputy director of the Earth Science Mission of NASA’s Science Mission Directorate, remembers the winning team’s pitch from when she led the hackathon in Manila. “They were terrific. Well deserved!” she said.

“I am very happy we landed in the winning circle. This would be a big help particularly in addressing our health-related problems. One of the Sustainable Development Goals (SDGs) is on Good Health and Well Being and the problem they are trying to address is analysis related to dengue,” said Science and Technology Secretary Fortunato T. de la Peña.

Rex Lor from the United Nations Development Programme (UNDP) in the Philippines explained that the winning solution showcases the “pivotal role of cutting-edge digital technologies in the creation of strategies for sustainable development in the face of evolving development issues.”

U.S. Public Affairs Counselor Philip W. Roskamp and PLDT Enterprise Core Business Solutions vice president and head Joseph Ian G. Gendrano congratulated the latest group of Pinoy winners.

Sec. de la Peña is also pleased with this second victory for the Philippines in NASA’s global competition. The first winning solution, ISDApp, uses “data analysis, particularly NASA data, to be able to help our fishermen make decisions on when is the best time to catch fish.” It is currently being incubated by Animo Labs, the technology business incubator and Fab Lab of De La Salle University, in partnership with DOST-PCIEERD. Project AEDES will also be incubated by Animo Labs.

De La Salle University president Br. Raymundo B. Suplido FSC hopes that NASA Space Apps would “encourage our young Filipino researchers and scientists to create ideas and startups based on space science and technology, and pave the way for the promotion and awareness of the programs of our own Philippine space agency.”

Philippine vice president Leni Robredo recognized Space Apps as a platform “where some of our country’s brightest minds can collaborate in finding and creating solutions to our most pressing problems, not just in space, but more importantly here on Earth.”

“Space Apps is a community of scientists and engineers, artists and hackers coming together to address key issues here on Earth. At the heart of Space Apps are data that come to us from spacecraft flying around Earth and are looking at our world,” explained Dr. Thomas Zurbuchen, NASA associate administrator for science.

“Personally, I’m more interested in supporting the startups that are coming out of the Space Apps Challenge,” according to DOST-PCIEERD executive director Dr. Enrico C. Paringit.

In the Philippines, Space Apps is a NASA-led initiative organized in collaboration with De La Salle University, Animo Labs, DOST-PCIEERD, PLDT InnoLab, American Corner Manila, the U.S. Embassy, and software developer Michael Lance M. Domagas, and it celebrates Design Week Philippines with the Design Center of the Philippines of the Department of Trade and Industry. It is organized globally by Booz Allen Hamilton, Mindgrub, and SecondMuse.

Space Apps is a NASA incubator innovation program. The next hackathon will be on October 2–4, 2020.

#SpaceApps #SpaceAppsPH