Until roughly 350 years ago, people drew a sharp distinction between what they saw on Earth and what they saw in the sky. There did not seem to be any connection between the two.

Then, in 1687, Isaac Newton showed that the planets move under the same force, gravity, that we experience here on Earth. If the heavens could be explained with mathematics, many people felt this called into question the need for a God.

But in the late 20th century, arguments for God were revived. The Standard Model of particle physics and general relativity are remarkably accurate, yet their equations contain constants that have no theoretical explanation; their values have to be measured. Many of them appear to be very finely tuned.

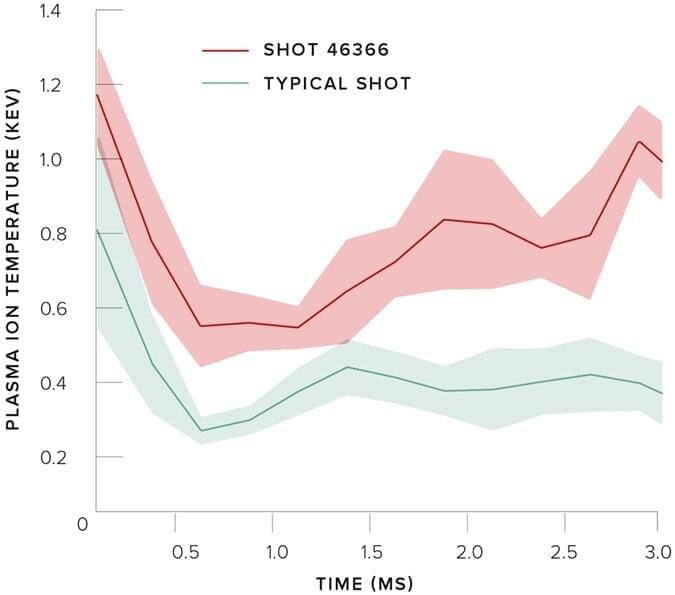

Scientists point out, for example, that the mass of a neutrino is about 2×10⁻³⁷ kg. It has been argued that if this mass were off by just one decimal place (a factor of ten), life would not exist. If the mass were too high, the additional gravity would cause the universe to collapse; if it were too low, galaxies could not form because the universe would have expanded too fast.

On closer examination, this argument has some problems. It exaggerates the degree of fine tuning by using misleading units of measurement, making fine tuning seem much more unlikely than it may be. The neutrino mass is expressed in kilograms, and using kilograms to measure something this small is the equivalent of measuring a person's height in light-years. A more natural unit for the neutrino is the electronvolt (strictly eV/c², via E = mc²), in which its mass is only about 0.1 eV.
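To make the unit point concrete, here is a short Python sketch that re-expresses the quoted neutrino mass in eV/c² (using E = mc² and the exact SI values of c and the electronvolt) and, for comparison, a person's height in light-years. The 1.75 m height is a hypothetical value chosen only for illustration, and the function names are likewise illustrative.

```python
# Sketch: how the choice of unit changes the "look" of a number.
# Assumption: 1.75 m is a hypothetical adult height, used only for illustration.

C = 299_792_458.0                      # speed of light in m/s (exact SI value)
EV = 1.602_176_634e-19                 # one electronvolt in joules (exact SI value)
LIGHT_YEAR = 9_460_730_472_580_800.0   # metres in one light-year (IAU definition)


def kg_to_ev(mass_kg: float) -> float:
    """Convert a mass in kg to its rest energy in eV, i.e. the mass in eV/c^2, via E = m c^2."""
    return mass_kg * C**2 / EV


def metres_to_light_years(length_m: float) -> float:
    """Convert a length in metres to light-years."""
    return length_m / LIGHT_YEAR


neutrino_kg = 2e-37   # neutrino mass as quoted in the text
height_m = 1.75       # hypothetical person's height

print(f"neutrino mass: {neutrino_kg:.1e} kg = {kg_to_ev(neutrino_kg):.2f} eV/c^2")
print(f"person's height: {height_m} m = {metres_to_light_years(height_m):.1e} light-years")
```

Run as written, the first line reports a mass of about 0.11 eV/c², an unremarkable-looking number, while the second turns an ordinary height into roughly 1.8×10⁻¹⁶ light-years. In both cases the tiny exponent comes from the unit, not from anything about the quantity itself.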

A second point is that most of these constants could not really take on any arbitrary value; they would likely fall somewhere close to their actual values. A neutrino could not have the mass of a bowling ball, because particles that massive with the properties of a neutrino could not have been created during the Big Bang.