✅ Instagram: https://www.instagram.com/pro_robots

You are on the PRO Robots channel, and in this video we will talk about artificial intelligence: copying the structure of the brain, mutual understanding and mutual assistance, self-learning and the rethinking of biological life forms, replacing people in various jobs, and cheating. What have neural networks learned lately? All the new skills and superpowers of AI-based systems in one video!

0:00 In this video

0:26 Isomorphic Labs

1:14 Artificial intelligence trains robots

2:01 MIT researchers’ algorithm teaches robots social skills

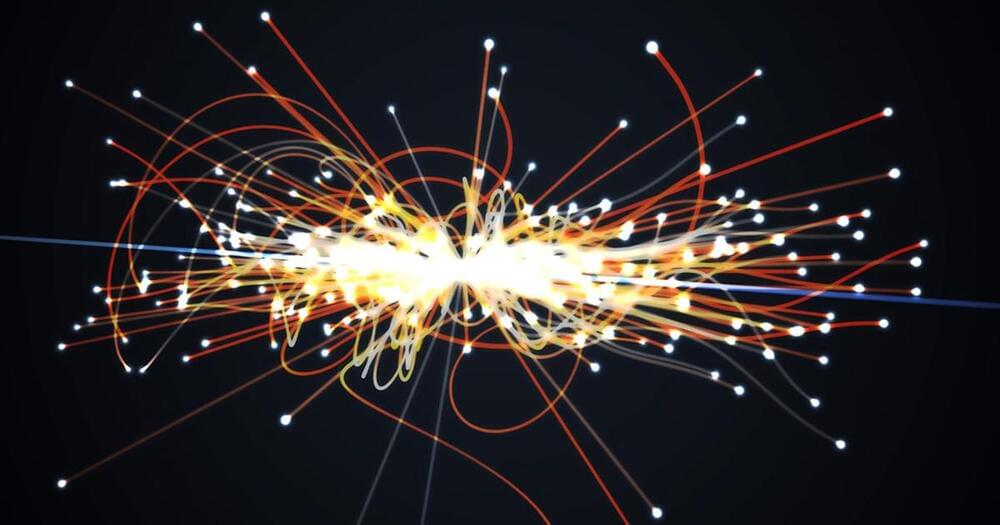

2:45 AI adopts the structure of the brain

3:28 Revealing cause-and-effect relationships

4:40 Miami Herald replaces a fired journalist with a bot

5:26 Nvidia unveils a neural network that creates animated 3D face models from voice

5:55 Sber presents a code generation model based on the ruGPT-3 neural network

6:50 The ruDALL-E multimodal neural network

7:16 The Christofari Neo supercomputer for neural network training

#prorobots #robots #robot #futuretechnologies #robotics

More interesting and useful content:

✅ Elon Musk Innovation https://www.youtube.com/playlist?list=PLcyYMmVvkTuQ-8LO6CwGWbSCpWI2jJqCQ

✅ Future Technologies Reviews https://www.youtube.com/playlist?list=PLcyYMmVvkTuTgL98RdT8-z-9a2CGeoBQF

✅ Technology news.