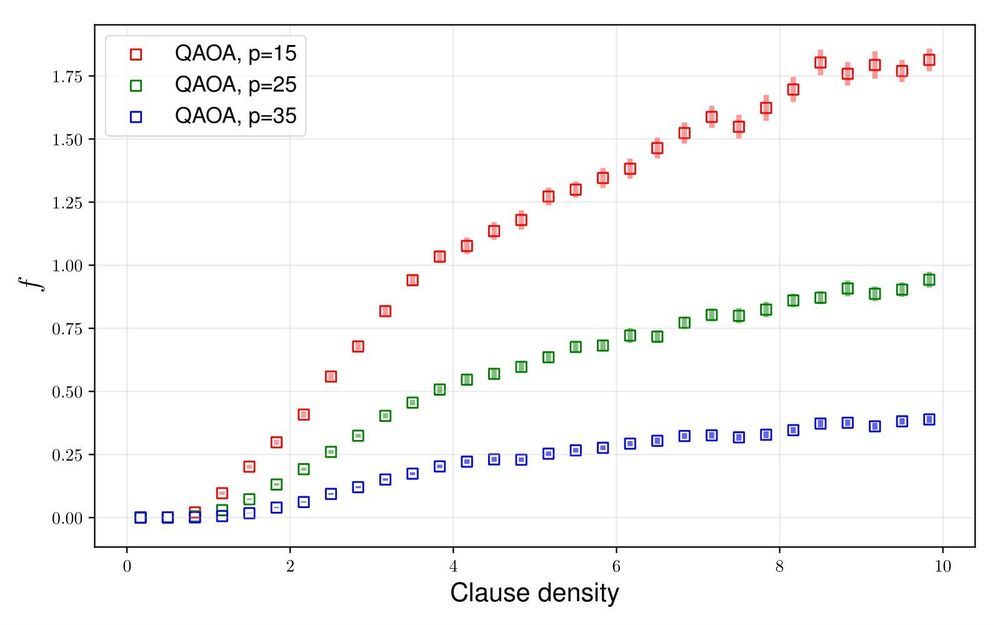

Clause density is new to me but seems interesting. As far as I know, Shor's algorithm is the main quantum algorithm that threatens today's widely used public-key encryption.

Google is racing to develop quantum-enhanced processors, which use quantum mechanical effects and could one day dramatically increase the speed at which data can be processed.

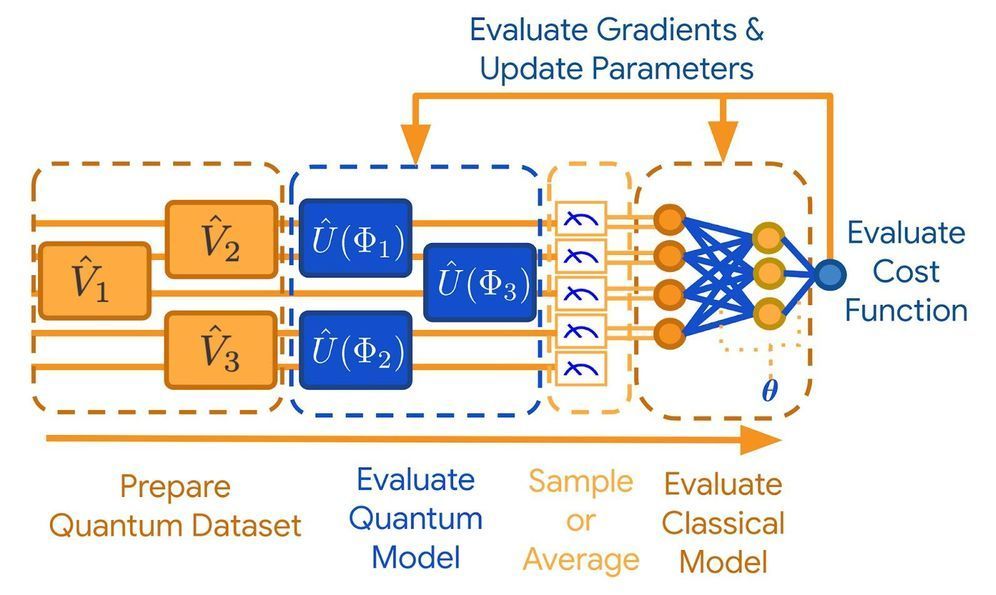

In the near term, Google has devised new quantum-enhanced algorithms that operate in the presence of realistic noise. The so-called quantum approximate optimization algorithm, or QAOA for short, is the cornerstone of a modern drive towards noise-tolerant quantum-enhanced algorithm development.

The celebrated approach taken by Google in QAOA has sparked vast commercial interest and spurred a global research community to explore novel applications. Yet little is known about the ultimate performance limitations of Google’s QAOA.