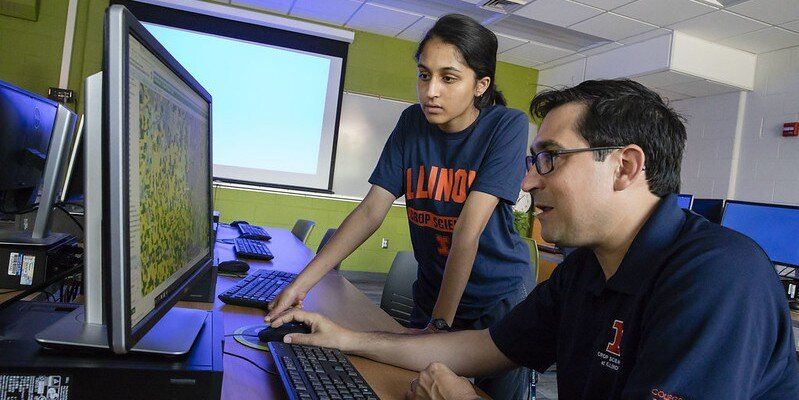

Autonomous systems under development across many domains and industries have grown markedly more sophisticated in recent years, due in large part to advances in computing, modeling, sensing, and other technologies. While the enabling technology has advanced rapidly, formal safety assurances for these systems still lag behind. This is largely due to their reliance on data-driven machine learning (ML) technologies, which are inherently unpredictable and lack the mathematical framework needed to provide guarantees of correctness. Without such assurances, trust in the safety and correct operation of any learning-enabled cyber-physical system (LE-CPS) is limited, impeding broad deployment and adoption for critical defense situations or capabilities.

To address this challenge, DARPA’s Assured Autonomy program is working to provide continual assurance of an LE-CPS’s safety and functional correctness, both at design time and during operation. The program is developing mathematically verifiable approaches and tools that can be applied to different types and applications of data-driven ML algorithms in these systems, both to enhance their autonomy and to assure that they achieve an acceptable level of safety. To help ground the research objectives, the program is prioritizing challenge problems in the defense-relevant autonomous vehicle space, specifically those involving air, land, and underwater platforms.

The first phase of the Assured Autonomy program recently concluded. To assess the technologies in development, research teams integrated them into a small number of autonomous demonstration systems and evaluated each against various defense-relevant challenges. After 18 months of research and development on the assurance methods, tools, and learning-enabled capabilities (LECs), the program is exhibiting early signs of progress.