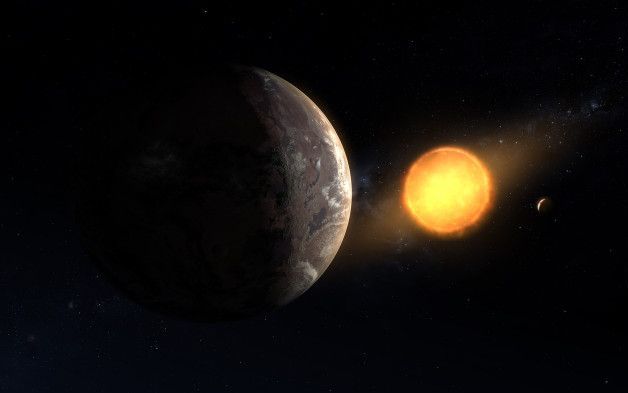

Researchers have discovered a new Earth-sized planet orbiting a star outside our solar system. The planet, called Kepler-1649c, is only about 1.06 times Earth's size, making it very similar to our own planet in physical dimensions. It also orbits at a distance where it receives about 75% of the light that Earth gets from the Sun.

The planet's host star is a red dwarf. Stars of this type are more prone than our Sun to flares, which could make it harder for life to have evolved on the planet's rocky surface. Kepler-1649c also orbits so close to its star that one year lasts just 19.5 Earth days. Because the star puts out significantly less heat than the Sun, however, that tight orbit places the planet in the region where liquid water could exist.

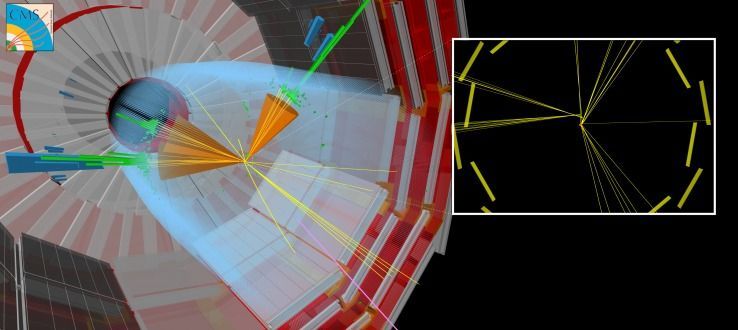

Kepler-1649c was found by scientists digging into existing observations gathered by the Kepler space telescope before it was retired in 2018. An algorithm developed to sift through the telescope's troves of data and flag potential planets for further study failed to correctly identify this one, but researchers spotted it while reviewing the data.