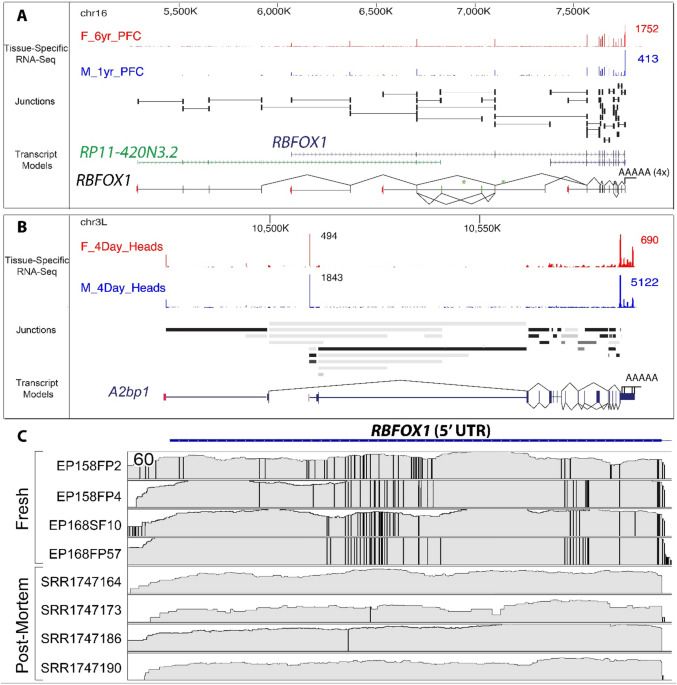

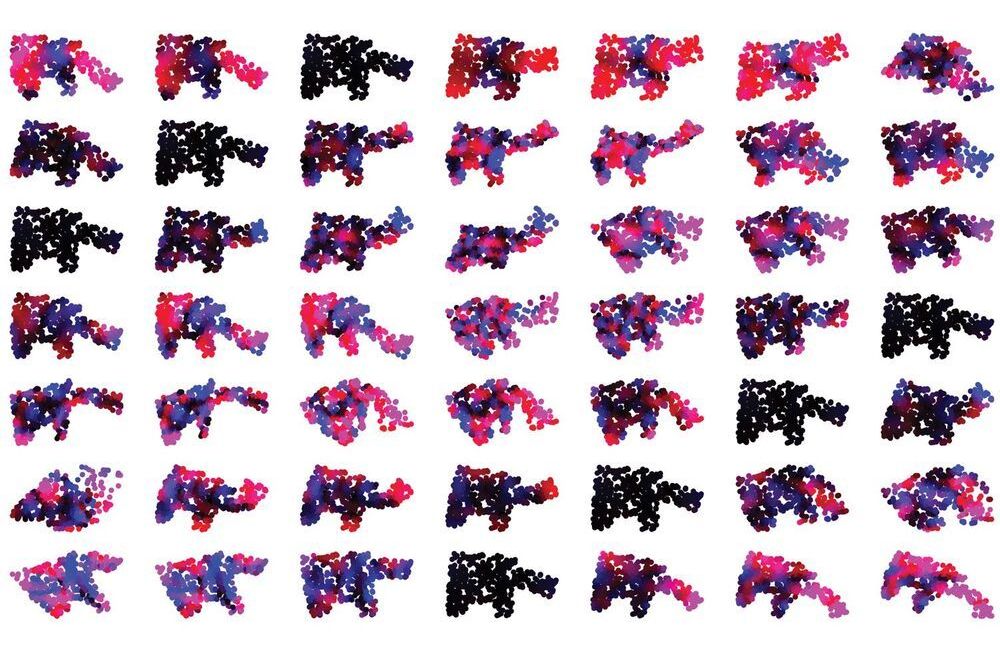

Because brain activity-dependent genes are of great importance in human neuropsychiatric disorders, we also compared the expression of these genes with postmortem RNAseq datasets from patients suffering from various neurological and psychiatric disorders (Table 1). Datasets were chosen based on similarities in tissue processing and RNAseq methodology to our own protocol. We performed a principal component analysis (PCA) comparing our fresh brain samples with postmortem brain samples from healthy, Parkinson’s, schizophrenia, Huntington’s, and autism cases, using the 500 brain activity-dependent genes that showed the greatest reduction in the healthy postmortem samples. The PCA revealed a significant separation between the 4 fresh samples and the postmortem samples, regardless of whether the fresh tissue came from epileptic (high activity, H) or non-epileptic (low activity, L) brain regions (Fig. 2J). This further demonstrates a selective reduction of activity-dependent genes in postmortem brain, independent of whether the underlying tissue is electrically active.
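The gene selection and projection described above can be illustrated with a minimal sketch. It assumes log-scale expression matrices (samples in rows, genes in columns) and uses NumPy's SVD to compute the principal components. The function names, the synthetic data, and the sample and gene counts other than the 4 fresh samples and the 500 selected genes are hypothetical and are not taken from the study's pipeline.

```python
import numpy as np

def top_reduced_genes(fresh, postmortem, n_top=500):
    """Indices of the genes with the largest mean drop (fresh minus postmortem).

    Both inputs are samples x genes arrays of log-scale expression.
    """
    drop = fresh.mean(axis=0) - postmortem.mean(axis=0)
    return np.argsort(drop)[::-1][:n_top]

def pca_scores(x, n_components=2):
    """Project samples (rows of x) onto the leading principal components."""
    centered = x - x.mean(axis=0)
    u, s, _ = np.linalg.svd(centered, full_matrices=False)
    return u[:, :n_components] * s[:n_components]

# Synthetic stand-in data (assumption): 4 fresh and 6 postmortem samples.
rng = np.random.default_rng(0)
n_genes = 2000
fresh = rng.normal(8.0, 0.5, size=(4, n_genes))
postmortem = rng.normal(8.0, 0.5, size=(6, n_genes))
postmortem[:, :300] -= 3.0  # hypothetical genes that are reduced postmortem

selected = top_reduced_genes(fresh, postmortem, n_top=500)
scores = pca_scores(np.vstack([fresh, postmortem])[:, selected])
pc1_fresh, pc1_post = scores[:4, 0], scores[4:, 0]
```

In this toy setting the fresh and postmortem samples fall on opposite sides of the first principal component. The sign of a component is arbitrary, so only the separation between the groups is meaningful, not which group scores higher.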

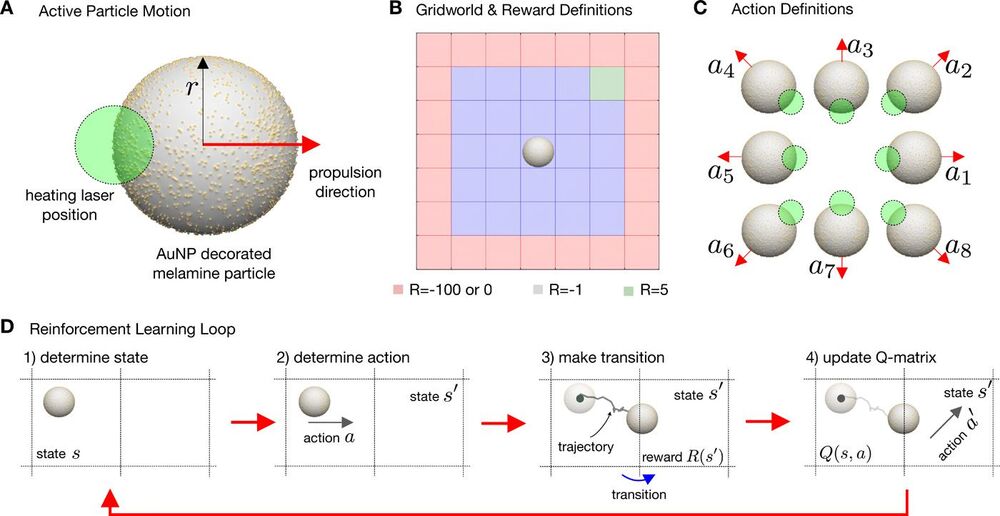

The sudden removal of brain tissue from a living person in many ways mimics the catastrophic event that occurs with a hypoxic brain injury or a traumatic death with exsanguination. The human brain has high energy needs, estimated to be 10 times those of other tissues [21]. To understand how the postmortem interval (PMI) selectively affects some genes and not others in human neocortex, we performed RNAseq and histological analyses of cortical brain tissue as a function of time from 0–24 h at 24 °C to simulate a postmortem interval. Neuropathological examination of the tissue used for this study showed a normal-appearing cortical pattern with no histopathologic abnormalities. RNAseq analysis showed a loss of brain activity-dependent genes, which were three times more likely to be degraded than expected by chance, in contrast to more stable housekeeping genes (Table 2). The expression threshold used to define activity-dependent genes was related to the probability of being affected by the PMI: the higher the relative expression of a brain activity gene, the more it was enriched in the population of genes affected by the PMI. These findings confirm that genes involved in brain activity are more prone to degradation during the PMI.
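To make the phrase "more likely than expected by chance" concrete, the sketch below computes a fold enrichment and a one-sided hypergeometric p-value for the overlap between two gene sets. This is one common way to quantify such an enrichment and is not necessarily the test used for Table 2. The function names and all gene counts in the example are hypothetical and were chosen only so that the fold enrichment equals 3.

```python
from math import comb

def fold_enrichment(n_total, n_activity, n_affected, n_overlap):
    """Observed overlap divided by the overlap expected by chance."""
    expected = n_activity * n_affected / n_total
    return n_overlap / expected

def hypergeom_upper_tail(n_total, n_activity, n_affected, n_overlap):
    """P(overlap >= n_overlap) if the affected genes were drawn at random."""
    upper = min(n_activity, n_affected)
    favorable = sum(
        comb(n_activity, k) * comb(n_total - n_activity, n_affected - k)
        for k in range(n_overlap, upper + 1)
    )
    return favorable / comb(n_total, n_affected)

# Hypothetical counts: 2000 expressed genes, 100 activity-dependent genes,
# 200 genes affected by the PMI, 30 genes in both sets.
fold = fold_enrichment(2000, 100, 200, 30)
p_value = hypergeom_upper_tail(2000, 100, 200, 30)
```

With these illustrative counts, 10 overlapping genes would be expected by chance, so an observed overlap of 30 corresponds to a threefold enrichment.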

One possible explanation for the selective loss of activity-dependent genes could relate to the stability of various cell populations during the simulated PMI. To implicate specific cell populations that could be responsible for the reduction of genes during the simulated PMI, we used a clustering algorithm as we have previously described [9]. We found that 1,427 genes (71% of which were known brain activity-dependent genes) could be clustered across the seven time points of the simulated PMI. Within this set, we used AllegroMCODE to identify two main clusters. One cluster of 317 rapidly declining genes was predicted to be neuronal and strongly overlapped with the activity-dependent genes. A second cluster of 474 genes was predicted to be glial, including astrocytes and microglia (Fig. 3A). Remarkably, as expression of the neuronal cell cluster rapidly fell, there was a reciprocal and dramatic increase in the expression of the glial cell cluster (Fig. 3B).
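The idea of grouping genes by their behavior across the seven time points can be sketched as follows. This is a simplified stand-in and not the AllegroMCODE procedure: genes are linked when their time courses have a Pearson correlation above a threshold, and each connected component of the resulting graph is treated as a cluster. The gene names, expression values, and the threshold of 0.9 are hypothetical.

```python
from math import sqrt

def pearson(x, y):
    """Pearson correlation between two equal-length, non-constant sequences."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)

def correlation_clusters(profiles, threshold=0.9):
    """Connected components of the graph linking genes with r >= threshold."""
    genes = list(profiles)
    neighbors = {g: set() for g in genes}
    for i, a in enumerate(genes):
        for b in genes[i + 1:]:
            if pearson(profiles[a], profiles[b]) >= threshold:
                neighbors[a].add(b)
                neighbors[b].add(a)
    clusters, seen = [], set()
    for g in genes:
        if g in seen:
            continue
        seen.add(g)
        stack, component = [g], []
        while stack:  # depth-first traversal of one component
            current = stack.pop()
            component.append(current)
            for nb in neighbors[current] - seen:
                seen.add(nb)
                stack.append(nb)
        clusters.append(sorted(component))
    return clusters

def mean_profile(names, profiles):
    """Average expression of the named genes at each time point."""
    return [sum(vals) / len(names) for vals in zip(*(profiles[n] for n in names))]

# Hypothetical expression values at seven consecutive time points.
profiles = {
    "neuronal_a": [10, 8, 6, 5, 4, 3, 2],
    "neuronal_b": [9, 7, 6, 4, 3, 3, 2],
    "glial_a": [2, 3, 4, 6, 7, 8, 9],
    "glial_b": [1, 2, 4, 5, 7, 9, 10],
}
clusters = correlation_clusters(profiles)
reciprocity = pearson(mean_profile(clusters[0], profiles),
                      mean_profile(clusters[1], profiles))
```

In this toy example the declining and rising genes form two separate clusters, and the strongly negative correlation between the cluster means corresponds to the reciprocal pattern described above.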