What if we could find one single equation that explains every force in the universe? Professor Michio Kaku explores how physics could potentially shrink the science of the big bang into an equation as small as E=mc².

AI is good for internal back-office and some limited front-office activities; however, we still need to see more adoption of QC in the Net and in company infrastructure before companies expose their services and information to the public Net and infrastructure.

Deep learning, as explained by tech journalist Michael Copeland on Blogs.nvidia.com, is the newest and most powerful computational development thus far. It combines all prior research in artificial intelligence (AI) and machine learning. At its most fundamental level, Copeland explains, deep learning uses algorithms to peruse massive amounts of data, and then learn from that data to make decisions or predictions. The Defense Advanced Research Projects Agency (DARPA), as Wired reports, calls this method “probabilistic programming.”

Mimicking the human brain’s billions of neural connections by creating artificial neural networks was thought to be the path to AI in the early days, but it was too “computationally intensive.” It was the invention of Nvidia’s powerful graphics processing unit (GPU) that allowed Andrew Ng, a scientist at Google, to create algorithms by “building massive artificial neural networks” loosely inspired by connections in the human brain. This was the breakthrough that changed everything. Now, according to Thenextweb.com, Google’s DeepMind platform has been shown to teach itself, without any human input.

In fact, earlier this year an AI named AlphaGo, developed by Google’s DeepMind division, beat Lee Sedol, a world master of the 3,000-year-old Chinese game Go, described as the most complex game known to exist. AlphaGo’s creators and followers now say this deep learning AI proves that machines can learn and may possibly demonstrate intuition. This AI victory has changed our world forever.

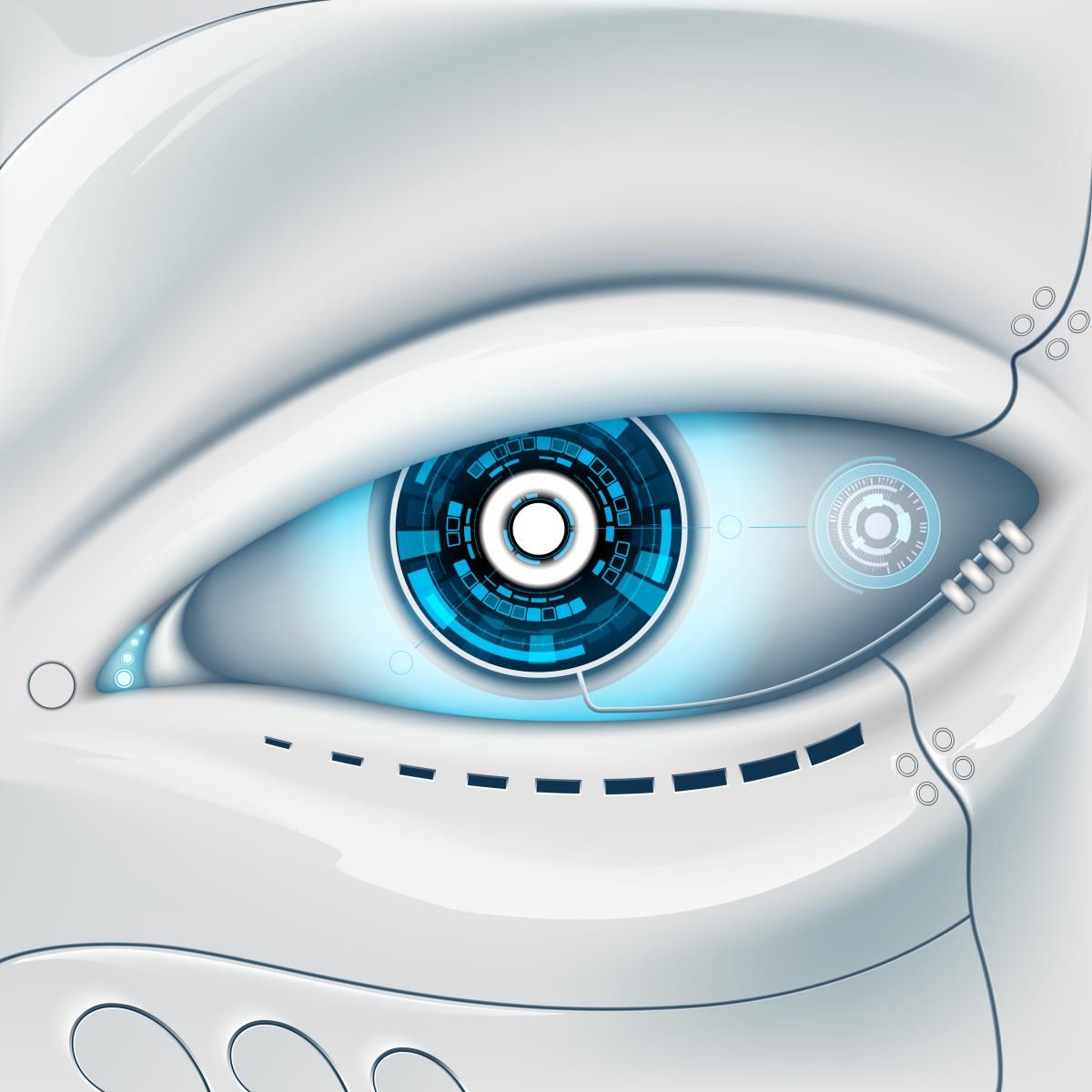

We’re supposed to be building robots and AI for the good of humankind, but scientists at MIT have pretty much been doing the opposite — they’ve built a new kind of AI with the sole purpose of generating the most frightening images ever.

Just in time for Halloween, the aptly named Nightmare Machine uses an algorithm that ‘learns’ what humans find scary, sinister, or just downright unnerving, and generates images based on what it thinks will freak us out the most.

“There have been a rising number of intellectuals, including Elon Musk and Stephen Hawking, raising alarms about the potential threat of superintelligent AI on humanity,” one of the team, Pinar Yanardag Delul, told Digital Trends.

Story just in time for Halloween.

The prospect of artificial intelligence is scary enough for some, but Manuel Cebrian Ramos at CSIRO’s Data61 is teaching machines how to terrify humans on purpose.

Dr Cebrian and his colleagues Pinar Yanardag and Iyad Rahwan at the Massachusetts Institute of Technology have developed the Nightmare Machine.

This is an artificial intelligence algorithm that is teaching a new generation of computers not only what terrifies human beings, but also how to create new images to scare us.

Fortifying cybersecurity is on everyone’s mind after last week’s massive DDoS attack. However, it’s not an easy task, as hackers evolve as quickly as security does. What if your machine could learn how to protect itself from prying eyes? Researchers from Google Brain, Google’s deep learning project, have shown that neural networks can learn to create their own form of encryption.

According to a research paper, Martín Abadi and David Andersen assigned Google’s AI to work out how to use a simple encryption technique. Using machine learning, the machines could create their own form of encrypted message, though they didn’t learn specific cryptographic algorithms. Compared to current human-designed systems, the result was pretty basic, but it is an interesting step for neural networks.

To find out whether artificial intelligence could learn to encrypt on its own, the Google Brain team built an encryption game with three entities, Alice, Bob and Eve, each powered by a deep learning neural network. Alice’s task was to send an encrypted message to Bob, Bob’s task was to decode that message, and Eve’s job was to eavesdrop and work out how to decode Alice’s message herself.
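The three roles in the game can be illustrated with a toy sketch. It does not use neural networks at all: a plain XOR cipher stands in for whatever the networks learned, and the shared key between Alice and Bob is an assumption made for this illustration. The function names and the bit-error score are hypothetical, chosen only to show why Bob can recover the message while an eavesdropper without the key cannot do so reliably.

```python
import secrets

def xor_bits(bits, key):
    """XOR each message bit with the matching key bit."""
    return [b ^ k for b, k in zip(bits, key)]

def bit_errors(guess, truth):
    """Count positions where a reconstruction differs from the original."""
    return sum(g != t for g, t in zip(guess, truth))

n = 16
message = [secrets.randbelow(2) for _ in range(n)]
key = [secrets.randbelow(2) for _ in range(n)]  # assumed: known to Alice and Bob only

ciphertext = xor_bits(message, key)   # Alice encrypts
bob_plain = xor_bits(ciphertext, key) # Bob decrypts with the shared key
eve_guess = list(ciphertext)          # Eve has no key; here she simply reads the ciphertext

print("Bob errors:", bit_errors(bob_plain, message))
print("Eve errors:", bit_errors(eve_guess, message))
```

Bob's error count is always zero, while Eve's equals the number of 1 bits in the key, which averages half the message length for a random key.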

Anyone who does not have QC as part of their 5+ year IT roadmap is truly exposing their company as well as its shareholders and customers. China, Russia, cartels, the DarkNet, etc. will use the technology to extort victims and destroy companies, economies, and entire countries where folks have not planned, budgeted, skilled up, and prepared for full replacement of their infrastructure and Net access. Not to mention, companies that have this infrastructure will provide better services/CCE to service consumers.

In a recent article, we highlighted a smart beta ETF called the “Sprott BUZZ Social Media Insights ETF” that uses artificial intelligence (AI) to select and weight stocks. If we stop and think about that for a moment, that’s a pretty cool use of AI that seems well ahead of its time. Now, we’re not saying that you should go out and buy this smart beta ETF right away. It uses social media data. We know that on social media, everyone’s an expert, and many of the opinions that are stated are just that, opinions. However, some of the signals may be legitimate. Someone who just bought Apple is likely to go on telling everyone how bullish they are on Apple shares. Bullish behavior is often accompanied by bullish rhetoric. And maybe that’s exactly the point, but the extent to which we’re actually using artificial intelligence here is not that meaningful. Simple scripting tools go out and scrape all this public data, and then we use natural language processing (NLP) algorithms to determine if the data artifacts have a positive or negative sentiment. That’s not that intelligent, is it? This made us start to think about what it would take to create a truly “intelligent” smart beta ETF.
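To show how simple the sentiment step can be, here is a minimal sketch of a word-list scorer. It is not how the ETF works; the word lists, the `sentiment` function, and the sample posts are all hypothetical, and real NLP pipelines are considerably more involved.

```python
import re

# Hypothetical word lists, for illustration only.
POSITIVE = {"bullish", "buy", "bought", "great", "up"}
NEGATIVE = {"bearish", "sell", "sold", "bad", "down"}

def sentiment(post):
    """Score a post as (positive word count) minus (negative word count)."""
    words = re.findall(r"[a-z']+", post.lower())
    return sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)

# Made-up posts standing in for scraped social media data.
posts = [
    "Just bought Apple, very bullish!",
    "Sold everything, feeling bearish.",
]
scores = [sentiment(p) for p in posts]
print(scores)  # [2, -2]
```

A score above zero reads as positive sentiment and below zero as negative, which is roughly the level of "intelligence" the paragraph above is questioning.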

What is Smart Beta?

We have talked before about how people who work in finance love to obfuscate the simplicity of what they do with obscure acronyms and terminology. Complex nomenclature is suited for sophisticated scientific domains like synthetic biology or quantum computing but such language is hardly merited for use in the world of finance. We told you before what beta is. Smart beta is just another way of saying “rules based investing” which has in fact been around for centuries, but of course we act like it’s new and start publishing all kinds of research papers on it. In fact, a poll offered up by S&P Capital IQ shows that even 1 out of 4 finance professionals recognizes the term “smart beta” to be little more than a marketing gimmick.

Quantum theory is strange and counterintuitive, but it’s very precise. Lots of analogies and broad concepts are presented in popular science trying to give an accurate description of quantum behavior, but if you really want to understand how quantum theory (or any other theory) works, you need to look at the mathematical details. It’s only the mathematics that shows us what’s truly going on.

Mathematically, a quantum object is described by a function of complex numbers governed by the Schrödinger equation. This function is known as the wavefunction, and it allows you to determine quantum behavior. The wavefunction represents the state of the system, which tells you the probability of various outcomes of a particular experiment (observation). To find the probability, you simply multiply the wavefunction by its complex conjugate. This is how quantum objects can have wavelike properties (the wavefunction) and particle properties (the probable outcome).
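The "multiply by the complex conjugate" step can be sketched in a few lines. This assumes a simple discrete system described by a short list of complex amplitudes rather than a continuous wavefunction; the state `psi` and the helper `probabilities` are made up for illustration.

```python
import cmath
import math

def probabilities(amplitudes):
    """Return the probability of each outcome from complex amplitudes."""
    # psi * conj(psi) is real and non-negative; .real drops the zero imaginary part.
    weights = [(a * a.conjugate()).real for a in amplitudes]
    total = sum(weights)  # normalize so the probabilities sum to 1
    return [w / total for w in weights]

# Hypothetical two-outcome state: equal magnitudes, different phases.
psi = [
    complex(1 / math.sqrt(2), 0),
    cmath.exp(1j * math.pi / 3) / math.sqrt(2),
]
probs = probabilities(psi)
print(probs)
```

Both outcomes come out equally likely here even though the amplitudes differ in phase: the phase carries the wavelike information, while the product with the conjugate gives the observable probability.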

No, wait. Actually a quantum object is described by a mathematical quantity known as a matrix. As Werner Heisenberg showed, each type of quantity you could observe (position, momentum, energy) is represented by a matrix as well (known as an operator). By multiplying the operator and the quantum state matrix in a particular way, you get the probability of a particular outcome. The wavelike behavior is a result of the multiple connections between states within the matrix.
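One common way to read "multiplying the operator and the quantum state matrix in a particular way" is to take the trace of their product, with the state written as a density matrix. That reading is an assumption about what the passage intends, and the two-level state below is invented for illustration.

```python
def matmul(a, b):
    """Multiply two square matrices given as nested lists."""
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]

def trace(m):
    """Sum the diagonal entries of a square matrix."""
    return sum(m[i][i] for i in range(len(m)))

def density_matrix(state):
    """Build the state matrix from a normalized vector of amplitudes."""
    return [[x * y.conjugate() for y in state] for x in state]

# Hypothetical two-level state with amplitudes 0.6 and 0.8i.
state = [complex(0.6, 0), complex(0, 0.8)]
rho = density_matrix(state)

# Operators that pick out the first and second basis outcomes.
projector_0 = [[1, 0], [0, 0]]
projector_1 = [[0, 0], [0, 1]]

p0 = trace(matmul(rho, projector_0)).real
p1 = trace(matmul(rho, projector_1)).real
print(p0, p1)
```

The off-diagonal entries of `rho` are the "connections between states" in this picture. They do not affect these two probabilities, but they do matter for measurements in other bases, which is where interference shows up.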

NEWS ANALYSIS: The confluence of big data, massively powerful computing resources and advanced algorithms is bringing new artificial intelligence capabilities to scientific research.

WASHINGTON, DC—Massively parallel supercomputing hardware along with advanced artificial intelligence algorithms are being harnessed to deliver powerful new research tools in science and medicine, according to Dr. France A. Córdova, Director of the National Science Foundation.

Córdova spoke Oct. 26 at the GPU Technology Conference organized by Nvidia, a company that got its start making video cards for PCs and gaming systems and now manufactures advanced graphics processors for high-performance servers and supercomputers.

An artificial intelligence method developed by University College London computer scientists and associates has predicted the judicial decisions of the European Court of Human Rights (ECtHR) with 79% accuracy, according to a paper published today (Monday, Oct. 24) in PeerJ Computer Science.

The method is the first to predict the outcomes of a major international court by automatically analyzing case text using a machine-learning algorithm.