Dear Ray,

I’ve written a book about the future of software. While writing it, I came to the conclusion that your dates are way off. I talk mostly about free software and Linux, but it has implications for things like how we can have driverless cars and other amazing things faster. I believe that we could have had all the benefits of the singularity years ago if we had done things like started Wikipedia in 1991 instead of 2001. There was no technology in 2001 that we didn’t have in 1991; it was simply a matter of starting an effort that allowed people to work together.

Proprietary software and a lack of cooperation among our software scientists have been terrible for the computer industry and the world, and greater use of free software has implications for every aspect of science. Free software is better for the free market than proprietary software, and there are many opportunities for programmers to make money using and writing free software. I often use the analogy that law libraries are filled with millions of freely available documents, and no one claims this has decreased the motivation to become a lawyer. In fact, lawyers would say that it would be impossible to do their job without all of these resources.

My book is a full description of the issues but I’ve also written some posts on this blog, and this is probably the one most relevant for you to read: https://lifeboat.com/blog/2010/06/h-conference-and-faster-singularity

Once you understand this, you can apply your fame towards getting more people to use free software and Python. The reason so many know Linus Torvalds’s name is that he released his code under the GPL, a license whose viral nature encourages people to work together. Proprietary software makes as much sense as a proprietary Wikipedia.

I would be happy to discuss any of this further.

Regards,

-Keith

—————–

Response from Ray Kurzweil 11/3/2010:

I agree with you that open source software is a vital part of our world allowing everyone to contribute. Ultimately software will provide everything we need when we can turn software entities into physical products with desktop nanofactories (there is already a vibrant 3D printer industry and the scale of key features is shrinking by a factor of a hundred in 3D volume each decade). It will also provide the keys to health and greatly extended longevity as we reprogram the outdated software of life. I believe we will achieve the original goals of communism (“from each according to their ability, to each according to their need”) which forced collectivism failed so miserably to achieve. We will do this through a combination of the open source movement and the law of accelerating returns (which states that the price-performance and capacity of all information technologies grow exponentially over time). But proprietary software has an important role to play as well. Why do you think it persists? If open source forms of information met all of our needs, why would people still purchase proprietary forms of information? There is open source music but people still download music from iTunes, and so on. Ultimately the economy will be dominated by forms of information that have value and these two sources of information – open source and proprietary – will coexist.

———

Response back from Keith:

Free versus proprietary isn’t a question about whether only certain things have value. A Linux DVD has 10 billion dollars’ worth of software. Proprietary software exists for much the same reason that ignorance and starvation exist: a lack of better systems. The best thing my former employer Microsoft has going for it is ignorance about the benefits of free software. Free software gets better only as more people use it. Proprietary software is an inferior development model and anathema to science because it hinders people’s ability to work together. It has infected many corporations, and I’ve found that PhDs who work for public institutions often write proprietary software.

Here is a passage from my writings:

I start the AI chapter of my book with the following question: Imagine 1,000 people, broken up into groups of five, working on two hundred separate encyclopedias, versus that same number of people working on one encyclopedia. Which will be better? This sounds like a silly analogy when described in the context of an encyclopedia, but it is exactly what is going on in artificial intelligence (AI) research today.

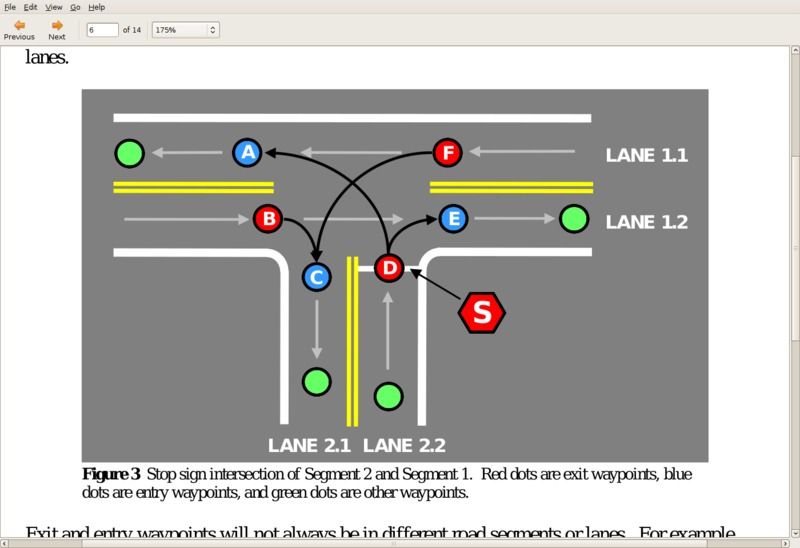

Today, the research community has not adopted free software and shared codebases sufficiently. For example, I believe there are more than enough PhDs today working on computer vision, but there are 200+ different codebases plus countless proprietary ones. Simply put, there is no computer vision codebase with critical mass.

We’ve known approximately what a neural network should look like for many decades. We need “places” for people to work together to hash out the details. A free software repository provides such a place. We need free software, and for people to work in “official” free software repositories.

I have found that “open source forms of information” is a separate topic from the software issue. Software always reads, modifies, and writes data (state that lives beyond the execution of the software), and there can be an interesting discussion about the licenses of the data. But movies and music aren’t science, so it doesn’t matter for most of them. Someone can only sell or give away a song after the software is written and on their computer in the first place. Some of this content can be free and some can be protected, and this is an interesting question, but mostly this is a separate topic. The important thing to share is scientific knowledge and software.

It is true that software always needs data to be useful: configuration parameters, test files, documentation, etc. A computer vision engine will have lots of data, even though most of it is used only for testing purposes and little used at runtime. (Perhaps it has learned the letters of the alphabet, state which it caches between executions.) Software begets data, and data begets software; people write code to analyze the Wikipedia corpus. But you can’t truly have a discussion of sharing information unless you’ve got a shared codebase in the first place.

I agree that proprietary software is and should be allowed in a free market. If someone wants to sell something useful that another person finds value in and wants to pay for, I have no problem with that. But free software is a better development model and we should be encouraging / demanding it. I’ll end with a quote from Linus Torvalds:

Science may take a few hundred years to figure out how the world works, but it does actually get there, exactly because people can build on each others’ knowledge, and it evolves over time. In contrast, witchcraft/alchemy may be about smart people, but the knowledge body never “accumulates” anywhere. It might be passed down to an apprentice, but the hiding of information basically means that it can never really become any better than what a single person/company can understand.

And that’s exactly the same issue with open source (free) vs proprietary products. The proprietary people can design something that is smart, but it eventually becomes too complicated for a single entity (even a large company) to really understand and drive, and the company politics and the goals of that company will always limit it.

The world is screwed because while we have things like Wikipedia and Linux, we don’t have places for computer vision and lots of other scientific knowledge to accumulate. To get driverless cars, we don’t need any more hardware, we don’t need any more programmers, we just need 100 scientists to work together in SciPy and GPL ASAP!

Regards,

-Keith
