Because of the election cycle, the United States Congress and Presidency tend to be short-sighted. It is therefore a welcome relief when an organization such as the U.S. National Intelligence Council gathers many smart people from around the world to think seriously about the future more than a decade ahead. But while the authors of the NIC report Global Trends 2025: A Transformed World[1] understood the political situations of countries around the world extremely well, their report lacked two things:

1. Sufficient knowledge about technology (especially productive nanosystems) and its second-order effects.

2. A clear and specific understanding of Islam and the fundamental cause of its problems. More generally, an understanding of the relationship between its theology, technological progress, and cultural success.

These two gaps need to be filled, and this white paper attempts to do so.

Technology

Christine Peterson, the co-founder and vice-president of the Foresight Nanotech Institute, has said “If you’re looking ahead long-term, and what you see looks like science fiction, it might be wrong. But if it doesn’t look like science fiction, it’s definitely wrong.” None of the predictions in Global Trends 2025 look like science fiction, though perhaps 15 years from now is not long-term (on the other hand, 15 years is not short-term either).

The authors of Global Trends 2025 are wise in the same way that Socrates was wise: they admit that they may not know enough about technology, writing, “Many stress the role of technology in bringing about radical change and there is no question it has been a major driver. We—as others—have oftentimes underestimated its impact” (p. 5).

Predicting the development and total impact of technology more than a few years into the future is exceedingly difficult. For example, of all the science fiction writers who correctly predicted a landing on the Moon, only one obscure writer predicted that it would be televised world-wide. Nobody would have believed, much less predicted, that we wouldn’t return for more than 40 years (and counting).

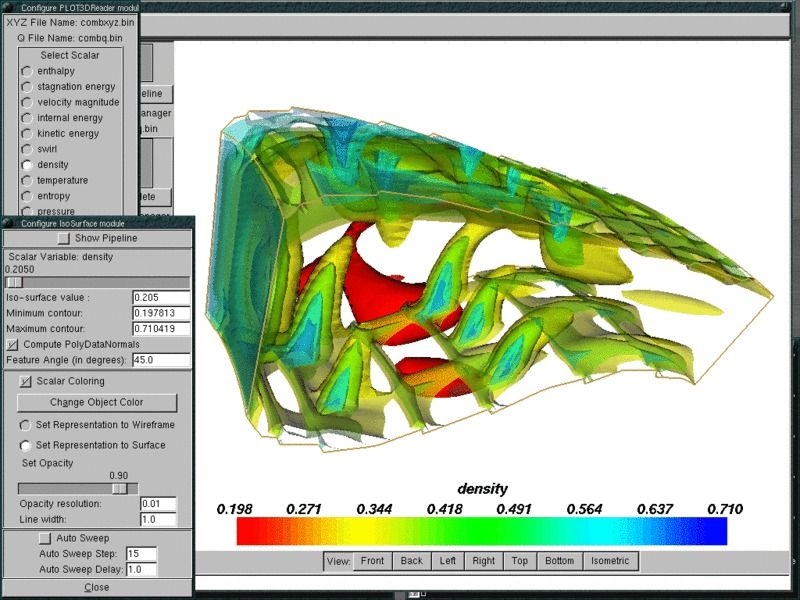

Other than orbital mechanics and demographics, there has been nothing more certain in the past two centuries than technological progress.[2] So it is perplexing that the report claims (correctly) that “[t]he pace of technology will be key [in providing solutions to energy, food, and water constraints],” (p. iv) but it then does not adequately examine the solutions pouring out of labs all over the world. To the authors’ credit, they foresaw that nanofibers and nanoparticles will increase the supply of clean water. In addition, they foresaw that nuclear bombs and bioweapons will become easier to manufacture. However, the static nanostructures they briefly discuss are only the first of four phases of nanotechnology maturation—they will be followed by active nanodevices, then nanomachines, and finally productive nanosystems. Ignoring this maturation of nanotechnology will lead to significant under-estimates of future capabilities.

If the pace of technological development is key, then on what factors does it depend?

The value of history is that it helps us predict the future. We should therefore consider the following questions while looking backwards as far as we wish to look forward:

Where were thumb drives 15 years ago? My $20, 8 GB thumb drive would then have cost $20,000, and it certainly wouldn’t have fit on my keychain. How powerful will my cell phone be 15 years from now? What are the secondary impacts of throwaway supercomputers?
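The thumb-drive comparison can be turned into a rough rate. The following is a minimal sketch, assuming the price fell at a constant exponential rate; the helper names are hypothetical, not from any source. A thousand-fold drop from $20,000 to $20 over 15 years implies a price halving about every 18 months, the same pace usually quoted for Moore’s Law. Projecting that pace forward gives a rough answer to the cell phone question, under the strong assumption that the trend simply continues.

```python
import math


def halving_time(old_price: float, new_price: float, years: float) -> float:
    """Years per halving of price, assuming a constant exponential decline."""
    halvings = math.log2(old_price / new_price)
    return years / halvings


def growth_factor(years: float, doubling_time: float) -> float:
    """Multiplicative improvement after `years` at a fixed doubling time."""
    return 2 ** (years / doubling_time)


# Figures from the text: an 8 GB drive at $20,000 fifteen years ago vs. $20 now.
t_half = halving_time(20_000, 20, 15)
print(f"implied halving time: {t_half:.2f} years")  # implied halving time: 1.51 years

# Assumption: the same pace continues for another 15 years.
print(f"15-year improvement: x{growth_factor(15, t_half):,.0f}")  # 15-year improvement: x1,000
```

At exactly 18 months per doubling, `growth_factor(15, 1.5)` gives 2^10 = 1024, so the two framings agree to within rounding.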

In 1995 the Internet had six million hosts. There are now over 567 million hosts and 1.4 billion users. If this growth continues, in 15 years there could be a trillion users, most of them automated machines, and many of them mobile.
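Whether growth like this is linear or exponential matters a great deal for extrapolation. The sketch below compares the two using the host counts from the text. The 14-year span is an assumption (taking “now” to be roughly 2009), the helper names are hypothetical, and the projection covers hosts, not users.

```python
import math

HOSTS_1995 = 6e6      # from the text
HOSTS_NOW = 567e6     # from the text
YEARS_ELAPSED = 14    # assumption: "now" is roughly 2009
HORIZON = 15          # years to project forward


def doubling_time(start: float, end: float, years: float) -> float:
    """Doubling time implied by constant exponential growth from start to end."""
    return years / math.log2(end / start)


def linear_projection(start: float, end: float, years: float, horizon: float) -> float:
    """Extend the straight line through the two observations."""
    slope = (end - start) / years
    return end + slope * horizon


def exponential_projection(start: float, end: float, years: float, horizon: float) -> float:
    """Extend the constant annual growth factor implied by the two observations."""
    annual_factor = (end / start) ** (1 / years)
    return end * annual_factor ** horizon


linear = linear_projection(HOSTS_1995, HOSTS_NOW, YEARS_ELAPSED, HORIZON)
exponential = exponential_projection(HOSTS_1995, HOSTS_NOW, YEARS_ELAPSED, HORIZON)
print(f"doubling time: {doubling_time(HOSTS_1995, HOSTS_NOW, YEARS_ELAPSED):.1f} years")  # 2.1 years
print(f"linear projection: {linear / 1e9:.1f} billion hosts")  # 1.2 billion hosts
print(f"exponential projection: {exponential / 1e9:.0f} billion hosts")  # 74 billion hosts
```

With these inputs, the straight line adds only about another 600 million hosts in 15 years, while the exponential curve multiplies the host count by more than a hundred.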

In 1995 there were over 10 million cell phone users in the USA; now there are around 250 million. Globally, the explosion was significantly larger, with over 2.4 billion current cell phone users. What will be the effect of continued growth in smart, mobile interconnectedness?

The World Wide Web reached the general public in 1993 with the release of the Mosaic browser. Where was Google in 1995? Still three years in the future. What else can we have besides the world’s information at our fingertips?

The problem with using recent history to guide predictions about the future is that the pace of technological development is not linear but exponential—and exponential growth is often surprising: recall the pedagogical examples of the doubling grains of rice (from India[3] and China[4]) or lily pads on the pond (from France[5]). In exponential growth, the early portion of the curve is fairly flat, while the latter portion is very steep.
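Both teaching examples can be checked directly. This is a minimal sketch: the rice legend is modeled in its chessboard form (one grain on the first square, doubling on each of 64 squares), and the lily pond assumes coverage doubles daily and is complete on day 30. The function names are illustrative.

```python
def grains_on_square(n: int) -> int:
    """Grains on square n (1-indexed) when the count doubles on each square."""
    return 2 ** (n - 1)


def total_grains(squares: int = 64) -> int:
    """Total grains on the first `squares` squares (a geometric series)."""
    return sum(grains_on_square(n) for n in range(1, squares + 1))


def pond_coverage(day: int, full_day: int = 30) -> float:
    """Fraction of the pond covered on `day` if coverage doubles daily."""
    return 2.0 ** (day - full_day)


print(total_grains())         # 18446744073709551615, i.e. 2**64 - 1
print(total_grains(32))       # 4294967295: the whole first half of the board
print(grains_on_square(33))   # 4294967296: one square in the second half beats it

for day in (20, 25, 29, 30):
    print(day, pond_coverage(day))  # 0.0009765625, 0.03125, 0.5, 1.0
```

Five days before the pond is full, less than a thirtieth of it is covered. That is the “flat, then steep” shape of the curve.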

Therefore, to predict technological development accurately, we should probably look back more than 15 years; perhaps we should be looking back 150 years. Exactly how far back we should look is difficult to determine, because some metrics have not changed at all despite technological advances. For example, the speed limit is still 65 MPH, and there are no flying cars commercially available. On the other hand, cross-country airline flights are still the same price they were thirty years ago, despite inflation. Moore’s Law of electronics has had a doubling time of about 18 months, but some technologies have grown much more slowly. Others, such as molecular biology, have progressed significantly faster.

More important are qualitative changes, which are difficult to quantify. For example, the telephone has a measurable bit rate greater than that of the telegraph, but the added understanding conveyed by the emotion in people’s voices is worth far more than the bit rate alone suggests. Similarly, search engines have qualitatively increased the value of the Internet’s TCP/IP data communication capabilities. Some innovators have pushed Web 2.0 in different directions, but it is not clear what its qualitative benefits might be, other than better-defined relationships between pieces of data. What happens with Web 3.0? Cloud computing? How many generations of innovation will it take to get to wisdom, or distributed sentience? It may be interesting to speculate about these matters, but because they often involve new science (or even new metaphysics), it is not possible to predict such events with any accuracy.

Inventor and author Ray Kurzweil has made a living out of correctly timing his inventions. Among other things, he correctly predicted the growth of the Internet when it was still in its infancy. His method is simple: he plots data on a logarithmic graph, and if he gets a straight line, he has found something that grows exponentially. His critics claim that his data is cherry-picked, but his examples span too many different technologies for cherry-picking to explain them all. The important point is why Kurzweil’s “law of accelerating returns” works, and what its limits are: it applies to technologies for which information is an essential component. Information is not bound by many of the physical constraints on matter (it has no mass, and negligible energy and copying costs). This partially explains Moore’s Law in electronics, as well as the exponential progress in molecular biology that began once we understood enough of its informational basis.
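The log-plot test described above can be sketched in a few lines. This is an illustration under assumptions, not Kurzweil’s own procedure. It fits a least-squares line to log2 of the data and reports two values: the implied doubling time, and the R² of the fit, which measures how straight the line is. The two series below are synthetic, generated for the example, and are not real measurements.

```python
import math


def fit_log_line(ts: list[float], ys: list[float]) -> tuple[float, float]:
    """Least-squares fit of log2(y) = a + b*t.

    Returns (doubling_time, r_squared). A straight line on a log plot
    (r_squared close to 1) is the signature of exponential growth; the
    slope b is doublings per unit time, so 1/b is the doubling time.
    """
    logs = [math.log2(y) for y in ys]
    n = len(ts)
    mean_t = sum(ts) / n
    mean_l = sum(logs) / n
    sxx = sum((t - mean_t) ** 2 for t in ts)
    sxy = sum((t - mean_t) * (l - mean_l) for t, l in zip(ts, logs))
    slope = sxy / sxx
    ss_res = sum((l - (mean_l + slope * (t - mean_t))) ** 2 for t, l in zip(ts, logs))
    ss_tot = sum((l - mean_l) ** 2 for l in logs)
    return 1 / slope, 1 - ss_res / ss_tot


# Synthetic series (not real data): one doubles every 1.5 years, one grows linearly.
years = list(range(16))
exponential = [100 * 2 ** (t / 1.5) for t in years]
linear = [100 + 50 * t for t in years]

print(fit_log_line(years, exponential))  # doubling time ~1.5, r_squared ~1.0
print(fit_log_line(years, linear))       # noticeably lower r_squared: curved on a log plot
```

A negative doubling time from this fit would indicate a halving time instead, as with falling prices. Real data would also need a judgment about how close to 1 the R² must be, which is exactly where the cherry-picking debate lives.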

Technology Breakthroughs

The “Technology Breakthroughs by 2025” foldout matrix in the NIC report (pp. 47–49) is a good start on addressing the impact of technology, but barely a start. It is woefully conservative: some of the items listed in the report have already been demonstrated in labs. For example, “Energy Storage” (in terms of batteries) has already been improved in the lab, where silicon nanowire anodes offer roughly ten times the charge capacity of conventional graphite anodes.[6] (Caveat: the authors correctly point out that there is a delay between invention and wide adoption, usually about a decade for non-information-based products, but 2019 is still considerably before 2025.) Hardly any other nanotech-enhanced products were examined, and they should have been.[7]

The ten specific technologies represented, and their drivers, barriers, and impact were well considered, but there were no clear criteria for picking these ten technologies. The report should have made clear that the most important technologies are those that can destroy or reboot the world’s economy or ecosystem. Almost as important are technologies that have profound effects on government, education, transportation, and family life. Past examples of such technologies include the nuclear bomb, the automobile, the telephone, the birth control pill, the personal computer, the internet, and search engines.

Although there were no clear criteria for choosing critical technologies, the report correctly included the world-changing technologies of ubiquitous computing, clean water, energy storage, biogerontechnology (life extension/age amelioration), and service robotics.

The inclusion of clean coal and biofuels is understandable given a linear projection of current trends. However, trends are not always linear, especially in information-dependent fields. Coal-based power generation relies on heat engines whose efficiency is bounded by the well-understood Carnot limit, and mature coal plants already operate close to their practical maximum. Therefore, new knowledge about coal or heat engines will not help us in any significant way, especially since no new coal is being made. In contrast, photovoltaic solar power is currently expensive, inefficient, and underused. This is partially because we lack a detailed understanding of the physics of photon capture and electron transfer, and partially because we cannot yet control the nanostructures that perform those operations. As we develop more powerful scientific tools at the nanoscale, and as our nanomanufacturing capabilities grow, the price of solar power will drop significantly. This is why global solar power capacity has grown exponentially (with a two-year doubling time) for the past decade or so. It also means that in the next five years, we will likely reach a point at which it will be obvious that no other energy source can match photovoltaic solar power.
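What a two-year doubling time implies over a given horizon is simple arithmetic. The following is a minimal sketch, assuming the doubling time stays constant, which is a strong assumption for any real market. The 10x and 100x targets are illustrative and are not figures from the text, and the function names are hypothetical.

```python
import math


def growth_after(years: float, doubling_time: float = 2.0) -> float:
    """Multiplicative growth after `years` at a fixed doubling time."""
    return 2 ** (years / doubling_time)


def years_to_multiply(factor: float, doubling_time: float = 2.0) -> float:
    """Years needed to grow by `factor` at a fixed doubling time."""
    return doubling_time * math.log2(factor)


# Two-year doubling time as stated in the text; the 10x and 100x targets are illustrative.
print(f"growth over 5 years: x{growth_after(5):.2f}")       # x5.66
print(f"years to grow 10x:   {years_to_multiply(10):.1f}")   # 6.6
print(f"years to grow 100x:  {years_to_multiply(100):.1f}")  # 13.3
```

The inverse function is often the more useful one in practice: given an estimate of how far solar must grow to rival an incumbent source, it says how many years that takes at the assumed doubling time.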

It is puzzling why exoskeleton human strength augmentation made the report’s list. First, we have already commercialized compact fork-lifts and powered wheelchairs, so further improvements (in the form of exoskeletons) will necessarily be incremental and will therefore have little impact. Second, an exoskeleton is simply a sophisticated fork-lift/wheelchair and not true human strength augmentation, so it will not elicit the revulsion that might be generated by injecting extra IGF-1 genes or implanting electro-bionic actuators.

While being smarter is certainly desirable, many forms of human cognitive augmentation elicit fear and loathing in many people (as the report recognizes). In terms of game-changing potential, it certainly deserves to be included as a disruptive technology. But it is a prediction of new science, not new engineering, and as such should be labeled “barely plausible.” If human cognitive augmentation is included, then so should other very-high-impact but highly unlikely scenarios such as “gray goo” (i.e. out-of-control self-replicating nanobots), alien invasion, and human-directed meteor strikes.

What should have made the list are many forms of productive nanosystems, especially DNA Origami,[8] Bis-proteins,[9] Patterned Atomic Layer Epitaxy,[10] and Diamondoid Mechanosynthesis.[11],[12],[13] Other technologies that should have been on the list include replicating 3D printers (such as RepRap[14]), the weather machine,[15] Solar Power Satellites (which DoD is currently investigating[16]), Utility Fog,[17] and the Space Pier.[18]

Technologically Sophisticated Terrorism

The report correctly notes that the diffusion of technologies and scientific knowledge will increase the chance that terrorist or other malevolent groups might acquire and employ biological agents or nuclear devices (p. ix). But this danger is seriously underestimated, given the exponential growth of technology. Also underestimated is the future ability to clean up hazardous wastes of all types (including actinides, most notably uranium and plutonium) using nanomembranes and highly selective adsorbents. This is significant, especially in the case of Self-Assembled Monolayers on Mesoporous Supports (SAMMS) developed at Pacific Northwest National Laboratory,[19] because anything that can remove parts-per-billion concentrations of plutonium and uranium from water can also concentrate them. As the price drops for this filtration technology, and for nuclear enrichment tools,[20],[21] eventually small groups and even individuals will be able to collect enough fissile material for nuclear weapons.

The partial good news is that while these concentrating technologies are being developed, medical technology will also be progressing, making severe radiation exposure significantly more survivable. Unfortunately, the end result is an increasing likelihood that nuclear weapons will be used as “ordinary” tactical weapons.

The Distribution of Technology

While it is true that in the energy sector it has taken “an average of 25 years for a new production technology to become widespread,” (p. viii) there are a few things to keep in mind:

Informational technologies spread much faster than non-informational technologies. The explosion of the Internet, web browsers, and the companies that depend on them has occurred in just a few years, if not months. Even now, for example, updates for the Mozilla Firefox browser spread worldwide in days. Productive nanosystems will bring this speed of distribution to physical products, because they will make atoms as easy to manipulate as bits.

Reducing monopolies and their attendant inefficiencies is necessary. Even powerful technologies have trouble emerging in the face of monopolies. The report mentions “selling energy back to the grid,” but understates the value that such a distributed energy network would have in increasing our nation’s security. The best part about building such a robust energy system is that it does not require large amounts of government investment, only an innovation-friendly policy that requires utilities to buy energy at fair rates.

Mandating Gasoline/Ethanol/Methanol-flexibility (GEM) and/or electric hybrid flexibility in automobiles could break the oil cartel.[22] This simple governmental mandate would have huge political implications with little cost impact on consumers (a GEM requirement would only raise the cost of cars by $100-$300).

Miscellaneous Technology Observations

The 2025 report states that “Unprecedented economic growth, coupled with 1.5 billion more people, will put pressure on resources—particularly energy, food, and water—raising the specter of scarcities emerging as demand outstrips supply (p. iv).”

This claim is not necessarily true. The carrying capacity of any given region depends on technology, and increased wealth can pay for advanced technologies. However, war, injustice, and ignorance drastically raise the effort required to avoid scarcities.

The report listed climate change as a possible key factor (p. v) and stated that “Climate change is expected to exacerbate resource scarcities” (p. viii). But even the most pessimistic predictions don’t expect much to happen by 2025. And there is evidence that by 2025, we will almost certainly have the power to stop it with trivial effort.[23], [24]

The Foresight Nanotech Institute and Lux Research have also identified clean water as one of the areas in which technology will have a major impact. A number of different nanomembranes are very promising, and Global Trends 2025 recognizes them as probable successes.

The Global Trends 2025 report identified Ubiquitous Computing, RFID (Radio Frequency Identification), and the “Internet of Things” as improving efficiency in supply chains but, more importantly, as possibly integrating closed societies into the global community (p. 47). SCADA (Supervisory Control And Data Acquisition), which is used to run everything from water treatment plants to nuclear power plants, is a harbinger of the “Internet of Things,” but the news is not all good: an “Internet of Things” will also give hackers and terrorists more opportunities to do harm. (SCADA manuals have been found in Al-Qaeda safe houses.)

Wealth depends on Technology

The 2025 report predicts that “the unprecedented transfer of wealth roughly from West to East now under way will continue for the foreseeable future… First, increases in oil and commodity prices have generated windfall profits for the Gulf states and Russia. Second, lower costs combined with government policies have shifted the locus of manufacturing and some service industries to Asia” (p. vi).

But why would that transfer continue? If the current exponential growth of solar power continues, then within five years it will be obvious that oil is dead. Some of the more astute Arab leaders understand this; former Saudi oil minister Sheikh Ahmed Zaki Yamani is widely quoted as saying, “The Stone Age didn’t end because we ran out of stones, and the oil age won’t end because we run out of oil.”

China and India have gained the lion’s share of the world’s manufacturing, but is there any reason to believe that this will continue? Actually, there is one reason it might: manufacturing underlies a vital part of the real wealth of a society, manufacturing depends on access to science and engineering, and many of the science and engineering graduate students at American universities are foreign-born. On the other hand, many of those foreign graduate students remain in the United States to become U.S. citizens. Even those who return to their home countries maintain personal relationships with American citizens and generally spread positive stories about their experiences in the U.S., which draws more graduate students to come to the United States and settle.

The prediction that the United States will become a less dominant power is a sobering one for Americans. However, of the reasons listed in the report (advances by other countries in science and technology, or S&T; expanded adoption of irregular warfare tactics; proliferation of long-range precision weapons; and growing use of cyber warfare attacks), the only significant item is S&T. This is not only because S&T is the foundation for the other reasons listed, but also because it can often provide a basis for defending against new threats.

S&T is not only the foundation of military might; more importantly, it is a foundation of economic might. However, our economy rests not only on S&T but also on economic policy, and unfortunately everyone’s crystal ball is cloudy in this area. Historically, our regulated capitalism seems to be the basis for much of our wealth, and has been partially responsible for funding S&T. This matters because while human intelligence and ingenuity are scattered relatively evenly among the human race,[25] successful inventions are not. Research, the process of turning money into knowledge, generally requires money up front. After the research is done, innovation, the process of turning knowledge into money, begins, and it is very dependent on the surrounding economic and political environment. At any rate, the relationship between technology and economics is not clear, and certainly needs closer examination.

Wealth depends on Technology depends on Theology

The 2025 report contained some unstated assumptions regarding economics, without defining what real wealth is and on what it depends. At first glance, wealth is stored human labor; this was Marx’s assumption, and it is partly correct. However, one skilled person can do significantly more with good tools, hence the conclusion that tools are the lever of riches (the theme of Mokyr’s book The Lever of Riches[26]).

But tools are not enough. As Zhao (Peter) Xiao, a former Communist Party member and adviser to the Chinese Central Committee, put it:

“From the ancient time till now everybody wants to make more money. But from history we see only Christians have a continuous nonstop creative spirit and the spirit for innovation… The strong U.S. economy is just on the surface. The backbone is the moral foundation.” [27]

He goes on to explain that because we are all made in the image and likeness of God, and are therefore His children:

The Rule of Law is not just something to cleverly avoid, but the means to happiness.

There is a constraint on unbridled and unjust capitalism.

People become rich by working hard to create real wealth, not by gaming the system—which creates waste and inefficiency. [28]

Xiao does not believe in the “prosperity gospel” (i.e. send a televangelist $20 and God will make you rich). He understands that an economic system works more efficiently without false signals and other corruption; that is, a nation will only have a prosperous economy if it has enough moral, law-abiding citizens. In addition, he may be hinting that the idea of Imago Dei (“Image of God”) explains how human intelligence drives Moore’s Law in the first place: if God is infinite, then it makes sense that His images will be able to endlessly do more with less.

Islam

The 2025 report mentions Islam fairly often but does not analyze it in depth. Oddly enough, the United States has been at war with Islamic nations longer than with any other group of nations, starting with the Barbary pirates. So it behooves us to understand Islam, to see whether any fundamental issues might be the root cause of some of these wars. Many Americans have denigrated Islam as a barbaric 7th century relic, not realizing that the Judeo-Christian roots of this nation go back even farther (and are just as barbaric at times). Peter Kreeft has done an excellent job of examining the strengths of Islam, exhorting readers to learn from the followers of Mohammed.[29] But the purpose of this white paper is to investigate how Islamic beliefs hurt Muslims, and us.

There is no question that most Islamic nations have serious economic problems. Islamabad columnist Farrukh Saleem writes:

Muslims are 22 percent of the world population and produce less than five percent of global GDP. Even more worrying is that the Muslim countries’ GDP as a percent of the global GDP is going down over time. The Arabs, it seems, are particularly worse off. According to the United Nations’ Arab Development Report: ‘Half of Arab women cannot read; One in five Arabs live on less than $2 per day; Only 1 percent of the Arab population has a personal computer, and only half of 1 percent use the Internet; Fifteen percent of the Arab workforce is unemployed, and this number could double by 2010; The average growth rate of the per capita income during the preceding 20 years in the Arab world was only one-half of 1 percent per annum, worse than anywhere but sub-Saharan Africa.‘[30]

There are two possible reasons for the high rate of poverty in the Muslim world:

Diagnosis 1: Muslims are poor, illiterate, and weak because they have “abandoned the divine heritage of Islam”. Prescription: They must return to their real or imagined past, as defined by the Qur’an.

Diagnosis 2: Muslims are poor, illiterate, and weak because they have refused to change with time. Prescription: They must modernize technologically, governmentally, and culturally (i.e. start ignoring the Qur’an).[31]

Different Muslims will make different diagnoses, resulting in the continued simultaneous rise of both secularized and fundamentalist Islam. This is the unexplained reason behind the 2025 report’s prediction that “the radical Salafi trend of Islam is likely to gain traction” (p. ix). While it is true that economics is an important causal factor, we must remember that economics is filtered through human psychology, which is filtered through human assumptions about reality (i.e. metaphysics and religion). The important question about Islam and nanotechnology is this: how will exponential increases in technology affect which diagnosis individual Muslims choose? One relatively easy prediction is that it will drive Muslims even more forcefully toward both secularism and fundamentalism, with fewer adherents between them.

We must also address the underlying question: what is it about Islamic beliefs that causes poverty? Global Trends 2025 points out that there is a significant correlation between the poverty of a nation and female literacy rates (p. 16). But the connection goes deeper than that.

A few hundred years ago, the Islamic world was significantly ahead of Europe, technologically and culturally, but then Islamic leaders declared as heretics their greatest philosophers, especially Averroes (Ibn Rushd), who tried to reconcile faith and reason. Christianity struggled with the same tension between faith and reason, but ended up declaring as saints its greatest philosophers, most notably Thomas Aquinas. In addition, Christianity declared heretical those who derided reason, such as Tertullian, who mocked philosophy by asking “What does Athens have to do with Jerusalem?” Reason is vital to science and technology. But the divorce between faith and reason in Islam is not a historical accident, just as it is not an accident that the two are joined in Christianity; these results are due to their respective theologies.

In Islam, the relationship between Allah and humans is a master/slave relationship, and this is reflected in everything, most painfully in the Islamic concept of marriage and how women are treated as a result (hence the link between poverty and female literacy). This belief is rooted in a more fundamental dogma regarding the absolute transcendence of Allah, which is also manifested in the Islamic attitude towards science. The practical result, as pointed out earlier, is economic poverty (documented in Mokyr’s The Lever of Riches, and recognized in the 2025 report (p. 13), where it points out that science and technology are related to economic growth). Pope Benedict pointed out that if Allah is completely transcendent, then there is no rational order in His creation;[32] therefore there would be little incentive to try to discover it. This is the same reason that paganism did not develop science and technology. Aristotle started science by counterbalancing Plato’s rationalism with empiricism, but they (and Socrates) had to jettison most of their pagan beliefs in order to lay these foundations of science. And it still took many centuries to get to Bacon and the scientific method.

The trouble with most Americans is that we have no sense of history. Islam has been at war (mostly with Judaism and Christianity) for more than a millennium (the pagans in its path didn’t last long enough to make any difference). There is little indication that anything will change by 2025. Israel and its Arab neighbors have hated each other ever since Isaac and Ishmael, almost 4000 years ago (if the Qur’an is to be believed in Sura 19:54). The probability that the enmity between these ancient enemies will cool in the next 15 years is infinitesimally small. To make matters worse, extracts of statements by Osama Bin Laden indicate that the 9/11 attack occurred because:

America is the great Satan. Actually, many Christian Evangelicals and traditional Catholics and Jews sympathize with Bin Laden’s accusation in this case (while deploring his methods), noting our cultural promotion of pornography, abortion, and homosexuality.

American bases are stationed in Saudi Arabia (the home of Mecca), which many Muslims see as a blasphemy. It is difficult for Americans to understand why this is so bad—we even protect the right to burn and desecrate our own flag.

Our support for Israel. Since Israel is one of the few democracies in the Mideast, and since its culture doesn’t raise suicide bombers, it seems quite reasonable that we should support it; it’s the right thing to do. As an appeal to self-interest, we can always remember that over the past 105 years, 1.4 billion Muslims have produced only eight Nobel Laureates while a mere 14 million Jews have produced 167 Nobel Laureates.

Given the history of Islam’s relationship with all other belief systems, the outlook is gloomy. If the past 1400 years are any guide, Islam will continue to be at war with Paganism, Atheism, Hinduism, Judaism, and Christianity, both in hot wars of conquest and in psychological battles for the hearts and minds of the world.[33]

Muslim Demographics

The 2025 report made a wise decision in covering demographic issues, since they are predictable. But it did not investigate the causal sources (personal and cultural beliefs) of crucial demographic trends. The report writes that “the radical Salafi trend of Islam is likely to gain traction” in “those countries that are likely to struggle with youth bulges and weak economic underpinnings” (p. ix).

This is certainly an accurate prediction. But what human beliefs lead to behavior that leads to youth bulges and weak economies? The answer is quite complex, partially because the Qur’an is not crystal clear on this issue. But generally “Muslim religiosity and support for Shari’a Law are associated with higher fertility,” and better education, higher wealth, and urbanization do not reduce Muslim fertility (as they do with other religions). The result is that while religious fundamentalism in Islam does not boost fertility as much as it does for Jewish traditionalists in Israel, it is still true that “fertility dynamics could power increased religiosity and Islamism in the Muslim world in the twenty-first century.”[34]

Other Practical Aspects of Islam Theology

One of the reasons the Western world is at odds with Islam is because of different views on freedom and virtue. Americans generally value freedom over virtue. In Islam, however, virtue is far more important than freedom, despite the fact that virtue requires an act of free will. In other words, Muslims don’t seem to realize that if good behavior is forced, then it is not really virtuous. Meanwhile, here in the USA we seem to have forgotten that vices enslave us—as demonstrated by addictions to drugs, gambling, and sex; we have forgotten that true freedom requires us to be virtuous—that we must bridle our passions in order to be truly free.

A disturbing facet of Islam is that it requires the death of an apostate. Theologically, this is because Allah is master, not father or spouse (as God is most often portrayed in the Bible), and submission to Allah is mandatory in Islam. While it is true that Christianity authorized the secular authorities to burn a few thousand heretics over two thousand years, these burnings occurred in extreme situations of maximum irrationality that were fixed fairly quickly hundreds of years ago (often a single thoughtful bishop or priest stopped an outbreak). In contrast, fatwas demanding the death penalty for apostates and heretics are still common in Islamic countries.[35]

Theology, Technological Progress, and Cultural Success

Religions do not make people stupid or cowardly. President Bush may have called the 9/11 Islamic terrorists cowardly, but they were not. They went to their deaths as bravely as any American soldier. Nor were they stupid—otherwise they never would have been able to pull off the most devastating terrorist attack on the U.S. in our relatively short history, cleverly devising a way to use our open society and our technology to maximal effect. But as individuals they were deluded, and their culture could not design or build jumbo jets; hence they used ours. This means that Islamic terrorists will be glad to use nanotechnological weapons as eagerly as nuclear ones—once they get their hands on them. The problem, of course, is that nano-enhanced weapons will be much easier to develop than nuclear ones.

Conclusion

Ever since the time of the Pilgrims, Americans have considered themselves citizens of a “bright, shining city on the hill,” and much of the world agreed, with immigrants pouring in for three centuries to build the most powerful nation in history. Our representative democracy and loosely regulated capitalism, tempered by individual consciences formed on a Judeo-Christian foundation of rights and responsibilities, have been copied all over the world (at least superficially). But will this shining city endure?

It is the task of the U.S. National Intelligence Council to make sure that it does, and its effort to understand the future is an important step in that direction. Ideally, the Council will examine more closely the impact that technology, especially productive nanosystems, will have on political structures. It also needs to understand the theological underpinnings of Islam and how they will affect the technological capabilities of Muslim nations.

Addendum

For a better government-sponsored report on how technology will affect us, see Toffler Associates’ Technology and Innovation 2025 at http://www.toffler.com/images/Toffler_TechAndInnRep1-09.pdf.

——————————————————————————–

[1] National Intelligence Council, Global Trends 2025: A Transformed World http://www.dni.gov/nic/PDF_2025/2025_Global_Trends_Final_Report.pdf and www.dni.gov/nic/NIC_2025_project.html

[2] Earlier exceptions are rare, though technology has occasionally been lost, most notably in 5th-century Europe after the fall of the Roman Empire, and in 15th-century China after the last voyage of Admiral Zheng He’s Treasure Fleet of the Dragon Throne.

[3] Singularity Symposium, Exponential Growth and the Legend of Paal Paysam. http://www.singularitysymposium.com/exponential-growth.html

[4] Ray Kurzweil, The Law of Accelerating Returns. March 7, 2001. http://www.kurzweilai.net/articles/art0134.html?printable=1

[5] Matthew R. Simmons, Revisiting The Limits to Growth: Could The Club of Rome Have Been Correct, After All? (Part One). Sep 30 2000. http://www.energybulletin.net/node/1512 Note that technological optimists always quote the chess example, while environmental doomsayers always quote the lily pad example.

[6] Candace K. Chan, Hailin Peng, Gao Liu, Kevin McIlwrath, Xiao Feng Zhang, Robert A. Huggins, and Yi Cui, High-performance lithium battery anodes using silicon nanowires, Nature Nanotechnology 3, 31–35 (2008). http://www.nature.com/nnano/journal/v3/n1/abs/nnano.2007.411.html

[7] See Nanotechnology’s biggest stories of 2008 http://www.newscientist.com/article/dn16340-nanotechnologys-biggest-stories-of-2008.html and Top Ten Nanotechnology Patents of 2008 http://tinytechip.blogspot.com/2008/12/top-ten-nanotechnology-patents-of-2008.html

[8] Paul Rothemund, Folding DNA to create nanoscale shapes and patterns, Nature, Vol. 440, 16 March 2006.

[9] Christian E. Schafmeister. The Building Blocks of Molecular Nanotechnology. Conference on Productive Nanosystems: Launching the Technology Roadmap. Arlington, VA. Oct. 9–10, 2007.

[10] John N. Randall. A Path to Atomically Precise Manufacturing. Conference on Productive Nanosystems: Launching the Technology Roadmap. Arlington, VA. Oct. 9–10, 2007.

[11] Ralph Merkle and Robert Freitas Jr., “Theoretical analysis of a carbon-carbon dimer placement tool for diamond mechanosynthesis,” Journal of Nanoscience and Nanotechnology. 3(August 2003):319-324; http://www.rfreitas.com/Nano/JNNDimerTool.pdf

[12] Robert A. Freitas Jr. and Ralph C. Merkle, A Minimal Toolset for Positional Diamond Mechanosynthesis, Journal of Computational and Theoretical Nanoscience, Vol. 5, 760–861, 2008.

[13] Jingping Peng, Robert Freitas, Jr., Ralph Merkle, James Von Ehr, John Randall, and George D. Skidmore, Theoretical Analysis of Diamond Mechanosynthesis. Part III. Positional C2 Deposition on Diamond C(110) Surface Using Si/Ge/Sn-Based Dimer Placement Tools, Journal of Computational and Theoretical Nanoscience, Vol. 3, 28–41, 2006. http://www.molecularassembler.com/Papers/JCTNPengFeb06.pdf

[14] Adrian Bowyer et al., RepRap: Wealth without money. http://reprap.org/bin/view/Main/WebHome

[15] John Storrs Hall, The Weather Machine. December 23, 2008, http://www.foresight.org/nanodot/?p=2922

[16] National Security Space Office. Space-Based Solar Power As an Opportunity for Strategic Security: Phase 0 Architecture Feasibility Study. http://www.scribd.com/doc/8736624/SpaceBased-Solar-Power-Interim-Assesment-01

[17] John Storrs Hall, Utility Fog: The Stuff that Dreams are Made Of. http://autogeny.org/Ufog.html

[18] John Storrs Hall, The Space Pier: A hybrid Space-launch Tower concept. http://autogeny.org/tower/tower.html

[19] Pacific Northwest National Laboratory, SAMMS: Self-Assembled Monolayers on Mesoporous Supports. http://samms.pnl.gov/

[20] OECD Nuclear Energy Agency, Trends in the nuclear fuel cycle: economic, environmental and social aspects, Organization for Economic Co-operation and Development, 2001.

[21] Mark Clayton, Will lasers brighten nuclear’s future? The Christian Science Monitor, August 27, 2008. http://features.csmonitor.com/innovation/2008/08/27/will-lasers-brighten-nuclears-future/

[22] Paul Werbos, What should we be doing today to enhance world energy security, in order to reach a sustainable global energy system? http://www.werbos.com/energy.htm See also Robert Zubrin, Energy Victory: Winning the War on Terror by Breaking Free of Oil. Prometheus Books. November 2007.

[23] John Storrs Hall, The Weather Machine. December 23, 2008, http://www.foresight.org/nanodot/?p=2922

[24] Tihamer Toth-Fejel, A Few Lesser Implications of Nanofactories: Global Warming is the Least of our Problems, Nanotechnology Perceptions, March 2009.

[25] Exceptions would be small groups who were subject to selective pressure to increase intelligence, such as the Ashkenazi Jews.

[26] Joel Mokyr, The Lever of Riches: Technological Creativity and Economic Progress. Oxford University Press, USA (April 9, 1992). http://www.amazon.com/Lever-Riches-Technological-Creativity-Economic/dp/0195074777

[27] Zhao (Peter) Xiao, Market Economies With Churches and Market Economies Without Churches http://www.danwei.org/business/churches_and_the_market_econom.php

[28] ibid.

[29] Peter Kreeft, Ecumenical Jihad: Ecumenism and the Culture War, Ignatius Press (March 1996). More specifically, Kreeft points out that Muslims have lower rates of abortion, adultery, fornication, and sodomy; and higher rates of prayer and devotion to God. Kreeft then repeats the Biblical admonition that God blesses those who obey His commandments. For atheists and agnostics, it might be more palatable to think of it as evolution in action: If a group encourages behavior that reduces the number of capable offspring, then it is doomed.

[30] Farrukh Saleem, Muslims amongst world’s poorest weakest, illiterate: What Went Wrong. November 08, 2005 http://islamicterrorism.wordpress.com/2008/07/01/muslims-amongst-worlds-poorest-weakest-illiterate-what-went-wrong/

[31] ibid.

[32] Pope Benedict XVI. Faith, Reason and the University: Memories and Reflections. University of Regensburg, September 2006. http://www.vatican.va/holy_father/benedict_xvi/speeches/2006/september/documents/hf_ben-xvi_spe_20060912_university-regensburg_en.html

[33] Note that this report is not a critique of Muslim people—only their beliefs (though it may not feel that way to them).

[34] Kaufmann, E. P., “Islamism, Religiosity and Fertility in the Muslim World,” Annual meeting of the ISA’s 50th Annual Convention: Exploring the Past, Anticipating the Future. New York, NY. Feb 13–15, 2009. http://www.allacademic.com/meta/p312181_index.html

[35] On the other hand (to put things in perspective), compared to the atheists Stalin, Mao, and Pol Pot, even the deadliest Muslim extremists are rank amateurs at mass murder. Perhaps that is why Communism has barely lasted two generations, while Islam has lasted fourteen centuries. You just can’t go around killing people.

Tihamer Toth-Fejel, MS

General Dynamics Advanced Information Systems

Michigan Research and Development Center
