Some weird religious stories w/ transhumanism

Expect the conflict between religion and transhumanism to get worse, as closed-minded conservative viewpoints get challenged by radical science and a future with no need for an afterlife: http://barbwire.com/2017/04/06/cybernetic-messiah-transhumanism-artificial-intelligence/ & http://www.livebytheword.blog/google-directors-push-for-computers-inside-human-brains-is-anti-christ-human-rights-abuse-theologians-explain/ & http://ctktexas.com/pastoral-backstory-march-30th-2017/

By J. Davila Ashcroft

The recent film Ghost in the Shell is a science fiction tale about a young girl (known as Major) who is used as the subject of a Transhumanist/Artificial Intelligence experiment that turns her into a weapon. At first she complies, believing that the company behind the experiment saved her life after her family died. The truth, however, is that the company took her by force while she was a runaway. Major discovers that the company has done the same to others, and this knowledge leads her to turn against it. Throughout the story the viewer is confronted with the existential questions behind such an experiment, as Major struggles with the trauma of no longer feeling the warmth of human skin or the sensations of touch and taste. She feels less than human, even though she is told many times that she is better than human. While this is obviously a science fiction story, what may come as a surprise to some is that its subject matter is not pure fiction. Transhumanism and Artificial Intelligence on the level explored in this film are all too real, and seem to be only a few years away.

Recently it was reported that Elon Musk of SpaceX fame had a rather disturbing meeting with Demis Hassabis. Hassabis heads DeepMind, a Google-owned company whose far-reaching plans are akin to the Ghost in the Shell story. DeepMind is dedicated to exploring and developing all the possible uses of Artificial Intelligence. Musk reportedly stated during this meeting that the colonization of Mars is important because Hassabis' work will make Earth too dangerous for humans. As a demonstration of how dangerous DeepMind's goals are, one of its co-founders, Shane Legg, is reported to have stated, "I think human extinction will probably occur, and this technology will play a part in it." Legg likely understands what critics of artificial intelligence have been saying for years: such technology is almost certain to become "self-aware," that is, to become aware of its own existence and abilities and to develop distinct opinions and protocols that override those of its creators. If artificial intelligence does become sentient, that would mean, for advocates of A.I., that we would then owe these machines moral consideration. They, however, would owe humanity no such consideration if they perceived us as a danger to their existence, since we could simply disconnect them. In that scenario we would be an existential threat to them, and what do you think would come of that? Thus Legg's statement carries an important message.

Already, so-called "deep learning" machines are capable of finding solutions that were not programmed into them, and they can teach themselves to improve. For example, AlphaGo, an artificial intelligence designed to play the game Go, developed its own strategies for winning, strategies that its creators cannot explain and are at a loss to understand.

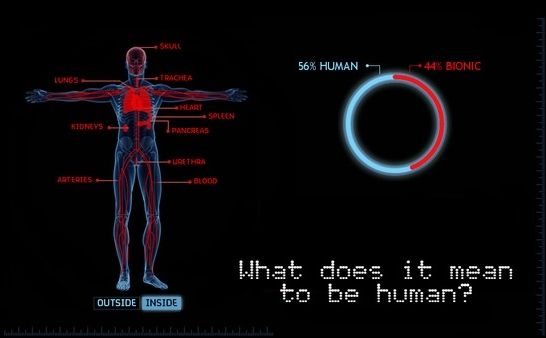

Transhumanist Philosophy

The fact is that many of us have been physically altered in some way. Some of the most common examples are LASIK surgery, hip and knee replacements, and heart valve replacements, and nearly everyone has had vaccines that enhance our natural ability to resist certain illnesses and diseases. The question is, how far is too far? How "enhanced" is too enhanced?
