For millennia, our planet has sustained a robust ecosystem, healing each deforestation, algae bloom, pollution event or imbalance caused by natural events. Before the arrival of an industrialized, destructive and dominant global species, it could deal with pretty much anything short of a major meteor impact. In the big picture, even these cataclysmic events haven’t destroyed the environment. They just changed the course of evolution and reshuffled which animals dominate.

But with industrialization, the race for personal wealth, nations fighting nations, and modern comforts, we have come to recognize that our planet is not invincible. This is why Lifeboat Foundation exists. We are all about recognizing the limits to growth and protecting our fragile environment.

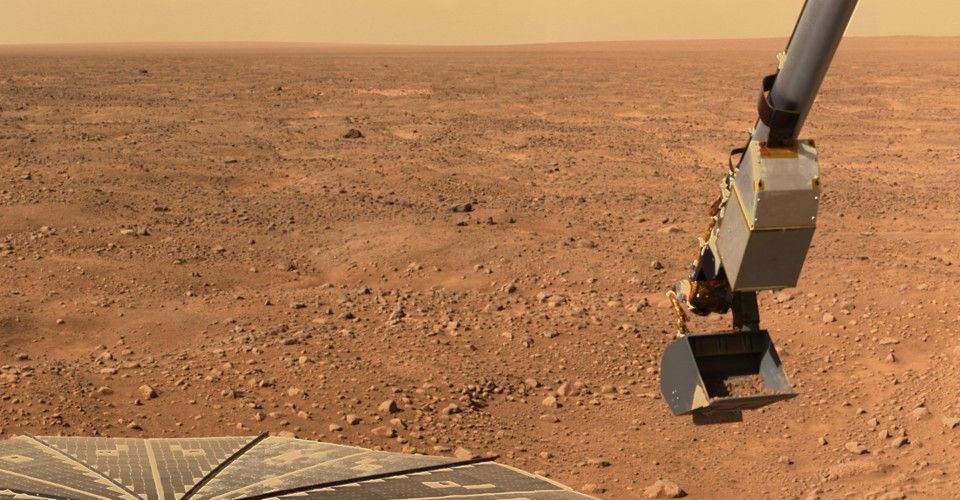

Check out this April news article on the US president’s forthcoming appointment of Jim Bridenstine, a vocal climate denier, as head of NASA. NASA is one of the largest agencies on Earth. Despite his lack of training or experience, and without literacy in science, technology or astrophysics, he was handed an enormous responsibility: a staff of 17,000 and a budget of $19 billion.

In 2013, Bridenstine criticized then-President Obama for wasting taxpayer money on climate research, and claimed that global temperatures had stopped rising 15 years earlier.

The Vox headline states: “Next NASA administrator is a Republican congressman with no background in science”. The article points out that Bridenstine’s confirmation has been controversial, even among members of his own party.

Sometimes, flip-flopping is a good thing

In less than one month, Jim Bridenstine has changed, and he has changed a lot!

After less than a month as head of NASA, he is convinced that climate change is real, that human activity is a significant cause and that it presents an existential threat. He has gone from climate denier to passionate advocate for doing whatever is needed to reverse our impact and protect the environment.

What changed?

Bridenstine acknowledges that he was a denier, but says that the evidence and the science are overwhelming and convincing, even after just a few weeks of exposure to world-class scientists and engineers.

For anyone who still claims that there is no global warming or that the evidence is ‘iffy’, it is worth noting that Bridenstine was a hand-picked goon. His appointment was recommended by right-wing conservatives and rubber-stamped by the current administration. He was a denier, but he had a sufficiently open mind to listen to experts and review the evidence.

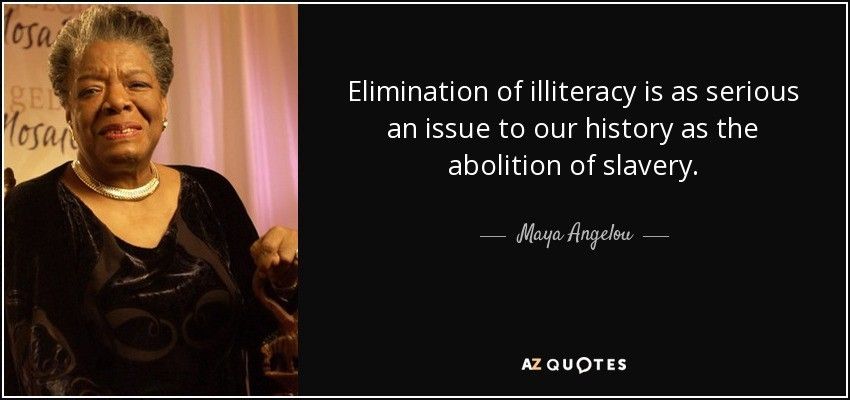

Do you suppose that the US president is listening? Do you suppose that he will grasp the most important issues of this century? What about other world leaders, legislative bodies and rock stars? Will they use their powers or influence to do the right thing? For the sake of our existence, let us hope they follow the lead of Jim Bridenstine, former climate denier!

Philip Raymond co-chairs CRYPSA, hosts the New York Bitcoin Event and is a keynote speaker at cryptocurrency conferences. He sits on the New Money Systems board of Lifeboat Foundation.

Don’t get me wrong. I may be in the minority, but I generally trust Google. I recognize that I am raw material and not a client. I accept the tradeoff that I make when I use Gmail, search the web, navigate to a destination or share documents. I benefit from this bargain as Google matches my behavior with improved filtering of marketing directed at me.