Experience the whole new future created by OLED and see how OLED will bring significant change to our lives.

For more information visit▶

LG OLED TV UK URL: http://www.lg.com/uk/lgoled/

The VR sound barrier: how do we address it?

I’m staring at a large iron door in a dimly lit room. “Hey,” a voice says, somewhere on my right. “Hey buddy, you there?” It’s a heavily masked humanoid. He proceeds to tell me that my sensory equipment is down and will need to be fixed. Seconds later, the heavy door groans. A second humanoid leads the way into the spaceship where my suit will be repaired.

Inside a wide room with bright spotlights I notice an orange drilling machine. “OK, before we start, I need to remove the panel from the back of your head,” says the humanoid. I hear the whirring of a drill behind me. I squirm and reflexively raise my shoulders. The buzzing gets louder, making the hair on the nape of my neck stand up.

Then I snapped out of it. I removed the Oculus Rift DK2 strapped on my face and the headphones pressed on my ears and was back on the crowded floors of the Consumer Electronics Show in Vegas. But for a few terrifying seconds, the realistic audio in Fixing Incus, a virtual reality demo built on RealSpace 3D audio engine, had tricked my brain into thinking a machine had pulled nails out from the back of my head.

BMI is an area that will only explode once the first successful tests are presented to the public. I suggest investors, technologists, and researchers keep an eye on this one, because its impact on the world will be truly immense, especially when you realize that BMI changes everything about how we view, process, and connect with others, business, our homes, public services, transportation, healthcare, etc.

Implantable brain-machine interfaces (BMI) that will allow their users to control computers with thoughts alone will soon be a reality. DARPA has announced its plans to make such wetware. The interface would be no larger than two nickels stacked one on top of the other.

These implantable chips, according to DARPA, will ‘open the channel between the human brain and modern electronics’. Although DARPA researchers have made a few earlier attempts at a brain-machine interface, previous versions had limited functionality.

The wetware is being developed as part of the Neural Engineering System Design (NESD) program. The device would translate the chemical signals in neurons into digital code. Phillip Alvelda, the NESD program manager, said, “Today’s best brain-computer interface systems are like two supercomputers trying to talk to each other using an old 300-baud modem. Imagine what will become possible when we upgrade our tools to really open the channel between the human brain and modern electronics.”

Hot damn, our Ghost in the Shell future is getting closer by the day. DARPA announced on Tuesday that it is interested in developing wetware — implantable brain-machine interfaces (BMI) that will allow their users to control computers with their thoughts. The device, developed as part of the Neural Engineering System Design (NESD) program, would essentially translate the chemical signals in our neurons into digital code. What’s more, DARPA expects this interface to be no larger than two nickels stacked atop one another.

“Today’s best brain-computer interface systems are like two supercomputers trying to talk to each other using an old 300-baud modem,” Phillip Alvelda, the NESD program manager, said in a statement. “Imagine what will become possible when we upgrade our tools to really open the channel between the human brain and modern electronics.”

The advanced research agency hopes the device will make an immediate impact in the medical field (you know, once it’s actually invented). Since the proposed BMI would connect to as many as a million individual neurons (a few orders of magnitude more than the 100 or so that current devices can link with), patients suffering from vision or hearing loss would see an unprecedented gain in the fidelity of their assistive devices. Patients who have lost limbs would similarly see a massive boost in the responsiveness and capabilities of their prosthetics.

“CES wrapped up last week and I can say it was the best one I’ve seen in a decade. Three big stories jumped out this year:

1. VR.

2. Self-driving cars.

3. AR.”

Tour of future office. Just @ your Boss. #LG

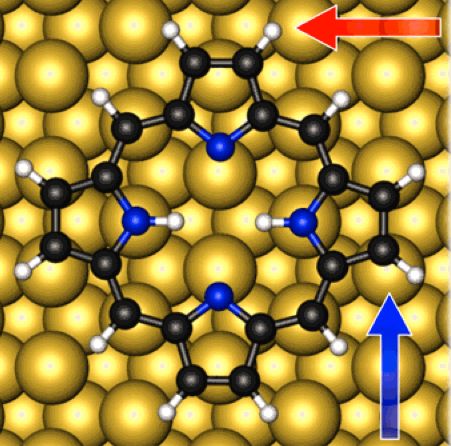

Interesting article about nanoswitches and how this technology enables the self-assembly of molecules. This should help advance many efforts, such as molecular memory devices, photovoltaics, gas sensors, and light emission. However, I also see potential use in nanobot technology as it relates to future alignment mappings with the brain.

Molecular nanoswitch: calculated adsorption geometry of porphine adsorbed at copper bridge site (credit: Moritz Müller et al./J. Chem. Phys.)

Technical University of Munich (TUM) researchers have simulated a self-assembling molecular nanoswitch in a supercomputer study.

As with other current research in bottom-up self-assembly nanoscale techniques, the goal is to further miniaturize electronic devices, overcoming the physical limits of currently used top-down procedures such as photolithography.

Another interesting find from KurzweilAI.

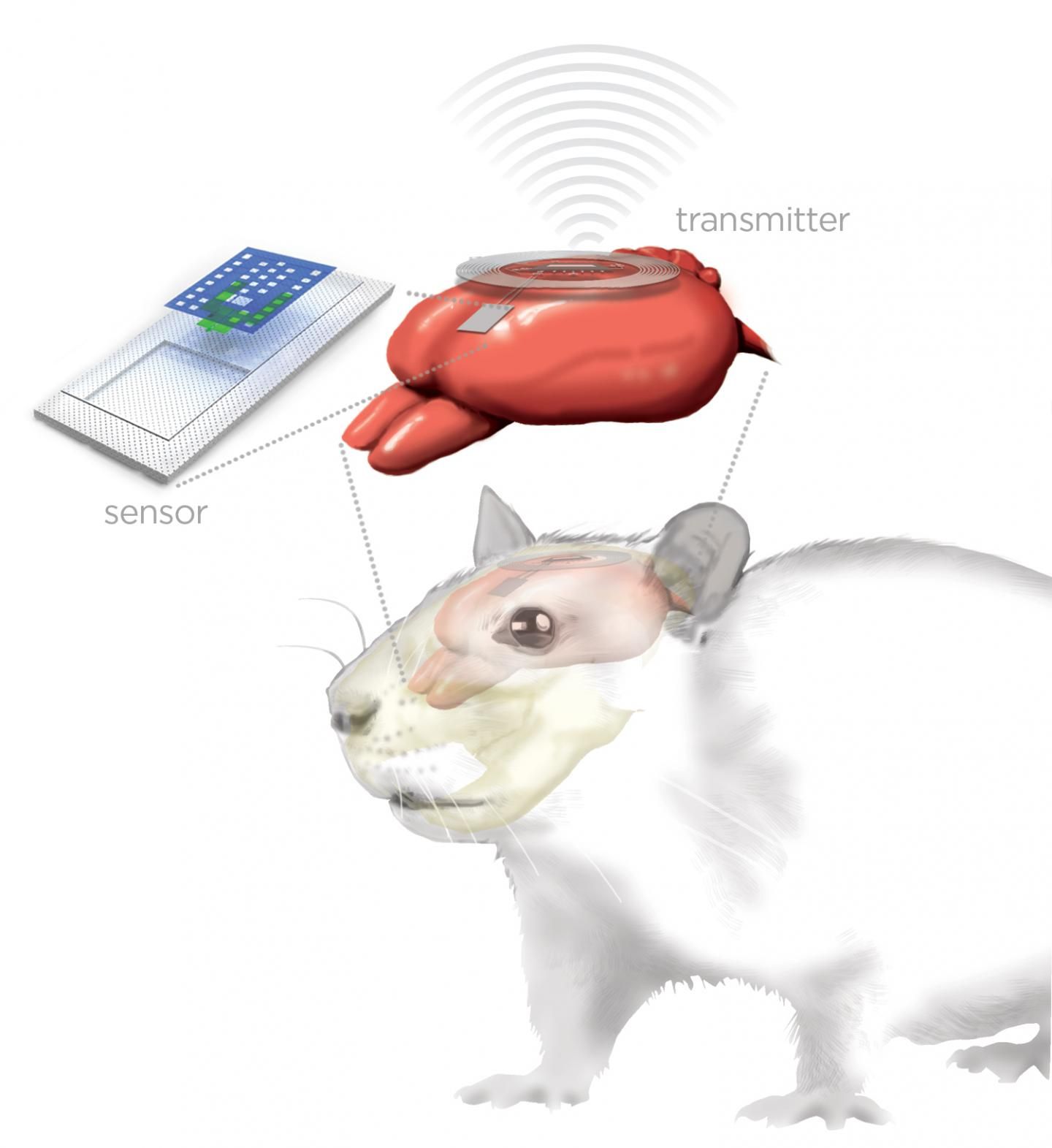

Artist’s rendering of bioresorbable implanted brain sensor (top left) connected via biodegradable wires to external wireless transmitter (ring, top right) for monitoring a rat’s brain (red) (credit: Graphic by Julie McMahon)

Researchers at University of Illinois at Urbana-Champaign and Washington University School of Medicine in St. Louis have developed a new class of small, thin electronic sensors that can monitor temperature and pressure within the skull — crucial health parameters after a brain injury or surgery — then melt away when they are no longer needed, eliminating the need for additional surgery to remove the monitors and reducing the risk of infection and hemorrhage.

Similar sensors could be adapted for postoperative monitoring in other body systems as well, the researchers say.

A new DARPA program aims to develop an implantable neural interface able to provide unprecedented signal resolution and data-transfer bandwidth between the human brain and the digital world. The interface would serve as a translator, converting between the electrochemical language used by neurons in the brain and the ones and zeros that constitute the language of information technology. The goal is to achieve this communications link in a biocompatible device no larger than one cubic centimeter in size, roughly the volume of two nickels stacked back to back.

The program, Neural Engineering System Design (NESD), stands to dramatically enhance research capabilities in neurotechnology and provide a foundation for new therapies.

“Today’s best brain-computer interface systems are like two supercomputers trying to talk to each other using an old 300-baud modem,” said Phillip Alvelda, the NESD program manager. “Imagine what will become possible when we upgrade our tools to really open the channel between the human brain and modern electronics.”

Makes it easier to build tiny machines, biomedical sensors, optical computers, solar panels, and other devices; no complex clean room is required, and a portable version is planned.

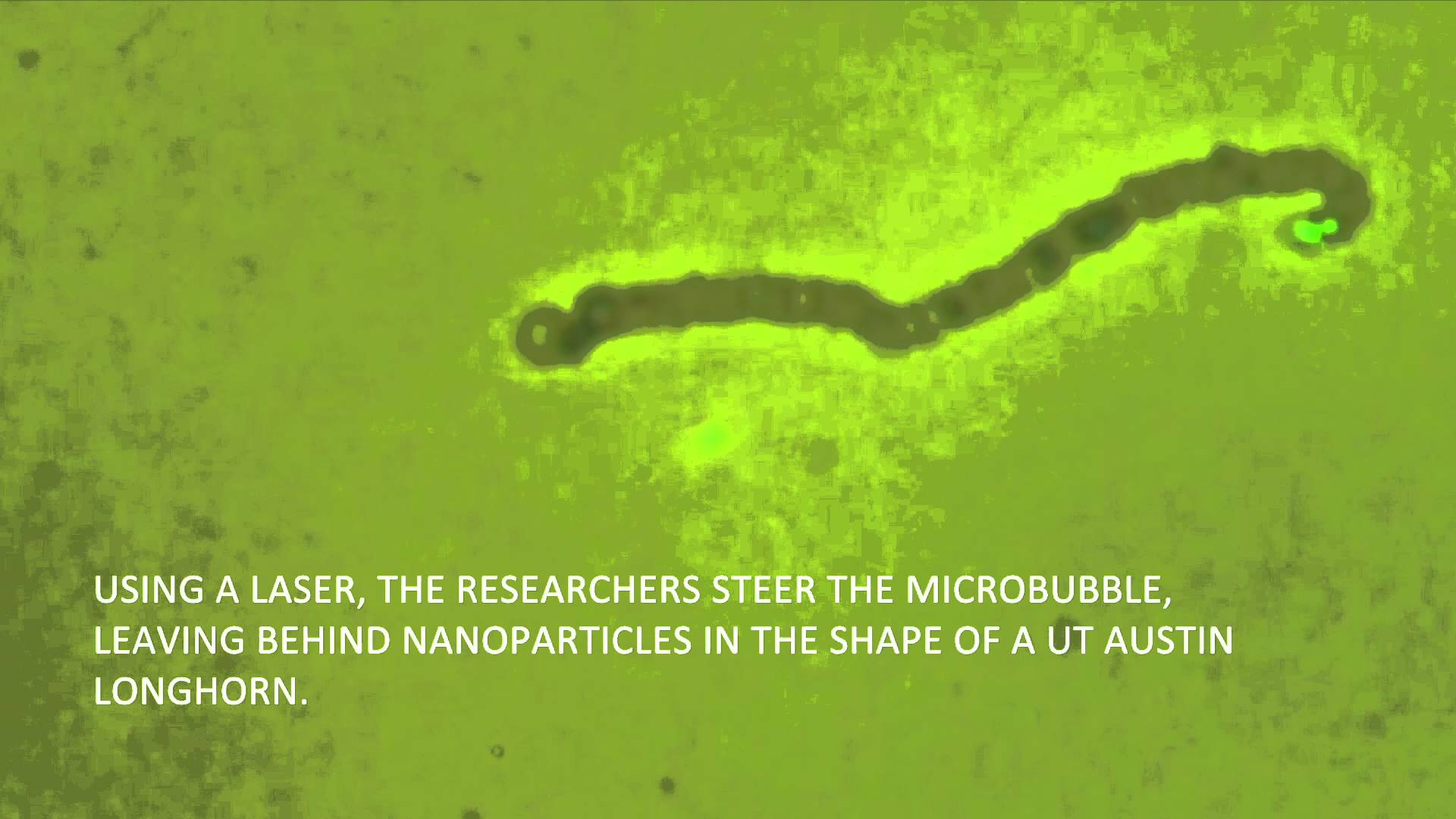

Illustration of the bubble-pen pattern-writing process using an optically controlled microbubble on a plasmonic substrate. The small blue spheres are colloidal nanoparticles. (credit: Linhan Lin et al./Nano Letters)

Researchers in the Cockrell School of Engineering at The University of Texas at Austin have created “bubble-pen lithography” — a device and technique to quickly, gently, and precisely use microbubbles to “write” using gold, silicon and other nanoparticles between 1 and 100 nanometers in size as “ink” on a surface.

The new technology is aimed at allowing researchers to more easily build tiny machines, biomedical sensors, optical computers, solar panels, and other devices.