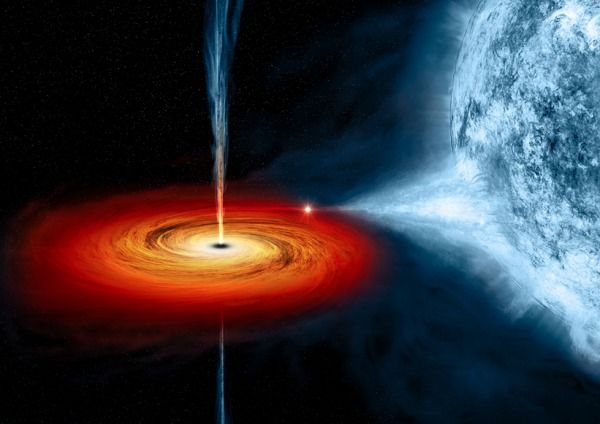

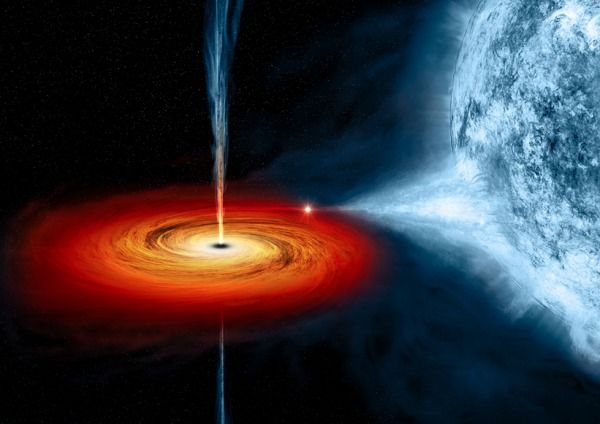

Lurking 8,000 light years from Earth is a black hole 12 times more massive than our sun. It’s…


“In its inaugural year of observations, the Dark Energy Survey has already turned up at least eight objects that look to be new satellite dwarf galaxies of the Milky Way.”

In 2014, I submitted my paper “A Universal Approach to Forces” to the journal Foundations of Physics. The 1999 Nobel Laureate Prof. Gerardus ’t Hooft, editor of this journal, suggested that I submit this paper to the journal Physics Essays.

My previous 2009 submission to Physics Essays, “Gravitational acceleration without mass and noninertia fields”, had taken 1.5 years to be reviewed and accepted. Therefore, I decided against Prof. Gerardus ’t Hooft’s recommendation, as I estimated that all six papers (now published as Super Physics for Super Technologies) would take up to 10 years and/or $20,000 to publish in peer-reviewed journals.

Prof. Gerardus ’t Hooft had brought up something interesting in his 2008 paper “A locally finite model for gravity”: that “… absence of matter now no longer guarantees local flatness…”, meaning that accelerations can be present in spacetime without the presence of mass. Wow! Isn’t this a precursor to propulsion physics, or the ability to modify spacetime without the use of mass?

As far as I could determine, he didn’t pursue this from the perspective of propulsion physics. A year earlier, in 2007, I had discovered the massless formula for gravitational acceleration g=τc^2, published in the Physics Essays paper referred to above. In effect, g=τc^2 was the mathematical solution to Prof. Gerardus ’t Hooft’s “… absence of matter now no longer guarantees local flatness…”

Prof. Gerardus ’t Hooft used string theory to arrive at his inference. Could he empirically prove it? No, not with strings. It took a different approach, numerical modeling within the context of Einstein’s Special Theory of Relativity (STR), to derive a mathematical solution to Prof. Gerardus ’t Hooft’s inference.

In 2013, I attended Dr. Brian Greene’s Gamow Memorial Lecture, held at the University of Colorado Boulder. If I heard him correctly, the number of strings or string states being discovered has been increasing, and is now in the 10^500 range.

I find these two encounters telling. While not rigorously proved, I infer that (i) string theories are unable to take us down a path that can be empirically proven, and (ii) they are open-ended, i.e. they can be used to propose any specific set of outcomes based on any specific set of inputs. The problem with this is that you now have to find a theory for why a specific set of inputs was chosen. I would have thought that this would be heartbreaking for theoretical physicists.

In 2013, I presented the paper “Empirical Evidence Suggest A Need For A Different Gravitational Theory” at the American Physical Society’s April conference held in Denver, CO. There I met some young physicists and asked them about working on gravity modification. One of them summarized it very well: “Do you want me to commit career suicide?” This explains why many of our young physicists continue to seek employment in the field of string theories where, unfortunately, the hope of empirically testable findings, i.e. of winning the Nobel Prize, is next to nothing.

I think string theories are wrong.

Two transformations or contractions are present with motion: the Lorentz-FitzGerald Transformation (LFT) in linear motion, and the Newtonian Gravitational Transformations (NGT) in gravitational fields.
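For reference, the Lorentz-FitzGerald contraction has a standard closed form: a body of rest length L0 moving at speed v is measured as L = L0·sqrt(1 − v²/c²) along its direction of motion. The minimal Python sketch below evaluates only that textbook LFT factor; it does not model the NGT, whose equations are not given in this text, and the function names are illustrative.

```python
import math

C = 299_792_458.0  # speed of light in vacuum, m/s (exact by SI definition)

def lorentz_factor(v: float) -> float:
    """Return gamma = 1 / sqrt(1 - v^2/c^2) for a speed 0 <= v < c."""
    if not 0.0 <= v < C:
        raise ValueError("speed must satisfy 0 <= v < c")
    return 1.0 / math.sqrt(1.0 - (v / C) ** 2)

def contracted_length(rest_length: float, v: float) -> float:
    """Length measured along the direction of motion: L = L0 / gamma."""
    return rest_length / lorentz_factor(v)

# A 1 m rod moving at 0.6c has gamma = 1.25, so it measures about 0.8 m.
print(contracted_length(1.0, 0.6 * C))
```

The guard in `lorentz_factor` reflects the same limit the text relies on: at v = c the factor is undefined.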

The fundamental assumption or axiom of strings is that they expand when their energy (velocity) increases. This axiom (let’s name it the Tidal Axiom) appears to have its origins in tidal gravity attributed to Prof. Roger Penrose. That is, macro bodies elongate as the body falls into a gravitational field. To be consistent with NGT, the atoms and elementary particles would contract in the direction of this fall. However, to be consistent with tidal gravity’s elongation, the distances between atoms in this macro body would increase at a rate consistent with the acceleration and velocities experienced by the various parts of this macro body. That is, as the atoms get flatter, the distances between them get longer. Therefore, for a string to be consistent with LFT and NGT, it would have to contract, not expand. One suspects that this Tidal Axiom’s inconsistency with LFT and NGT has led to an explosion of string theories, each trying to explain Nature with no joy. See my peer-reviewed 2013 paper “New Evidence, Conditions, Instruments & Experiments for Gravitational Theories”, published in the Journal of Modern Physics, for more.

The vindication of this contraction is the discovery of the massless formula for gravitational acceleration g=τc^2 using Newtonian Gravitational Transformations (NGT) to contract an elementary particle in a gravitational field. Neither quantum nor string theories have been able to achieve this, as quantum theories require point-like inelastic particles, while strings expand.

What worries me is that it takes about 70 to 100 years for a theory to evolve into commercially viable consumer products. Lasers are a good example. So, if we are tying up our brightest scientific minds with theories that cannot lead to empirical validation, can we be the primary technological superpower 100 years from now?

The massless formula for gravitational acceleration, g=τc^2, shows us that new theories on gravity and force fields will be similar to General Relativity, which is only a gravity theory. The mass source in these new theories will be replaced by field and particle motions, not mass or momentum exchange. See my Journal of Modern Physics paper referred to above on how to approach this, and Super Physics for Super Technologies on how to accomplish this.

Therefore, given that the primary axiom of string theories, the Tidal Axiom, is incorrect, it is vital that we recognize that any mathematical work derived from string theories is invalidated. And given that string theories are particle-based theories, this mathematical work is not transferable to the new relativity-type force field theories.

I forecast that both string and quantum gravity theories will be dead by 2017.

When I was seeking funding for my work, I looked at the Broad Agency Announcements (BAAs) for a category that includes gravity modification or interstellar propulsion. To my surprise, I could not find this category in any of our research organizations, including DARPA, NASA, National Science Foundation (NSF), Air Force Research Lab, Naval Research Lab, Sandia National Lab or the Missile Defense Agency.

So what are we going to do when our young graduates do not want to or cannot be employed in string theory disciplines?

(Originally published in the Huffington Post)

Gravity modification, the scientific term for antigravity, is the ability to modify the gravitational field without the use of mass. Thus legacy physics, the RSQ (Relativity, String & Quantum) theories, cannot deliver either the physics or technology as these require mass as their field origin.

Ron Kita, who recently received the first US patent (8901943) related to gravity modification in recent history, introduced me to Dr. Takaaki Musha some years ago. Dr. Musha has a distinguished history of researching the Biefeld-Brown effect in Japan, going back to the late 1980s, and has worked for the Ministry of Defense and Honda R&D.

Dr. Musha is currently editing New Frontiers in Space Propulsion (Nova Publishers) expected later this year. He is one of the founders of the International Society for Space Science whose aim is to develop new propulsion systems for interstellar travel.

Wait. What? Honda? Yes. For us Americans, it is unthinkable for General Motors to investigate gravity modification, and yet here was Honda, in the 1990s no less, researching this topic.

In recent years Biefeld-Brown has gained some notoriety as an ionic wind effect. I, too, was of this opinion until I read Dr. Musha’s 2008 paper “Explanation of Dynamical Biefeld-Brown Effect from the Standpoint of ZPF field.” Reading this paper I realized how thorough, detailed and meticulous Dr. Musha was. Quoting selected portions from Dr. Musha’s paper:

In 1956, T.T. Brown presented a discovery known as the Biefeld-Brown effect (abbreviated B-B effect) that a sufficiently charged capacitor with dielectrics exhibited unidirectional thrust in the direction of the positive plate.

From the 1st of February until the 1st of March in 1996, the research group of the HONDA R&D Institute conducted experiments to verify the B-B effect with an improved experimental device which rejected the influence of corona discharges and electric wind around the capacitor by setting the capacitor in the insulator oil contained within a metallic vessel … The experimental results measured by the Honda research group are shown …

V. Putz and K. Svozil,

… predicted that the electron experiences an increase in its rest mass under an intense electromagnetic field …

and the equivalent

… formula with respect to the mass shift of the electron under intense electromagnetic field was discovered by P. Milonni …

Dr. Musha concludes his paper with,

… The theoretical analysis result suggests that the impulsive electric field applied to the dielectric material may produce a sufficient artificial gravity to attain velocities comparable to chemical rockets.

Given Honda R&D’s experimental research findings, this is a major step forward for the Biefeld-Brown effect, and Biefeld-Brown is back on the table as a potential propulsion technology.

We learn two lessons.

First, any theoretical analysis of an experimental result is advanced or handicapped by the contemporary physics. The experimental results remain valid, and at the time of publication, zero point fluctuation (ZPF) was the appropriate theory. However, per Prof. Robert Nemiroff’s stunning 2012 discovery that quantum foam, and thus ZPF, does not exist, the theoretical explanation for the Biefeld-Brown effect needs to be reinvestigated in light of Putz, Svozil and Milonni’s research findings. This is not an easy task, as that part of the foundational legacy physics is now void.

Second, it took decades of Dr. Musha’s own research to correctly advise Honda R&D on how to conduct this type of experimental research with great care and attention to detail. I would advise anyone seriously considering Biefeld-Brown experiments to talk to Dr. Musha first.

Another example of similar lessons relates to the Finnish/Russian Dr. Podkletnov’s gravity shielding spinning superconducting ceramic disc, i.e. an object placed above this spinning disc would lose weight.

I spent years reading and rereading Dr. Podkletnov’s two papers (the 1992 “A Possibility of Gravitational Force Shielding by Bulk YBa2Cu3O7-x Superconductor” and the 1997 “Weak gravitational shielding properties of composite bulk YBa2Cu3O7-x superconductor below 70K under e.m. field”) before I fully understood all the salient observations.

Any theory of Dr. Podkletnov’s experiments must explain four observations: the stationary disc weight loss, the spinning disc weight loss, the weight loss increase along a radial distance, and the weight increase. Other than my own work, I haven’t seen anyone else attempt to explain all four observations within the context of the same theoretical analysis. The most likely inference is that legacy physics does not have the tools to explore Podkletnov’s experiments.

But it gets worse.

Interest in Dr. Podkletnov’s work was destroyed by two papers claiming null results: first, Woods et al. (the 2001 “Gravity Modification by High-Temperature Superconductors”) and second, Hathaway et al. (the 2002 “Gravity Modification Experiments Using a Rotating Superconducting Disk and Radio Frequency Fields”). Reading through these papers, it was very clear to me that neither team was able to faithfully reproduce Dr. Podkletnov’s work.

My analysis of Dr. Podkletnov’s papers shows that the disc is electrified and bi-layered. By bi-layered, I mean that the top side is superconducting and the bottom non-superconducting. Therefore, to get gravity modifying effects, the key to experimental success is that the bottom side needs to be much thicker than the top. Without getting into too much detail, this would introduce asymmetrical field structures, and gravity modifying effects.

The necessary dialog between theoretical explanations and experimental insight is vital to any scientific study. Without this dialog, confounding obstructions arise: theoretically impossible but experiments work, or theoretically possible but experiments don’t work. With respect to Biefeld-Brown, Dr. Musha has completed the first iteration of this dialog.

Above all, we cannot be sure that what we have discovered is correct until we have tested these discoveries under different circumstances. This is especially true for future propulsion technologies, where we cannot depend on legacy physics for guidance and essentially don’t understand what we are looking for.

In the current RSQ (pronounced risk) theory climate, propulsion physics is not a safe career path to select. I do hope that serious researchers reopen the case for both Biefeld-Brown and Podkletnov experiments, and the National Science Foundation (NSF) leads the way by providing funding to do so.

(Originally published in the Huffington Post)

Recent revelations of NASA’s Eagleworks Em Drive caused a sensation on the internet as to why interstellar propulsion can or cannot be possible. The naysayers pointed to shoddy engineering and impossible physics, and the ayes pointed to the physics of the Alcubierre-type warp drives based on General Relativity.

So what is it? Are warp drives feasible? The answer is both yes and no. Allow me to explain.

The empirical evidence of the Michelson-Morley experiment of 1887, explained by what are now known as the Lorentz-FitzGerald Transformations (LFT), proposed by FitzGerald in 1889 and Lorentz in 1892, shows beyond a shadow of a doubt that nothing can move with a velocity greater than the velocity of light. In 1905, Einstein derived the LFT from first principles as the basis for the Special Theory of Relativity (STR).
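One standard way to see the speed limit that follows from the LFT is relativistic velocity addition, w = (u + v)/(1 + uv/c²): composing any two sub-light speeds yields another sub-light speed. The short Python sketch below, with a hypothetical helper name, applies ten successive boosts of 0.5c and shows the speed creeping toward, but never reaching, c.

```python
C = 299_792_458.0  # speed of light in vacuum, m/s

def add_velocities(u: float, v: float) -> float:
    """Compose two collinear speeds per special relativity: (u + v) / (1 + u*v/c^2)."""
    return (u + v) / (1.0 + u * v / C**2)

# Repeatedly boosting by 0.5c approaches, but never reaches, c.
speed = 0.0
for step in range(10):
    speed = add_velocities(speed, 0.5 * C)
    print(f"after boost {step + 1}: {speed / C:.6f} c")
```

Two boosts of 0.5c give 0.8c rather than 1.0c; the Newtonian sum u + v is recovered only when uv/c² is negligible.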

So if nothing can travel faster than light, why does the Alcubierre-type warp drive matter? The late Prof. Morris Kline explained in his book Mathematics: The Loss of Certainty that mathematics has become so powerful that it can now be used to prove anything, hence the loss of certainty in the value of these mathematical models. The antidote for this is to stay close to the empirical evidence.

My good friend Dr. Andrew Beckwith (Prof., Chongqing University, China) explains that there are axiomatic problems with the Alcubierre-type warp drive theory. Basically, the implied axioms (or starting assumptions of the mathematics) require a multiverse, or multiple universes, but the mathematics is based on a single universe. Thus, even though the mathematics appears to be sound, its axioms contradict this mathematics. As Dr. Beckwith states, “reductio ad absurdum”. For now, this unfortunately means that there is no such thing as a valid warp drive theory. LFT prevents this.

For a discussion of other problems in physical theories please see my peer reviewed 2013 paper “New Evidence, Conditions, Instruments & Experiments for Gravitational Theories” published in the Journal of Modern Physics. In this paper I explain how General Relativity can be used to propose some very strange ideas, and therefore, claiming that something is consistent with General Relativity does not always lead to sensible outcomes.

The question we should be asking is not whether we can travel faster than light (FTL), but how we can bypass LFT. In other words, our focus should not be on how to travel but on how to effect destination arrival.

Let us take one step back. Since Einstein, physicists have been working on a theory of everything (TOE). Logic dictates that a true TOE must be able to derive from first principles why conservation of mass-energy and conservation of momentum hold. If these theories cannot, they cannot be TOEs. Unfortunately, all existing TOEs have these conservation laws as their starting axioms and therefore are not true TOEs. The importance of this requirement is that if we cannot explain why conservation of momentum is true, as Einstein did with LFT, how do we know how to apply it in developing interstellar propulsion engines? Yes, we have to be that picky, or else we will be throwing millions if not billions of dollars in funding into something that probably won’t work in practice.

Is a new physics required to achieve interstellar propulsion? Does a new physics exist?

In 2007, after extensive numerical modeling, I discovered the massless formula for gravitational acceleration, g=τc^2, where τ (tau) is the change in the time dilation transformation (dimensionless LFT) divided by the distance over which that change occurs. (The error in the modeled gravitational acceleration is less than 6 parts per million.) This proves that mass is not required for gravitational theories, falsifying the RSQ (Relativity, String & Quantum) theories on gravity. There are two important consequences of this finding: (1) we now have a new propulsion equation, and (2) legacy or old physics cannot deliver.
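As an arithmetic check on g=τc^2, the Python sketch below estimates τ near Earth's surface and multiplies it by c². It is an illustration under a stated assumption, not the author's numerical model: because the text does not give a time-dilation profile, the standard weak-field (Schwarzschild) clock-rate factor sqrt(1 − 2GM/(rc²)) is borrowed as a stand-in, so mass enters only through that assumed profile. τ is taken as the change in this dimensionless factor per metre of height, estimated by a central difference, and the deficit 1 − sqrt(1 − x) is computed in a rearranged form to avoid floating-point cancellation. The helper names are hypothetical.

```python
import math

C = 299_792_458.0          # speed of light, m/s
GM_EARTH = 3.986004418e14  # Earth's standard gravitational parameter, m^3/s^2
R_EARTH = 6.371e6          # mean Earth radius, m

def dilation_deficit(r: float) -> float:
    """Return 1 - sqrt(1 - x), with x = 2GM/(r c^2), without cancellation.

    ASSUMPTION: the Schwarzschild clock-rate factor sqrt(1 - x) stands in
    for the time-dilation profile; the original text does not specify one.
    Uses the identity 1 - sqrt(1 - x) = x / (1 + sqrt(1 - x)).
    """
    x = 2.0 * GM_EARTH / (r * C**2)
    return x / (1.0 + math.sqrt(1.0 - x))

def tau(r: float, h: float = 1.0) -> float:
    """Change in the clock-rate factor per metre of height (central difference).

    The factor is 1 - deficit, so its outward gradient is
    (deficit(r - h) - deficit(r + h)) / (2h).
    """
    return (dilation_deficit(r - h) - dilation_deficit(r + h)) / (2.0 * h)

t = tau(R_EARTH)
g_from_tau = t * C**2               # g = tau * c^2
g_newton = GM_EARTH / R_EARTH**2    # Newtonian reference value

print(f"tau       = {t:.4e} per metre")
print(f"tau * c^2 = {g_from_tau:.4f} m/s^2")
print(f"GM / r^2  = {g_newton:.4f} m/s^2")
```

With these inputs τ comes out near 1.09×10^-16 per metre, and τc² agrees with the Newtonian GM/r² (about 9.82 m/s², ignoring Earth's rotation) to well under a part per million.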

But gravity modification per g=τc^2 is still based on motion, and therefore, constrained by LFT. That is, gravity modification cannot provide for interstellar propulsion. For that we require a different approach, the new physics.

At least from the perspective of propulsion physics, having a theoretical approach for a single formula g=τc^2 would not satisfy the legacy physics community that a new physics is warranted or even exists. Therefore, based on my 16 years of research involving extensive numerical modeling with the known empirical data, in 2014, I wrote six papers laying down the foundations of this new physics:

1. “A Universal Approach to Forces”: There is a 4th approach to forces that is not based on Relativity, String or Quantum (RSQ) theories.

2. “The Variable Isotopic Gravitational Constant”: The Gravitational Constant G is not a constant and is independent of mass; therefore, gravity modification without particle physics is feasible.

3. “A Non Standard Model Nucleon/Nuclei Structure”: Falsifies the Standard Model and proposes Variable Electric Permittivity (VEP) matter.

4. “Replacing Schrödinger”: Proposes that the Schrödinger wave function is a good but not an exact model.

5. “Particle Structure”: Proposes that the Standard Model be replaced with the Component Standard Model.

6. “Spectrum Independence”: Proposes that photons are spectrum independent, and how to accelerate nanowire technology development.

This work, published under the title Super Physics for Super Technologies, is available for all to review, critique and test its validity. (A non-intellectual emotional gut response is not a valid criticism.) That is, the new physics does exist. And the outcome relevant to interstellar propulsion is that subspace exists, and this is how Nature implements probabilities. Note that neither quantum nor string theories ask the question “How does Nature implement probabilities?”, and therefore they are unable to provide an answer. The proof of subspace can be found in how the photon electromagnetic energy is conserved inside the photon.

Subspace is probabilistic and therefore does not have the time dimension. In other words, destination arrival is not achieved by motion-based travel, which LFT constrains, but is effected by probabilistic localization. We therefore have to figure out navigation in subspace, or vectoring and modulation. Vectoring is the ability to determine direction, and modulation is the ability to determine distance. This approach is new and has an enormous potential of being realized, as it is not constrained by LFT.

Yes, interstellar propulsion is feasible, but not in the form of the warp drives we understand today. As of 2012, there were only about 50 of us on this planet who were working, or had worked, toward solving the gravity modification and interstellar propulsion challenge.

So the question is not, whether gravity modification or interstellar propulsion is feasible, but will we be the first nation to invent this future?

(Originally published in the Huffington Post)

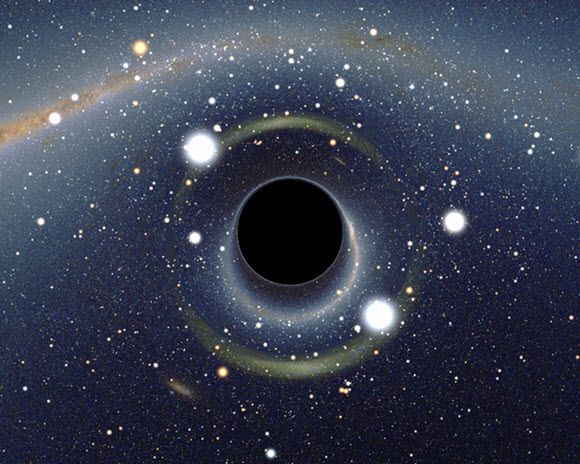

Simulated view of a black hole (credit: Alain Riazuelo of the French National Research Agency, via Wikipedia)

Consider how many natural laws and constants, both physical and chemical, have been discovered since the time of the early Greeks. Hundreds of thousands of natural laws have been unveiled in man’s never-ending quest to understand Earth and the universe.

I couldn’t name 1% of the laws of nature and physics. Here are just a few that come to mind from my high school science classes. I shall not offer a bulleted list, because that would suggest that these random references to laws and constants are organized or complete. It doesn’t even scratch the surface…

Newton’s Law of force (F=MA), Newton’s law of gravity, The electromagnetic force, strong force, weak force, Avogadro’s Constant, Boyle’s Law, the Lorentz Transformation, Maxwell’s equations, laws of thermodynamics, E=mc^2, particles behave as waves, superpositioning of waves, universe inflation rate, for every action… etc, etc.

For some time, physicists, astronomers, chemists, and even theologians have pondered an interesting puzzle: Why is our universe so carefully tuned for our existence? And not just our existence—After all, it makes sense that our stature, our senses and things like muscle mass and speed have evolved to match our environment. But here’s the odd thing—If even one of a great many laws, properties or constants were off by even a smidgen, the whole universe could not exist—at least not in a form that could support life as we imagine it! Even the laws and numbers listed above. All of creation would not be here, if any of these were just a bit off…

Well, there might be something out there, but it is unlikely to have resulted in life—not even life very different than ours. Why? Because without the incredibly unique balance of physical and chemical properties that we observe, matter would not coalesce into stars, planets would not crunch into balls that hold an atmosphere, and they would not clear their path to produce a stable orbit for eons. Compounds and tissue would not bind together. In fact, none of the things that we can imagine could exist.

Of course, theologians have a pat answer. In one form or another, religions answer all of cosmology by stating a matter of faith: “The universe adheres to God’s design, and so it makes sense that everything works”. This is a very convenient explanation, because these same individuals forbid the obvious question: ‘Who created God?’ and ‘What existed before God?’ Just ask Bill Nye or Bill Maher. They have accepted offers to debate those who feel that God created Man instead of the other way around.

Scientists, on the other hand, take pains to distance themselves from theological implications. They deal in facts and observable phenomena. Then, they form hypotheses and begin testing. That’s what we call the scientific method.

If any being could evolve without the perfect balance of laws and constants that we observe, it would be a single intelligence distributed amongst a cold cloud of gas. In fact, a universe that is not based on many of the observed numbers (including the total mass of everything in existence) probably could not be stable for very long.

Does this mean that it’s all about you?! Are you, Dear reader, the only thing in existence?—a living testament to René Descartes?


Don’t discount that notion. Cosmologists acknowledge that your own existence is the only thing of which you can be absolutely sure. (“I think. Therefore, I am”). If you cannot completely trust your senses as a portal to reality, then no one else provably exists. But, most scientists (and the rest of us, too) are willing to assume that we come from a mother and father and that the person in front of us exists as a separate thinking entity. After all, if we can’t start with this assumption, then the rest of physics and reality hardly matters, because we are too far removed from the ‘other’ reality to even contemplate what is outside of our thoughts.

Two questions define the field of cosmology—How did it all begin and why does it work? Really big questions are difficult to test, and so we must rely heavily on tools and observation:

• Is the Big Bang a one-off event, or is it one in a cycle of recurring events?

• Is there anything beyond the observable universe? (something apart from the Big Bang)

• Does natural law observed in our region of the galaxy apply everywhere?

• Is there intelligent life beyond Earth?

Having theories that are difficult to test does not mean that scientists aren’t making progress. Even in the absence of frequent testing, a lot can be learned from observation. Prior to 1992, no planet had ever been observed or detected outside of our solar system. For this reason, we had no idea of the likelihood that planets form and take orbit around stars.

Today, almost 2000 exoplanets have been discovered with 500 of them belonging to multiple planetary systems. All of these were detected by indirect evidence—either the periodic eclipsing of light from a star, which indicates that something is in orbit around it, or subtle wobbling of the star itself, which indicates that it is shifting around a shared center of gravity with a smaller object. But wait! Just this month, a planet close to our solar system (about 30 light years away) was directly observed. This is a major breakthrough, because it gives us an opportunity to perform spectral analysis of the planet and its atmosphere.
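The "periodic eclipsing" method can be made concrete with the simplest geometric model: a planet crossing its star blocks a fraction of the starlight roughly equal to the ratio of their disk areas, (Rp/R*)². The Python sketch below ignores limb darkening and grazing transits and uses standard radii for Jupiter, Earth and the Sun; it shows why a Jupiter-size planet dims a Sun-like star by about 1%, while an Earth-size planet dims it by less than 0.01%. The function name is illustrative.

```python
R_SUN_KM = 695_700.0     # nominal solar radius, km
R_JUPITER_KM = 71_492.0  # Jupiter equatorial radius, km
R_EARTH_KM = 6_371.0     # Earth mean radius, km

def transit_depth(planet_radius_km: float, star_radius_km: float) -> float:
    """Fractional dip in starlight while the planet is in front of the star.

    Simplest geometric model: the planet blocks a disk of area pi*Rp^2 out
    of the star's pi*Rs^2, so depth = (Rp/Rs)^2 (limb darkening ignored).
    """
    return (planet_radius_km / star_radius_km) ** 2

print(f"Jupiter-size planet, Sun-like star: {transit_depth(R_JUPITER_KM, R_SUN_KM):.4%}")
print(f"Earth-size planet,   Sun-like star: {transit_depth(R_EARTH_KM, R_SUN_KM):.4%}")
```

The hundredfold gap between the two depths is one reason large planets dominated the early exoplanet discoveries.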

Is this important? That depends on your goals and point of view. For example, one cannot begin to speculate on the chances for intelligent life if we have no idea how common or unusual it is for a star to be orbited by planets. It is a critical factor in the Drake Equation. (I am discounting the possibility of a life form living within a sun, not because it is impossible or because I am a human-chauvinist, but because it would not likely be a life form that we will communicate with in this millennium.)
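The Drake Equation multiplies seven factors, N = R*·fp·ne·fl·fi·fc·L, so the fraction of stars with planets (fp), the quantity exoplanet surveys now constrain, scales the estimate directly. In the Python sketch below, every input value is a hypothetical placeholder chosen only to make the arithmetic easy to follow; none is an estimate from this article or the literature.

```python
from math import prod

def drake(r_star: float, f_p: float, n_e: float, f_l: float,
          f_i: float, f_c: float, lifetime: float) -> float:
    """Drake equation: N = R* * f_p * n_e * f_l * f_i * f_c * L.

    r_star   -- rate of star formation in the galaxy (stars per year)
    f_p      -- fraction of stars that have planets
    n_e      -- planets per planetary system that could support life
    f_l      -- fraction of those on which life appears
    f_i      -- fraction of those that develop intelligence
    f_c      -- fraction of those that produce detectable signals
    lifetime -- years such a civilization remains detectable (L)
    """
    return prod([r_star, f_p, n_e, f_l, f_i, f_c, lifetime])

# HYPOTHETICAL placeholder inputs, chosen only to show the arithmetic.
inputs = dict(r_star=1.0, f_p=0.5, n_e=2.0, f_l=1.0,
              f_i=0.01, f_c=0.01, lifetime=10_000)
baseline = drake(**inputs)

# N is linear in f_p: halving the planet fraction halves the estimate.
halved = drake(**{**inputs, "f_p": 0.25})
print(baseline, halved)
```

Because N is a plain product, an order-of-magnitude uncertainty in any single factor carries straight through to the result.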

Of course, progress sometimes raises completely new questions. In the 1970s, Frank Drake and Carl Sagan began exploring the changing rate of expansion between galaxies. This created an entirely new question and field of study related to the search for dark matter.


Concerning the titular question: “Why is the universe fine-tuned for life?”, cosmologist Stephen Hawking offered an explanation last year that might help us to understand. At last, it offers a theory, even if it is difficult to test. The media did their best to make Professor Hawking’s explanation digestible, explaining it something like this [I am paraphrasing]:

There may be multiple universes. We observe only the one in which we exist. Since our observations are limited to a universe with physical constants and laws that resulted in us—along with Stars, planets, gravity and atmospheres, it seems that the conditions for life are all too coincidental. But if we imagine countless other universes outside of our realm (very few with life-supporting properties), then the coincidence can be dismissed. In effect, as observers, we are regionalized into a small corner.

The press picked up on this explanation with an unfortunate headline blaring that the famous Professor had proven that God does not exist. Actually, Hawking said that miracles stemming from religious beliefs are “not compatible with science”. Although he is an atheist, he said nothing about God not existing. He simply offered a theory to explain an improbable coincidence.


I am not a cosmologist. I only recently came to understand that it is the science of origins and comprises astronomy, particle physics, chemistry and philosophy. (But not religion; please don’t go there!) If my brief introduction piques your interest, a great place to spread your wings is Tim Maudlin’s recent article in Aeon Magazine, “The Calibrated Cosmos”. Tim succinctly articulates the problem of a fine-tuned universe in the very first paragraph:

“Theories now suggest that the most general structural elements of the universe — the stars and planets, and the galaxies that contain them — are the products of finely calibrated laws and conditions that seem too good to be true.”

And: “Had the constants of nature taken slightly different values, we would not be here.”

The article delves into the question thoroughly, while still reading at a level commensurate with Sunday drivers like you and me. If you write to Tim, tell him I sent you. Tell him that his beautifully written article has added a whole new facet to my appreciation for being!

Philip Raymond is Co-Chair of The Cryptocurrency Standards Association and CEO of Vanquish Labs.

This is his fourth article for Lifeboat Foundation and his first as an armchair cosmologist.


The Planetary Society’s LightSail launched yesterday, May 20th, 2015.

Video: [LightSail from Carl Sagan to Now](https://www.youtube.com/watch?v=-cEXKu_Onlk)

Until 2006 our Solar System consisted essentially of a star, planets, moons, and very much smaller bodies known as asteroids and comets. In 2006 the International Astronomical Union’s (IAU) Division III Working Committee addressed scientific issues, and the Planet Definition Committee addressed cultural and social issues, with regard to planet classifications. They introduced the “pluton” for bodies similar to planets but much smaller.

The IAU set down three rules to differentiate between planets and dwarf planets. First, the object must be in orbit around a star, while not being itself a star. Second, the object must be large enough (or more technically correct, massive enough) for its own gravity to pull it into a nearly spherical shape. The shape of objects with mass above 5×10^20 kg and diameter greater than 800 km would normally be determined by self-gravity, but all borderline cases would have to be established by observation.

Third, plutons or dwarf planets, are distinguished from classical planets in that they reside in orbits around the Sun that take longer than 200 years to complete (i.e. they orbit beyond Neptune). Plutons typically have orbits with a large orbital inclination and a large eccentricity (noncircular orbits). A planet should dominate its zone, either gravitationally, or in its size distribution. That is, the definition of “planet” should also include the requirement that it has cleared its orbital zone. Of course this third requirement automatically implies the second. Thus, one notes that planets and plutons are differentiated by the third requirement.
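The three rules above amount to a short decision procedure. The Python sketch below encodes them as described here, not as the IAU's official wording: it treats the 5×10^20 kg and 800 km guideline as a hard cutoff (the text notes borderline cases need observation), and the `Body` fields and result labels are hypothetical names chosen for illustration. Approximate masses and diameters for Earth, Pluto and Halley's Comet serve as examples.

```python
from dataclasses import dataclass

# Guideline thresholds quoted in the text for a body rounded by self-gravity.
MASS_GUIDELINE_KG = 5e20
DIAMETER_GUIDELINE_KM = 800.0

@dataclass
class Body:
    """Hypothetical record of the properties the three rules look at."""
    name: str
    orbits_star: bool     # rule 1: orbits a star ...
    is_star: bool         # ... while not itself being a star
    mass_kg: float        # rule 2 inputs
    diameter_km: float
    cleared_orbit: bool   # rule 3: has cleared / dominates its orbital zone

def classify(body: Body) -> str:
    """Apply the three rules in order.

    ASSUMPTION: the mass/diameter guideline is a hard cutoff here, although
    borderline cases would in practice be settled by observation.
    """
    if body.is_star or not body.orbits_star:
        return "fails rule 1"
    rounded = (body.mass_kg > MASS_GUIDELINE_KG
               and body.diameter_km > DIAMETER_GUIDELINE_KM)
    if not rounded:
        return "small body"  # e.g. comets and asteroids
    return "planet" if body.cleared_orbit else "pluton"

# Approximate values, for illustration only.
examples = [
    Body("Earth", True, False, 5.97e24, 12_742.0, True),
    Body("Pluto", True, False, 1.30e22, 2_377.0, False),
    Body("Halley's Comet", True, False, 2.2e14, 11.0, False),
]
for b in examples:
    print(f"{b.name}: {classify(b)}")
```

Checking rule 2 before rule 3 mirrors the text's observation that clearing an orbit implies being massive enough, so only rounded bodies reach the planet/pluton split.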

As we are soon to become a space-faring civilization, we should rethink these cultural and social issues differently, by subtraction or addition. By subtraction: what if an object breaks one of the other requirements? Comets and asteroids break the second requirement, that the object must be large enough. Breaking the first requirement, which the IAU chose not to address at the time, would give planet-sized bodies not orbiting a star. From a socio-cultural perspective, one could suggest that these be named “darktons” (from dark + plutons): “dark” because without orbiting a star these objects would not be easily visible, and “tons” because in deep space, without much matter, these bodies could not meet the third requirement of being able to dominate their zone.

Taking this socio-cultural exploration a step further, by addition, a fourth requirement is that of life-sustaining planets. The scientific evidence suggests that life-sustaining bodies would be planet-sized, in order to retain a stable atmosphere. Thus, a life-sustaining planet would be named a “zoeton”, from the Greek zoe for life. For example, Earth is a zoeton, while Mars may have been one.

Again by addition, one could define, from the Latin aurum for gold, an “auton” as any heavenly body (comet, asteroid, pluton or planet) whose primary value is of mineral or mining interest. Thus, Jupiter is not a zoeton, but could be an auton if one extracts hydrogen or helium from it. Another auton is 55 Cancri e, a planet 40 light years away, for mining diamonds with an estimated worth of $26.9×10³⁰. The Earth is both a zoeton and an auton, as it both sustains life and has substantial mining interests. Not all plutons or planets could be autons. For example, Pluto would be too cold and frozen for mining to be economical, and therefore frozen darktons would most likely not be autons.

At that time the IAU also did not address the upper limit for a planet’s mass or size. Not restricting ourselves to planetary science widens our socio-cultural exploration. A social consideration would be the maximum gravitational pull under which a human civilization could survive, sustain itself and flourish. For example, for discussion’s sake, a gravitational pull greater than 2× Earth’s, or 2g, could be considered the upper limit. Therefore, planets with gravitational pulls larger than 2g would be named “kytons”, after the Antikythera mechanism, an ancient mechanical computer, as only machines could survive and sustain such harsh conditions over long periods of time. Jupiter would be an example of such a kyton.
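Whether a body crosses the proposed 2g line can be checked with Newton’s surface-gravity formula, g = GM/R². The sketch below is a minimal illustration, assuming rounded published masses and radii (not taken from the original text), a non-rotating body, and, for Jupiter, the conventional 1-bar “surface”. The labels simply apply the 2g discussion threshold above.

```python
G = 6.674e-11        # Newtonian gravitational constant, m^3 kg^-1 s^-2
G0 = 9.80665         # standard Earth gravity, m/s^2
KYTON_LIMIT_G = 2.0  # the 2g discussion threshold proposed in the text

# (mass in kg, radius in m), rounded published values.
# Jupiter's radius is its equatorial radius at the 1-bar pressure level.
bodies = {
    "Earth": (5.972e24, 6.371e6),
    "Mars": (6.417e23, 3.3895e6),
    "Jupiter": (1.898e27, 7.1492e7),
}


def surface_gravity_g(mass_kg: float, radius_m: float) -> float:
    """Surface gravity G*M/R^2, in multiples of Earth's standard gravity.

    Ignores rotation and oblateness, so it is only an approximation.
    """
    return G * mass_kg / radius_m**2 / G0


for name, (m, r) in bodies.items():
    g = surface_gravity_g(m, r)
    label = "kyton" if g > KYTON_LIMIT_G else "within 2g"
    print(f"{name}: {g:.2f} g -> {label}")
```

With these values Earth comes out at about 1.0g, Mars at about 0.38g, and Jupiter at roughly 2.5g, which is why Jupiter falls on the kyton side of the proposed limit.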

Are there any bodies between the gaseous planet Jupiter and brown dwarfs? Yes, they have been named Y-dwarfs. NASA found one with a surface temperature of only about 80 degrees Fahrenheit, below that of the human body. It is possible that these Y-dwarfs could be kytons, and also autons as a relatively safe (compared to stars) source of hydrogen.

Taking a different turn, to complete the space-faring vocabulary, one can classify transportation by the order of magnitude of the distances it covers. Atmospheric transportation, whether it relies on the air for combustion intake or for winged flight, can be termed “atmosmax”, from “atmosphere” and the Greek “amaxi” for car or vehicle. Any vehicle that is bound by the distances of the solar system but does not require an atmosphere would be a “solarmax”. Any vehicle capable of interstellar travel would be a “starship”, and one capable of intergalactic travel would be a “galactica”.

We now have socio-cultural handles for becoming a space-faring civilization: a vocabulary that facilitates a common understanding and usage. Exploration implies discovery. Discovery means new ideas to tackle new environments, new situations and new rules. This can only lead to positive outcomes, and positive outcomes mean new wealth, new investments and new jobs. Let’s go forth and add to these cultural handles.

—

Ben Solomon is a Committee Member of the Nuclear and Future Flight Propulsion Technical Committee, American Institute of Aeronautics & Astronautics (AIAA), and author of An Introduction to Gravity Modification and Super Physics for Super Technologies: Replacing Bohr, Heisenberg, Schrödinger & Einstein (Kindle Version)