Don’t miss another chance to win a powerful GPU.

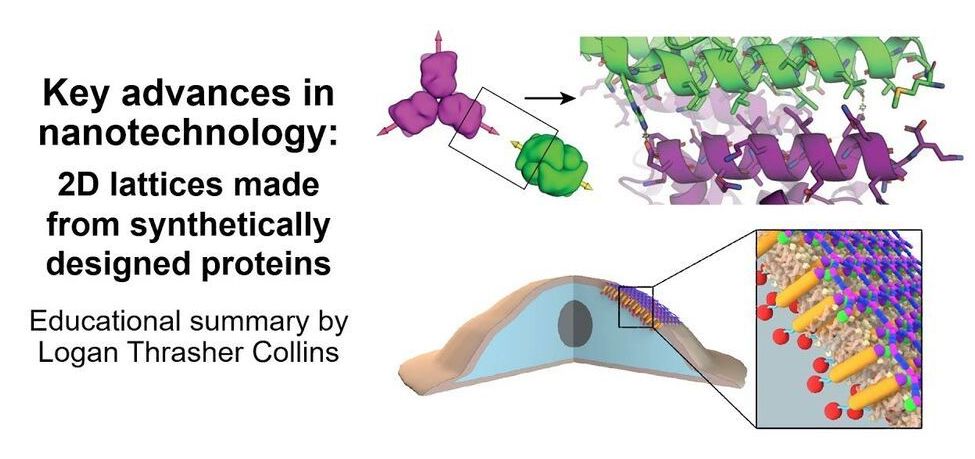

Check out my short video in which I explain some super exciting research in the area of nanotechnology: de novo protein lattices! I specifically discuss a journal article by Ben-Sasson et al. titled “Design of biologically active binary protein 2D materials”.

Here, I explain an exciting nanotechnology paper “Design of biologically active binary protein 2D materials” (https://doi.org/10.1038/s41586-020-03120-8).

Though I am not involved in this particular research myself, I have worked in adjacent areas such as de novo engineering of aggregating antimicrobial peptides, synthetic biology, nanotechnology-based tools for neuroscience, and gene therapy. I am endlessly fascinated by this kind of computationally driven de novo protein design and would love to incorporate it in my own research at some point in the future.

I am a PhD candidate at Washington University in St. Louis and the CTO of the startup company Conduit Computing. I am also a published science fiction writer and a futurist. To learn more about me, check out my website: https://logancollinsblog.com/.

In the search for a unifying quantum gravity theory that would reconcile general relativity with quantum theory, it turns out that quantum theory is the more fundamental of the two, after all. Quantum mechanical principles, some physicists argue, apply to all of reality (not only the realm of the ultra-tiny), and numerous experiments confirm that assumption. After a century of Einsteinian relativistic physics going unchallenged, a new kid on the block, Computational Physics, one of the frontrunners for quantum gravity, states that spacetime is a flat-out illusion and that what we call physical reality is actually a construct of information within [quantum neural] networks of conscious agents. In light of the physics of information, computational physicists eye a new theory as an “It from Qubit” offspring, necessarily incorporating consciousness in the new theoretic models and deeming spacetime, mass-energy as well as gravity emergent from information processing.

In fact, I expand on foundations of such new physics of information, also referred to as [Quantum] Computational Physics, Quantum Informatics, Digital Physics, and Pancomputationalism, in my recent book The Syntellect Hypothesis: Five Paradigms of the Mind’s Evolution. The Cybernetic Theory of Mind I’m currently developing is based on reversible quantum computing and projective geometry at large. This ontological model, a “theory of everything” of mine, agrees with certain quantum gravity contenders, such as M-Theory on fractal dimensionality and Emergence Theory on the code-theoretic ontology, but admittedly goes beyond all current models by treating space-time, mass-energy and gravity as emergent from information processing within a holographic, multidimensional matrix with the Omega Singularity as the source.

There are plenty of cosmological anomalies of late that make us question the traditional interpretation of relativity. First off, the rate of the expansion of our Universe, or the Hubble constant, is the subject of a very important discrepancy: its value changes based on how scientists try to measure it. (It was the discovery of this expansion that led Albert Einstein (1879–1955) to reportedly call his cosmological constant “the biggest blunder” of his scientific career.) New results from the Hubble Space Telescope have now “raised the discrepancy beyond a plausible level of chance,” according to one of the latest papers published in the Astrophysical Journal. We are stumbling more and more often on all kinds of discrepancies in relativistic physics and the standard cosmological model. Not only is the Hubble constant “constantly” called into question, but even the speed of light, on which Einsteinian theories are based, shows such discrepancies when measured by different methods and turns out to be not really “constant.”

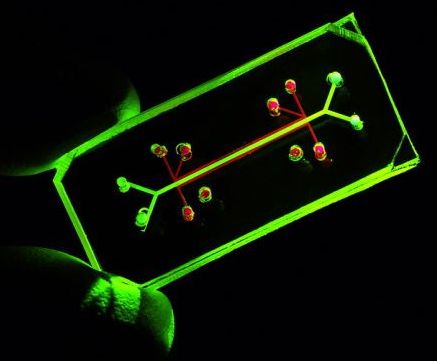

Toshiba’s Cambridge Research Laboratory has achieved quantum communications over optical fibres exceeding 600 km in length, three times further than the previous world record distance.

The breakthrough will enable long distance, quantum-secured information transfer between metropolitan areas and is a major advance towards building a future Quantum Internet.

The term “Quantum Internet” describes a global network of quantum computers, connected by long distance quantum communication links. This technology will improve the current Internet by offering several major benefits – such as the ultra-fast solving of complex optimisation problems in the cloud, a more accurate global timing system, and ultra-secure communications. Personal data, medical records, bank details, and other information will be physically impossible for hackers to intercept. Several large government initiatives to build a Quantum Internet have been announced in China, the EU and the USA.

U.S. chip goliath Qualcomm has said it is open to the idea of investing in U.K. chip designer Arm if the company’s $40 billion sale to Nvidia is blocked by regulators, according to The Telegraph newspaper.

Qualcomm’s incoming CEO, Cristiano Amon, said Qualcomm would be willing to buy a stake in Arm alongside other industry investors if SoftBank, Arm’s current owner, listed the company on the stock market instead of selling it to Nvidia, the newspaper reported Sunday.

“If Arm has an independent future, I think you will find there is a lot of interest from a lot of the companies within the ecosystem, including Qualcomm, to invest in Arm,” Amon said. “If it moves out of SoftBank and it goes into a process of becoming a publicly-traded company, [with] a consortium of companies that invest, including many of its customers, I think those are great possibilities.”

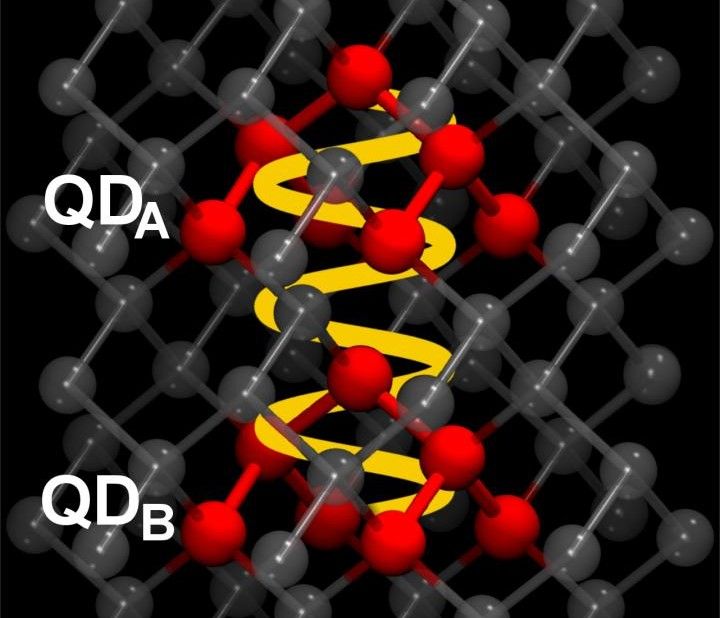

“Conditional witnessing” technique makes many-body entangled states easier to measure.

Quantum error correction – a crucial ingredient in bringing quantum computers into the mainstream – relies on sharing entanglement between many particles at once. Thanks to researchers in the UK, Spain and Germany, measuring those entangled states just got a lot easier. The new measurement procedure, which the researchers term “conditional witnessing”, is more robust to noise than previous techniques and minimizes the number of measurements required, making it a valuable method for testing imperfect real-life quantum systems.

Quantum computers run their algorithms on quantum bits, or qubits. These physical two-level quantum systems play an analogous role to classical bits, except that instead of being restricted to just “0” or “1” states, a single qubit can be in any combination of the two. This extra information capacity, combined with the ability to manipulate quantum entanglement between qubits (thus allowing multiple calculations to be performed simultaneously), is a key advantage of quantum computers.
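To make the idea of “any combination of the two” concrete, here is a minimal sketch in plain Python. It is an illustration only, not code from the research described above: the helper names (`normalize`, `probabilities`, `tensor`) are hypothetical, and a qubit is assumed to be represented simply as a list of complex amplitudes.

```python
import math

def normalize(amps):
    """Scale a list of amplitudes so the outcome probabilities sum to 1."""
    norm = math.sqrt(sum(abs(a) ** 2 for a in amps))
    return [a / norm for a in amps]

def probabilities(amps):
    """Born rule: the probability of each outcome is |amplitude|^2."""
    return [abs(a) ** 2 for a in amps]

def tensor(a, b):
    """Joint state of two independent qubits (outcome order: 00, 01, 10, 11)."""
    return [x * y for x in a for y in b]

# A single qubit in an equal combination of "0" and "1".
plus = normalize([1, 1])
single_probs = probabilities(plus)

# Two independent qubits: all four outcomes 00, 01, 10, 11 are possible.
product_probs = probabilities(tensor(plus, plus))

# An entangled (Bell) state: only 00 and 11 ever occur, so the two
# measurement results are perfectly correlated.
bell = normalize([1, 0, 0, 1])
bell_probs = probabilities(bell)

print(single_probs)
print(product_probs)
print(bell_probs)
```

The Bell state cannot be written as a `tensor` of two single-qubit states, which is what distinguishes entanglement from two qubits that merely happen to be in superposition.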

The problem with qubits

However, qubits are fragile. Virtually any interaction with their environment can cause them to collapse like a house of cards and lose their quantum correlations – a process called decoherence. If this happens before an algorithm finishes running, the result is a mess, not an answer. (You would not get much work done on a laptop that had to restart every second.) In general, the more qubits a quantum computer has, the harder they are to keep quantum; even today’s most advanced quantum processors still have fewer than 100 physical qubits.
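As a rough illustration of why more qubits are harder to keep quantum, the sketch below uses a toy dephasing model. Everything in it is an assumption made for illustration: the function name `remaining_coherence` is hypothetical, the coherence time and runtime are made-up numbers, and the model assumes each qubit loses phase coherence independently and exponentially, so that a fully entangled (GHZ-like) state of n qubits decays n times faster than a single qubit. Real devices are considerably more complicated.

```python
import math

def remaining_coherence(t, t2, n_qubits=1):
    """Toy dephasing model: fraction of coherence left after time t.

    Assumes each qubit dephases independently at rate 1/t2, so an
    n-qubit entangled (GHZ-like) state decays n times faster.
    """
    return math.exp(-n_qubits * t / t2)

T2 = 100e-6       # hypothetical coherence time: 100 microseconds
RUNTIME = 20e-6   # hypothetical algorithm runtime: 20 microseconds

for n in (1, 10, 50):
    print(n, remaining_coherence(RUNTIME, T2, n))
```

Under these assumed numbers, a single qubit keeps roughly 82% of its coherence by the time the algorithm ends, ten entangled qubits keep about 14%, and fifty keep well under 0.01%.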