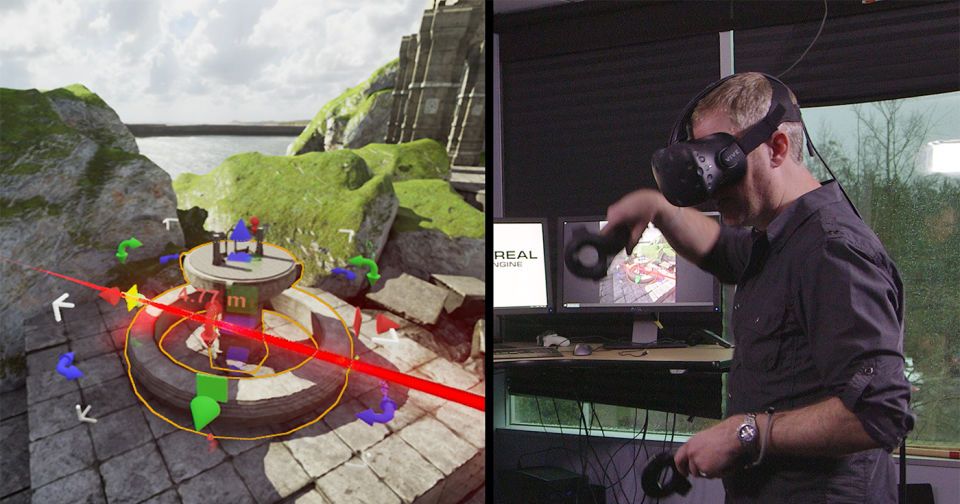

Meet the Unreal Engine VR editor: a way to build VR games from inside VR.

Epic Games has been teasing “the future of VR development” recently, and the team is finally ready to tell everyone what that is: creating virtual reality content within virtual reality itself, using the full version of its Unreal Engine 4. Epic cofounder Tim Sweeney says that although the company has supported the likes of the Oculus Rift from the outset, the irony is that the VR experiences released so far have all been developed with the same tools used for traditional video games. “Now you can go into VR, have the entire Unreal editor functioning and do it live,” he says. “It almost gives you god-like powers to manipulate the world.”

So rather than using the same 2D tools (a keyboard, mouse and computer monitor) employed in traditional game development, people making VR experiences in Unreal can now use a head-mounted display and motion controllers to manipulate objects in 3D space. “Your brain already knows how to do this stuff because we all have an infinite amount of experience picking up and moving 3D objects,” Sweeney says. “The motions you’d do in the real world, you’d do in the editor and in the way you’d expect to: intuitively.”

Imagine walking around an environment you’re creating in real time, like a carpenter surveying his or her progress while building a house. Looking around, you notice that the pillar you dropped in place earlier is blocking part of the view through a window you just added, so there is no longer a clear line of sight to the snowcapped mountain on the horizon. With the VR update for Unreal Engine 4, you can pick the pillar up with your hands and adjust its placement until it’s right.
