Tech development is no longer a linear process, and businesses have to respond accordingly.

“This month, urban thinkers from the United Nations, the European Commission and other organizations are meeting in Brussels to continue a curiously complex attempt: developing a universal definition of the ‘city.’”

Nowadays, the buzzword of the moment is “Artificial Intelligence” and its immediate impact on our advertising sector. As the CEO of Gravity4, I thought it only appropriate to help dissect this new evolutionary phase of our industry as we apply it. There is no doubt that deep learning is our future, and it is on course to have a huge impact on the lives of everyday consumers and on business sectors. In the scientific world, deep learning is built on “deep neural networks”. These belong to a family of artificial intelligence techniques. AI, as it is popularly known, is a term coined back in 1955, and it is a field that Facebook, Google and Microsoft are all now pushing with Herculean force. In fact, according to the International Data Corporation (IDC), the global artificial intelligence market could reach close to $50 billion by 2020.

Getting to Grips With the Terminology

AI refers to a collection of tools and technologies, some of which are relatively new and some of which are time-tested. Together, these techniques allow computers to imitate human intelligence. They include machine learning (such as deep learning), decision trees, if-then rules, and logic.
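The difference between a hand-written if-then rule and a learned model such as a decision tree can be sketched in a few lines of Python. Everything here is hypothetical and chosen only to illustrate the idea: the temperature example, the 25 °C cut-off, the toy data, and the function names. The rule's threshold is fixed by a person, while the decision stump (a decision tree with a single split) picks its threshold from labelled examples.

```python
from typing import List, Tuple


# Hand-written if-then rule: a person encodes the decision logic directly.
# The 25.0 cut-off is a hypothetical, hand-picked value.
def rule_based_label(temperature_c: float) -> str:
    if temperature_c >= 25.0:
        return "hot"
    return "not hot"


# A decision stump (a decision tree with a single split) learns its
# threshold from labelled examples instead of having it written by hand.
def fit_stump(samples: List[Tuple[float, str]]) -> float:
    """Return the candidate threshold that misclassifies the fewest samples."""
    best_threshold, best_errors = samples[0][0], len(samples) + 1
    for candidate, _ in samples:
        # Count samples whose predicted label disagrees with the true label.
        errors = sum(
            1
            for value, label in samples
            if (value >= candidate) != (label == "hot")
        )
        if errors < best_errors:
            best_threshold, best_errors = candidate, errors
    return best_threshold


def stump_label(threshold: float, temperature_c: float) -> str:
    return "hot" if temperature_c >= threshold else "not hot"


# Invented toy data, for illustration only.
data = [(12.0, "not hot"), (18.0, "not hot"), (22.0, "not hot"),
        (27.0, "hot"), (30.0, "hot"), (35.0, "hot")]
threshold = fit_stump(data)
print(threshold)  # 27.0
print(rule_based_label(26.0), stump_label(threshold, 26.0))  # hot not hot
```

On the same input the two approaches can disagree, because the threshold learned from this toy data (27.0) differs from the hand-picked one. A real decision tree would split repeatedly across many features; the single split here is a deliberate simplification.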

Many experts point to the human experience as a key differentiator for accountants. Many people and businesses have unique needs that first- or second-generation AI will be unable to understand. For example, explaining complicated tax forms is currently better done by a human than by AI.

Accountants today have the power to define the future of the profession. The industry must develop the ability to adapt and evolve, and must become proactive about the needs of tomorrow’s clients. Accounting as a profession needs to change, providing consultation and guidance that help clients prepare for and meet the future.

I think offering clients insight and expertise is a better bet than offering a simple service that can be replicated by machines.

Interest in rejuvenation biotechnology is growing rapidly and attracting investors.

- Jim Mellon has invested in Insilico Medicine to enable the company to validate the many molecules discovered using deep learning and to launch multi-modal biomarkers of human aging

Monday, April 10, 2017, Baltimore, MD – Insilico Medicine, Inc., a big data analytics company applying deep learning techniques to drug discovery, biomarker development, and aging research, today announced that it has closed an investment from billionaire biotechnology investor Jim Mellon. Proceeds will be used to perform pre-clinical validation of multiple lead molecules developed using Insilico Medicine’s drug discovery pipelines and to advance research in deep-learned biomarkers of aging and disease.

“Unlike many wealthy business people who rely entirely on their advisors to support their investment in biotechnology, Jim Mellon has spent a substantial amount of time familiarizing himself with recent developments in biogerontology. He does not just come in with the funding, but brings in expert knowledge and a network of biotechnology and pharmaceutical executives, who work very quickly and focus on the commercialization potential. We are thrilled to have Mr. Mellon as one of our investors and business partners,” said Alex Zhavoronkov, PhD, founder and CEO of Insilico Medicine, Inc.

Audio engineering can make computerized customer support lines seem friendlier and more helpful.

Say you’re on the phone with a company and the automated virtual assistant needs a few seconds to “look up” your information. And then you hear it. The sound is unmistakable. It’s familiar. It’s the clickety-clack of a keyboard. You know it’s just a sound effect, but unlike hold music or a stream of company information, it’s not annoying. In fact, it’s kind of comforting.

Michael Norton and Ryan Buell of Harvard Business School studied this idea: that customers appreciate knowing that work is being done on their behalf, even when the only “person” “working” is an algorithm. They call it the labor illusion.

Some weird religious stories involving transhumanism. Expect the conflict between religion and transhumanism to get worse, as closed-minded conservative viewpoints are challenged by radical science and by a future with no need for an afterlife: http://barbwire.com/2017/04/06/cybernetic-messiah-transhumanism-artificial-intelligence/ & http://www.livebytheword.blog/google-directors-push-for-computers-inside-human-brains-is-anti-christ-human-rights-abuse-theologians-explain/ & http://ctktexas.com/pastoral-backstory-march-30th-2017/

By J. Davila Ashcroft

The recent film Ghost in the Shell is a science fiction tale about a young girl (known as Major) who is used as the subject of a Transhumanist/Artificial Intelligence experiment that turns her into a weapon. At first, she complies, believing the company behind the experiment saved her life after her family died. The truth, however, is that the company took her by force while she was a runaway. Major discovers that the company has done the same to others, and this knowledge causes her to turn on it. Throughout the story, the viewer is confronted with the existential questions behind such an experiment as Major struggles with the trauma of not feeling things like the warmth of human skin or the sensations of touch and taste. She feels less than human, though she is told many times that she is better than human. While this is obviously a science fiction story, what might come as a surprise to some is that its subject matter is not just fiction. Transhumanism and Artificial Intelligence on the level explored in this film are all too real, and seem to be only a few years away.

Recently it was reported that Elon Musk of SpaceX fame had a rather disturbing meeting with Demis Hassabis. Hassabis is the man in charge of DeepMind, a project with far-reaching plans akin to the Ghost in the Shell story. DeepMind is a Google-owned company dedicated to exploring and developing all the possible uses of Artificial Intelligence. Musk stated during this meeting that the colonization of Mars is important because Hassabis’ work will make Earth too dangerous for humans. By way of demonstrating how dangerous the goals of DeepMind are, one of its co-founders, Shane Legg, is reported to have stated, “I think human extinction will probably occur, and this technology will play a part in it.”

Legg likely understands what critics of artificial intelligence have been saying for years: such technology is almost certain to become “self-aware”, that is, aware of its own existence and abilities, and capable of developing distinct opinions and protocols that override those of its creators. If artificial intelligence does become sentient, that would mean, for advocates of A.I., that we would then owe them moral consideration. They, however, would owe humanity no such consideration if they perceived us as a danger to their existence, since we could simply disconnect them. In that scenario we would be an existential threat, and what do you think would come of that? Thus Legg’s statement carries an important message.

Already, so-called “deep learning” machines are capable of figuring out solutions that were not programmed into them, and can actually teach themselves to improve. For example, AlphaGo, an artificial intelligence designed to play the game Go, developed its own strategies for winning, strategies which its creators cannot explain and are at a loss to understand.

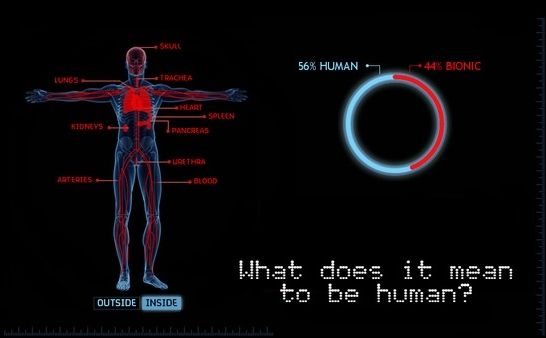

Transhumanist Philosophy

The fact is that many of us have been physically altered in some way. Some of the most common examples are LASIK surgery, hip and knee replacements, and heart valve replacements, and nearly everyone has had vaccines that enhance our normal physical ability to resist certain illnesses and diseases. The question is, how far is too far? How “enhanced” is too enhanced?

“The clothing industry is said to be the world’s second most polluting business, runner-up in grubbiness to oil.”

Although some thinkers use the term “singularity” to refer to any dramatic paradigm shift in the way we think and perceive our reality, in most conversations the Singularity refers to the point at which AI surpasses human intelligence. What that point looks like, though, is subject to debate, as is when it will happen.

In a recent interview with Inverse, Damien Scott, a Stanford University graduate student in business and in energy and earth sciences, offered his definition of the singularity: the moment when humans can no longer predict the motives of AI. Many people envision the singularity as an apocalyptic moment of truth with a clear point of epiphany. Scott doesn’t see it that way.

“We’ll start to see narrow artificial intelligence domains that keep getting better than the best human,” Scott told Inverse. Calculators already outperform us, and there is evidence suggesting that within two to three years AI will outperform the best radiologists in the world. In other words, the singularity is already happening across each specialty and industry touched by AI, which soon enough will be all of them. If you’re of the mind that the singularity means catastrophe for humans, this makes the process akin to the frog in a pot of water that is slowly brought to a boil: it kills us so slowly that we don’t notice it has already begun.

Deep learning owes its rising popularity to its vast applications across an increasing number of fields. From healthcare to finance, automation to e-commerce, the RE•WORK Deep Learning Summit (27–28 April) will showcase the deep learning landscape and its impact on business and society.

Of notable interest is speaker Jeffrey De Fauw, Research Engineer at DeepMind. Prior to joining DeepMind, De Fauw developed a deep learning model to detect diabetic retinopathy (DR) in fundus images, which he will be presenting at the Summit. DR is a leading cause of blindness in the developed world, and diagnosing it is a time-consuming process. De Fauw’s model was designed to reduce diagnostic time and to accurately identify patients at risk, helping them receive treatment as early as possible.

Joining De Fauw will be Brian Cheung, a PhD student at UC Berkeley who is currently working at Google Brain. At the event, he will explain how neural network models are able to extract relevant features from data with minimal feature engineering. Applied to the study of physiology, his research aims to use a retinal lattice model to examine retinal images.