I am a former Microsoft programmer who wrote a book (for a general audience) about the future of software called After the Software Wars. Eric Klien has invited me to post on this blog (Software and the Singularity, AI and Driverless cars). Here are the sections on the Space Elevator. I hope you find these pages food for thought, and I appreciate any feedback.

A Space Elevator in 7

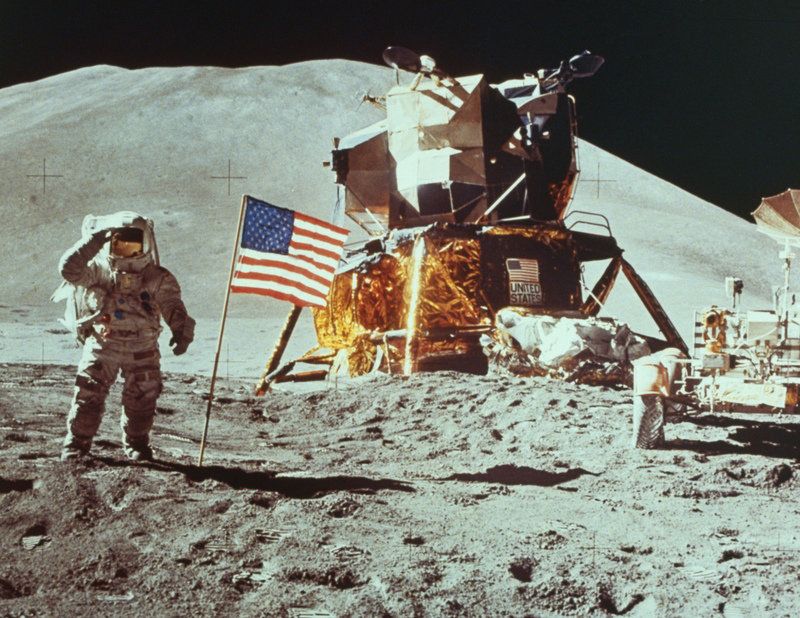

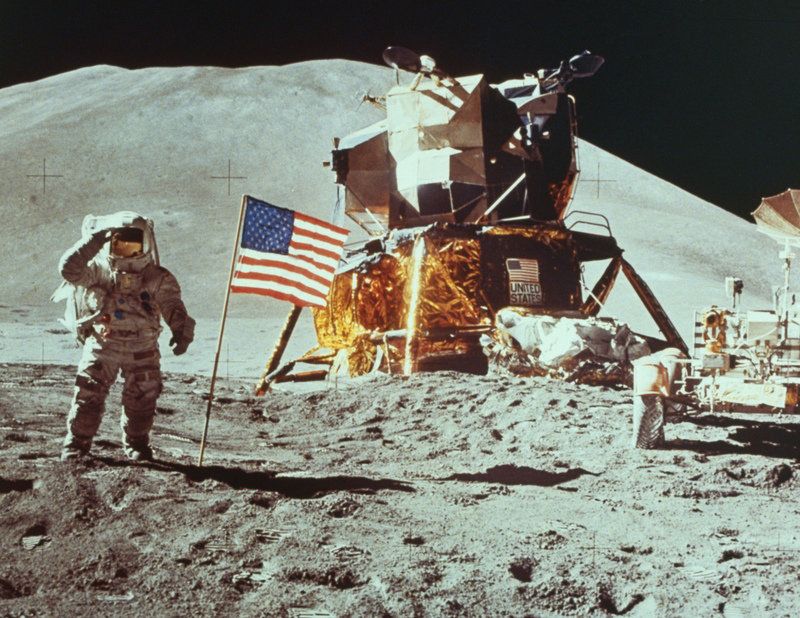

Midnight, July 20, 1969; a chiaroscuro of harsh contrasts appears on the television screen. One of the shadows moves. It is the leg of astronaut Edwin Aldrin, photographed by Neil Armstrong. Men are walking on the moon. We watch spellbound. The earth watches. Seven hundred million people are riveted to their radios and television screens on that July night in 1969. What can you do with the moon? No one knew. Still, a feeling in the gut told us that this was the greatest moment in the history of life. We were leaving the planet. Our feet had stirred the dust of an alien world.

—Robert Jastrow, Journey to the Stars

Management is doing things right, Leadership is doing the right things!

—Peter Drucker

SpaceShipOne was the first privately funded aircraft to go into space, and it set a number of important “firsts”, including being the first privately funded aircraft to exceed Mach 2 and Mach 3, the first privately funded manned spacecraft to exceed 100km altitude, and the first privately funded reusable spacecraft. The project is estimated to have cost $25 million and was built by 25 people. It now hangs in the Smithsonian because it serves no commercial purpose, and because getting into space is no longer the challenge; the expense is.

In the 21st century, more cooperation, better software, and nanotechnology will bring profound benefits to our world, and we will put the Baby Boomers to shame. I focus only on information technology in this book, but materials sciences will be one of the biggest tasks occupying our minds in the 21st century and many futurists say that nanotech is the next (and last?) big challenge after infotech.

I’d like to end this book with one more big idea: how we can jump-start the nanotechnology revolution and use it to colonize space. Space, perhaps more than any other endeavor, has the ability to harness our imagination and give everyone hope for the future. When man is exploring new horizons, there is a swagger in his step.

Colonizing space will change man’s perspective. Hoarding is a very natural instinct. If you give a well-fed dog a bone, he will bury it to save it for a leaner day. Every animal hoards. Humans hoard money, jewelry, clothes, friends, art, credit, books, music, movies, stamps, beer bottles, baseball statistics, etc. We become very attached to these hoards. Whether fighting over $5,000 or $5,000,000 the emotions have the exact same intensity.

When we feel crammed onto this pale blue dot, we forget that any resource we could possibly want is out there in incomparably big numbers. If we allocate the resources merely of our solar system to all 6 billion people equally, then this is what we each get:

| Resource | Amount |
| --- | --- |
| Hydrogen | 34,000 billion Tons |
| Iron | 834 billion Tons |
| Silicates (sand, glass) | 834 billion Tons |
| Oxygen | 34 billion Tons |
| Carbon | 34 billion Tons |
| Energy production | 64 trillion Kilowatts per hour |

Even if we confine ourselves only to the resources of this planet, we have far more than we could ever need. This simple understanding is a prerequisite for a more optimistic and charitable society, which has characterized eras of great progress. Unfortunately, NASA’s current plans are far from adding that swagger.

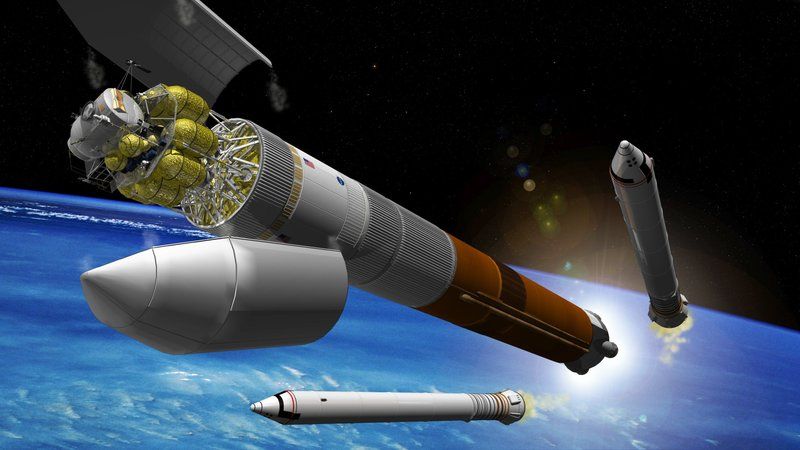

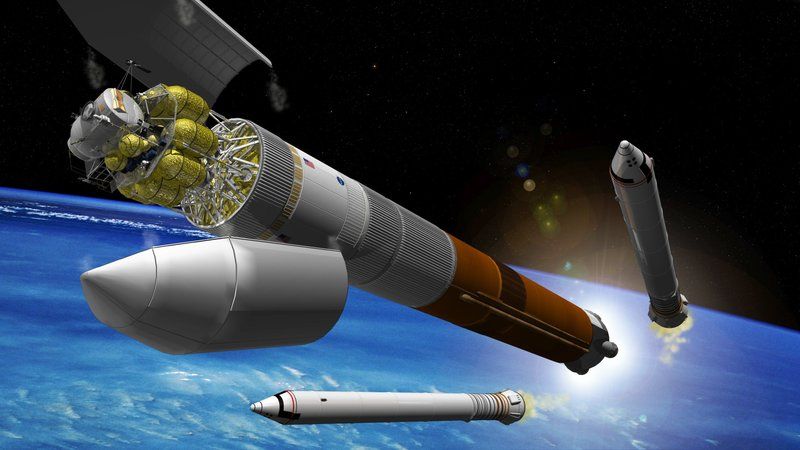

If NASA follows through on its 2004 vision to retire the Space Shuttle and go back to rockets, and go to the moon again, this is NASA’s own imagery of what we will be looking at on DrudgeReport.com in 2020.

Our astronauts will still be pissing in their space suits in 2020.

According to NASA, the above is what we will see in 2020, but if you squint your eyes, it looks just like 1969:

All this was done without things we would call computers.

Only a government bureaucracy can make so little progress in 50 years and consider it business as usual. There are many documented cases of large government organizations plagued by failures of imagination, yet no one considers that the rocket-scientist-bureaucrats at NASA might also be plagued by this affliction. This is especially ironic because the current NASA Administrator, Michael Griffin, has admitted that many of its past efforts were failures:

- The Space Shuttle, designed in the 1970s, is considered a failure because it is unreliable, expensive, and small. It costs $20,000 per pound of payload to put into low-earth orbit (LEO), a mere few hundred miles up.

- The International Space Station (ISS) is small, and only 200 miles away, where gravity is 88% of that at sea-level. It is not self-sustaining and doesn’t get us any closer to putting people on the moon or Mars. (By moving at 17,000 miles per hour, it falls fast enough to stay in the same orbit.) America alone spent $100 billion on this boondoggle.
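The two ISS figures above can be sanity-checked with textbook orbital mechanics. The following is only a rough sketch: the function names are mine, Earth is treated as a uniform sphere, and the orbit is assumed to be circular.

```python
import math

# Standard physical constants (not from the text).
GM_EARTH = 3.986004418e14   # Earth's gravitational parameter, m^3/s^2
R_EARTH = 6.371e6           # mean Earth radius, m
METERS_PER_MILE = 1609.344


def gravity_fraction(altitude_miles):
    """Gravity at the given altitude as a fraction of sea-level gravity.

    Uses the inverse-square law: g(h) / g(0) = (R / (R + h))^2.
    """
    r = R_EARTH + altitude_miles * METERS_PER_MILE
    return (R_EARTH / r) ** 2


def circular_orbit_speed_mph(altitude_miles):
    """Speed needed to stay in a circular orbit at the given altitude."""
    r = R_EARTH + altitude_miles * METERS_PER_MILE
    speed_m_per_s = math.sqrt(GM_EARTH / r)
    return speed_m_per_s * 3600 / METERS_PER_MILE


if __name__ == "__main__":
    for miles in (200, 250):
        print(miles, "miles:",
              round(gravity_fraction(miles), 3), "of sea-level gravity,",
              round(circular_orbit_speed_mph(miles)), "mph")
```

Under these assumptions the estimate is roughly 90% of sea-level gravity at 200 miles and roughly 88% at 250 miles, with an orbital speed a little over 17,000 miles per hour in both cases. The point stands either way: astronauts float because they are falling around the Earth, not because gravity has gone away.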

The key to any organization’s ultimate success, from NASA to any private enterprise, is that there are leaders at the top with vision. NASA’s mistakes were not that it was built by the government, but that the leaders placed the wrong bets. Microsoft, by contrast, succeeded because Bill Gates made many smart bets. NASA’s current goal is “flags and footprints”, but their goal should be to make it cheap to do those things, a completely different objective.1

I don’t support redesigning the Space Shuttle, but I also don’t believe that anyone at NASA has seriously considered building a next-generation reusable spacecraft. NASA is basing its decision to move back to rockets primarily on the failures of the first Space Shuttle, an idea similar to looking at the first car ever built and concluding that cars won’t work.

Unfortunately, NASA is now going back to technology even more primitive than the Space Shuttle. The “consensus” in the aerospace industry today is that rockets are the future. Rockets might be in our future, but they are also in the past. The state-of-the-art in rocket research is to make them 15% more efficient. Rocket research is incremental today because the fundamental chemistry and physics haven’t changed since their first launches in the mid-20th century.

Chemical rockets are a mistake because the fuel which propels them upward is inefficient. They have a low “specific impulse”, which means it takes lots of fuel to accelerate the payload, and even more fuel to accelerate that fuel! As you can see from the impressive scenes of shuttle launches, the current technology is not at all efficient; rockets typically contain 6% payload and 94% overhead. (Jet engines don’t work without oxygen but are 15 times more efficient than rockets.)
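The "fuel to accelerate the fuel" problem is captured by the Tsiolkovsky rocket equation. Here is a minimal sketch of it. The figure of about 9,400 m/s to reach low-earth orbit (including gravity and drag losses) and the two specific-impulse values are my own illustrative assumptions, not numbers from this chapter, and the model ignores staging.

```python
import math

G0 = 9.80665  # standard gravity, m/s^2 (converts specific impulse to exhaust velocity)


def mass_ratio(delta_v, isp_seconds):
    """Liftoff mass divided by burnout mass (Tsiolkovsky rocket equation)."""
    exhaust_velocity = isp_seconds * G0
    return math.exp(delta_v / exhaust_velocity)


def propellant_fraction(delta_v, isp_seconds):
    """Share of the liftoff mass that must be propellant."""
    return 1 - 1 / mass_ratio(delta_v, isp_seconds)


if __name__ == "__main__":
    DELTA_V_TO_LEO = 9400  # m/s, assumed value including losses
    for isp in (350, 450):  # assumed: roughly kerosene-class and hydrogen-class engines
        share = propellant_fraction(DELTA_V_TO_LEO, isp)
        print(f"Isp {isp} s: about {share:.0%} of liftoff mass is propellant")
```

With these assumed inputs, a single-stage rocket comes out at roughly 88% to 94% propellant, and the tanks, engines, and payload must all fit in what is left. Because the specific impulse sits inside an exponential, small improvements in it help only a little, which is why incremental rocket research cannot change the picture.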

If you want to know why we have not been back to the moon for decades, here is an analogy:

| What would taking delivery of this car cost you? |
| --- |
| A Californian buys a car made in Japan. |
| The car is shipped in its own car carrier. |
| The car is off-loaded in the port of Los Angeles. |
| The freighter is then sunk. |

The latest in propulsion technology is electrical ion drives, which accelerate atoms 20 times faster than chemical rockets, which means you need much less fuel. The inefficiency of our current chemical rockets is what is preventing man from colonizing space. Our simple modern rockets might be cheaper than our complicated old Space Shuttle, but it will still cost thousands of dollars per pound to get to LEO, a fancy acronym for 200 miles away. Working on chemical rockets today is the technological equivalent of polishing a dusty turd, yet this is what our esteemed NASA is doing.

The Space Elevator

When a distinguished but elderly scientist states that something is possible, he is almost certainly right. When he states that something is impossible, he is very probably wrong.

—Arthur C. Clarke RIP, 1962

The best way to predict the future is to invent it. The future is not laid out on a track. It is something that we can decide, and to the extent that we do not violate any known laws of the universe, we can probably make it work the way that we want to. —Alan Kay

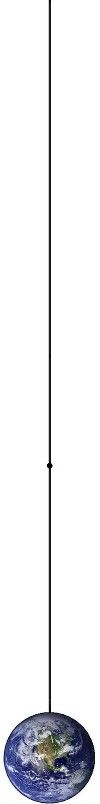

A NASA depiction of the space elevator. A space elevator will make it hundreds of times cheaper to put a pound into space. It is an efficiency difference comparable to that between the horse and the locomotive.

One of the best ways to cheaply get back into space is kicking around NASA’s research labs:

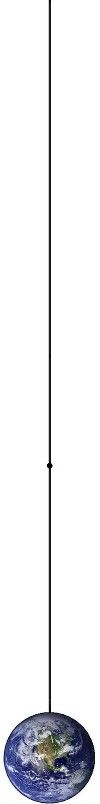

Scale picture of the space elevator relative to the size of Earth. The moon is 30 Earth-diameters away, but once you are at GEO, it requires relatively little energy to get to the moon, or anywhere else.

A space elevator is a 65,000-mile tether upon which we can launch things into space in a slow, safe, and cheap way.
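To see where GEO sits on that tether, here is a small sketch that derives the geostationary altitude from Kepler's third law. The constants are standard published values, the function name is mine, and the calculation assumes a circular orbit over the equator.

```python
import math

# Standard physical constants (not from the text).
GM_EARTH = 3.986004418e14      # Earth's gravitational parameter, m^3/s^2
R_EARTH_EQUATOR = 6.378137e6   # equatorial radius, m
SIDEREAL_DAY = 86164.0905      # one rotation of the Earth, seconds
METERS_PER_MILE = 1609.344


def geostationary_altitude_miles():
    """Altitude at which a circular orbit takes exactly one sidereal day.

    Kepler's third law: T^2 = 4 * pi^2 * r^3 / GM, solved for r.
    """
    r = (GM_EARTH * SIDEREAL_DAY ** 2 / (4 * math.pi ** 2)) ** (1 / 3)
    return (r - R_EARTH_EQUATOR) / METERS_PER_MILE


if __name__ == "__main__":
    print(round(geostationary_altitude_miles()), "miles above the equator")
```

This gives roughly 22,200 miles. As I understand the usual designs, the tether runs well beyond that height so that the portion above GEO acts as a counterweight and keeps the ribbon taut, which is why the tether is so much longer than the distance to GEO itself.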

The climbers that ride up the tether don’t even need to carry their own energy, because solar panels can power them. All this means you need much less fuel. Everything is fully reusable, so when you have built such a system, it is easy to have daily launches.

The first elevator’s climbers will travel into space at just a few hundred miles per hour — a very safe speed. Building a device which can survive the acceleration and jostling is a large part of the expense of putting things into space today. This technology will make it hundreds, and eventually thousands of times cheaper to put things, and eventually people, into space.

A space elevator might sound like science fiction, but like many of the ideas of science fiction, it is a fantasy that makes economic sense. While you needn’t trust my opinion on whether a space elevator is feasible, NASA has never officially weighed in on the topic — also a sign they haven’t given it serious consideration.

This all may sound like science fiction, but compared to the technology of the 1960s, when mankind first embarked on a trip to the moon, a space elevator is simple for our modern world to build. In fact, if you took a cellphone back to the Apollo scientists, they’d treat it like a supercomputer and have teams of engineers huddled over it 24 hours a day. With only the addition of the computing technology of one cellphone, we might have shaved a year off the date of the first moon landing.

Carbon Nanotubes

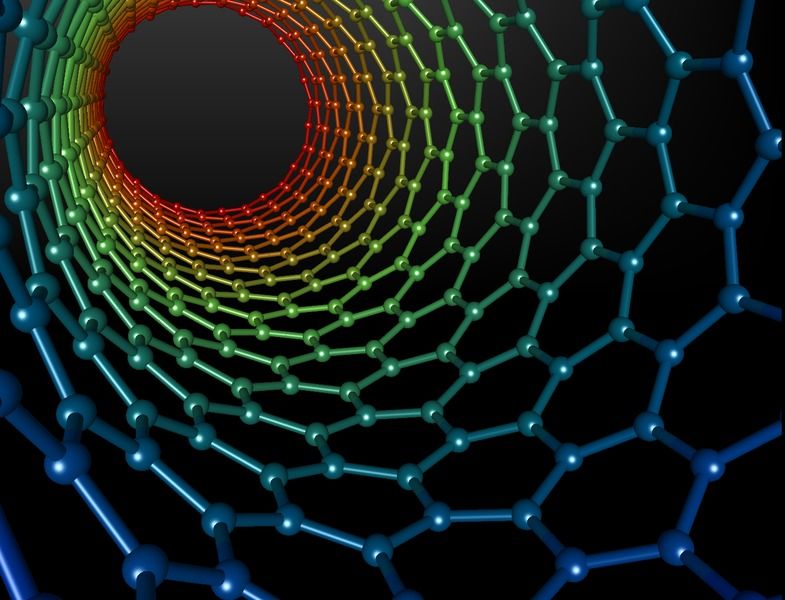

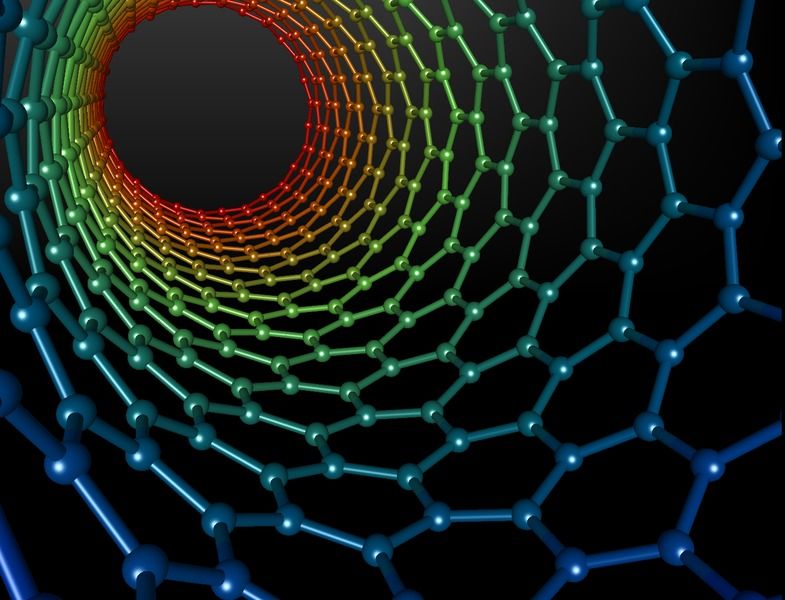

Nanotubes are carbon atoms bonded in a hexagonal pattern and rolled into a tube. Graphic created by Michael Ströck.

We have every technological capability necessary to build a space elevator with one exception: carbon nanotubes (CNT). To adapt a line from Thomas Edison, a space elevator is 1% inspiration, and 99% perspiration.

Carbon nanotubes are extremely strong and light, with a theoretical strength of three million kilograms per square centimeter; a bundle the size of a few hairs can lift a car. The theoretical strength of nanotubes is far greater than what we would need for our space elevator; current baseline designs specify a paper-thin, 3-foot-wide ribbon. These seemingly flimsy dimensions would be strong enough to support their own weight, and the 10-ton climbers using the elevator.
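The "few hairs can lift a car" claim is easy to check as arithmetic. The sketch below uses the theoretical strength quoted above. The hair diameter (0.07 mm) and car mass (1,500 kg) are my own assumed round numbers, and real bundles would fall short of the theoretical limit.

```python
import math

G0 = 9.80665  # standard gravity, m/s^2
THEORETICAL_STRENGTH_KGF_PER_CM2 = 3_000_000  # figure quoted in the text


def strength_in_gpa(kgf_per_cm2):
    """Convert kilograms-force per square centimeter to gigapascals."""
    pascals = kgf_per_cm2 * G0 / 1e-4  # 1 cm^2 = 1e-4 m^2
    return pascals / 1e9


def strands_needed(load_kg, strand_diameter_mm,
                   kgf_per_cm2=THEORETICAL_STRENGTH_KGF_PER_CM2):
    """Number of round strands of the given diameter needed to hold a load."""
    radius_cm = strand_diameter_mm / 10 / 2
    area_cm2 = math.pi * radius_cm ** 2
    capacity_kg = kgf_per_cm2 * area_cm2
    return math.ceil(load_kg / capacity_kg)


if __name__ == "__main__":
    print(round(strength_in_gpa(THEORETICAL_STRENGTH_KGF_PER_CM2)), "GPa")
    # Assumed values: a 1,500 kg car and hair-thick (0.07 mm) strands.
    print(strands_needed(1500, 0.07), "hair-sized strands to lift the car")
```

Under those assumptions the quoted strength is about 294 GPa, each hair-sized strand holds a bit over 100 kg, and about a dozen strands carry the car.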

The nanotubes we need for our space elevator are the perfect place to start the nanotechnology revolution because, unlike biological nanotechnology research, which uses hundreds of different atoms in extremely complicated structures, nanotubes have a trivial design.

The best way to attack a big problem like nanotechnology is to first attack a small part of it, like carbon nanotubes. A “Manhattan Project” on general nanotechnology does not make sense because it is too unfocused a problem, but such an effort might make sense for nanotubes. Or, it might simply require the existing industrial expertise of a company like Intel. Intel is already experimenting with nanotubes inside computer chips because metal loses the ability to conduct electricity at very small diameters. But no one has asked them if they could build mile-long ropes.

The US government has increased investments in nanotechnology recently, but we aren’t seeing many results. From space elevator expert Brad Edwards:

There’s what’s called the National Nanotechnology Initiative. When I looked into it, the budget was a billion dollars. But when you look closer at it, it is split up between a dozen agencies, and within each agency it’s split again into a dozen different areas, much of it ends up as $100,000 grants. We looked into it with regards to carbon nanotube composites, and it appeared that about thirty million dollars was going into high-strength materials — and a lot of that was being spent internally in a lot of the agencies; in the end there’s only a couple of million dollars out of the billion-dollar budget going into something that would be useful to us. The money doesn’t have focus, and it’s spread out to include everything. You get a little bit of effort in a thousand different places. A lot of the budget is spent on one entity trying to play catch-up with whoever is leading. Instead of funding the leader, they’re funding someone else internally to catch up.

Again, here is a problem similar to the one we find in software today: people playing catch-up rather than working together. I don’t know what nanotechnology scientists do every day, but it sounds like they would do well to follow in the footsteps of our free software pioneers and start cooperating.

The widespread production of nanotubes could be the start of a nanotechnology revolution. And the space elevator, the killer app of nanotubes, will enable the colonization of space.

Why?

William Bradford, speaking in 1630 of the founding of the Plymouth Bay Colony, said that all great and honorable actions are accompanied with great difficulties, and both must be enterprised and overcome with answerable courage.

There is no strife, no prejudice, no national conflict in outer space as yet. Its hazards are hostile to us all. Its conquest deserves the best of all mankind, and its opportunity for peaceful cooperation may never come again. But why, some say, the moon? Why choose this as our goal? And they may well ask why climb the highest mountain? Why, 35 years ago, fly the Atlantic? Why does Rice play Texas?

We choose to go to the moon. We choose to go to the moon in this decade and do the other things, not because they are easy, but because they are hard, because that goal will serve to organize and measure the best of our energies and skills, because that challenge is one that we are willing to accept, one we are unwilling to postpone, and one which we intend to win, and the others, too.

It is for these reasons that I regard the decision last year to shift our efforts in space from low to high gear as among the most important decisions that will be made during my incumbency in the office of the Presidency.

In the last 24 hours we have seen facilities now being created for the greatest and most complex exploration in man’s history. We have felt the ground shake and the air shattered by the testing of a Saturn C-1 booster rocket, many times as powerful as the Atlas which launched John Glenn, generating power equivalent to 10,000 automobiles with their accelerators on the floor. We have seen the site where five F-1 rocket engines, each one as powerful as all eight engines of the Saturn combined, will be clustered together to make the advanced Saturn missile, assembled in a new building to be built at Cape Canaveral as tall as a 48 story structure, as wide as a city block, and as long as two lengths of this field.

The growth of our science and education will be enriched by new knowledge of our universe and environment, by new techniques of learning and mapping and observation, by new tools and computers for industry, medicine, the home as well as the school.

I do not say that we should or will go unprotected against the hostile misuse of space any more than we go unprotected against the hostile use of land or sea, but I do say that space can be explored and mastered without feeding the fires of war, without repeating the mistakes that man has made in extending his writ around this globe of ours.

We have given this program a high national priority — even though I realize that this is in some measure an act of faith and vision, for we do not now know what benefits await us. But if I were to say, my fellow citizens, that we shall send to the moon, 240,000 miles away from the control station in Houston, a giant rocket more than 300 feet tall, the length of this football field, made of new metal alloys, some of which have not yet been invented, capable of standing heat and stresses several times more than have ever been experienced, fitted together with a precision better than the finest watch, carrying all the equipment needed for propulsion, guidance, control, communications, food and survival, on an untried mission, to an unknown celestial body, and then return it safely to earth, re-entering the atmosphere at speeds of over 25,000 miles per hour, causing heat about half that of the temperature of the sun — almost as hot as it is here today — and do all this, and do it right, and do it first before this decade is out — then we must be bold.

—John F. Kennedy, September 12, 1962

Lunar Lander at the top of a rocket. Rockets are expensive and impose significant design constraints on space-faring cargo.

NASA has 18,000 employees and a $17-billion budget. Even with a fraction of those resources, their ability to oversee the design, handle mission control, and work with many partners is more than equal to this task.

If NASA doesn’t build the space elevator, someone else might, and it would change almost everything about how NASA does things today. NASA’s tiny (15-foot-wide) new Orion spacecraft, which was built to return us to the moon, was designed to fit atop a rocket and return the astronauts to Earth with a 25,000-mph thud, just like in the Apollo days. Without the constraints a rocket imposes, NASA’s spaceship to get us back to the moon would have a very different design. NASA would need to throw away a lot of the R&D they are now doing if a space elevator were built.

Another reason the space elevator makes sense is that it would get the various scientists at NASA to work together on a big, shared goal. NASA has recently sent robots to Mars to dig two-inch holes in the dirt. That type of experience is similar to the skills necessary to build the robotic climbers that would climb the elevator, putting those scientists to use on a greater purpose.

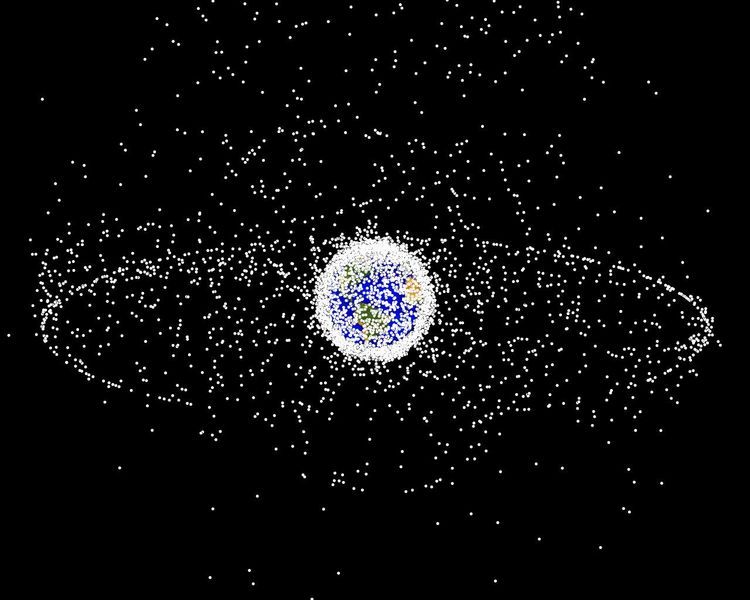

Space debris is a looming hazard, and a threat to the ribbon:

Map of space debris. The US Strategic Command monitors 10,000 large objects to prevent them from being misinterpreted as a hostile missile. China blew up a satellite in January, 2007 which created 35,000 pieces of debris larger than 1 centimeter.

The space elevator provides both a motive, and a means to launch things into space to remove the debris. (The first elevator will need to be designed with an ability to move around to avoid debris!)

Once you have built your first space elevator, the cost of building the second one drops dramatically. A space elevator will eventually make it $10 per pound to put something into space. This will open many doors for scientists and engineers around the globe: bigger and better observatories, a spaceport at GEO, and so forth.

Surprisingly, one of the biggest incentives for space exploration is likely to be tourism. From Hawaii to Africa to Las Vegas, the primary revenue in many exotic places is tourism. We will go to the stars because man is driven to explore and see new things.

Space is an extremely harsh place, which is why it is such a miracle that there is life on Earth to begin with. The moon is too small to have an atmosphere, but we can terraform Mars to create one, and make it safe from radiation and pleasant to visit. This will also teach us a lot about climate change, and in fact, until we have terraformed Mars, I am going to assume the global warming alarmists don’t really know what they are talking about yet.2 One of the lessons in engineering is that you don’t know how something works until you’ve done it once.

Terraforming Mars may sound like a silly idea today, but it is simply another engineering task.3 I worked in several different groups at Microsoft, and even though the set of algorithms surrounding databases is completely different from that for text engines, they are all engineering problems and the approach is the same: break a problem down and analyze each piece. (One of the interesting lessons I learned at Microsoft was the difference between real life and standardized tests. In a standardized test, if a question looks hard, you should skip it and move on so as not to waste precious time. At Microsoft, we would skip past the easy problems and focus our time on the hard ones.)

Engineering teaches you that there are an infinite number of ways to attack a problem, each with various trade-offs; it might take 1,000 years to terraform Mars if we were to send one ton of material, but only 20 years if we could send 1,000 tons of material. Whatever we finally end up doing, the first humans to visit Mars will be happy that we turned it green for them. This is another way our generation can make its mark.

A space elevator is a doable mega-project, but there is no progress beyond a few books and conferences because the very small number of people on this planet who are capable of initiating this project are not aware of the feasibility of the technology.

Brad Edwards, one of the world’s experts on the space elevator, has a PhD and a decade of experience designing satellites at Los Alamos National Labs, and yet he has told me that he is unable to get into the doors of leadership at NASA, or the Gates Foundation, etc. No one who has the authority to organize this understands that a space elevator is doable.

Glenn Reynolds has blogged about the space elevator on his very influential Instapundit.com, yet a national dialog about this topic has not yet happened, and NASA is just marching ahead with its expensive, dim ideas. My book is an additional plea: one more time, and with feeling!

How and When

It does not follow from the separation of planning and doing in the analysis of work that the planner and the doer should be two different people. It does not follow that the industrial world should be divided into two classes of people: a few who decide what is to be done, design the job, set the pace, rhythm and motions, and order others about; and the many who do what and as they are told.

—Peter Drucker

There are many interesting details surrounding a space elevator, and for those interested in further details, I recommend The Space Elevator, co-authored by Brad Edwards.

The size of the first elevator is one of the biggest questions to resolve. If you were going to lay fiber optic cables across the Atlantic ocean, you’d set aside a ton of bandwidth capacity. Likewise, the most important metric for our first space elevator is its size. I believe at least 100 tons / day is a worthy requirement, otherwise the humans will revert to form and start hoarding the cargo space.

The one other limitation with current designs is that they assume climbers which travel hundreds of miles per hour. This is a fine speed for cargo, but it means that it will take days to get into orbit. If we want to send humans into space in an elevator, we need to build climbers which can travel at least 10,000 miles per hour. While this seems ridiculously fast, if you accelerate to this speed over a period of minutes, it will not be jarring. Perhaps this should be the challenge for version two if they can’t get it done the first time.
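The trip-time and comfort claims can be put into numbers. This is only a back-of-the-envelope sketch: the GEO altitude of about 22,236 miles is a standard figure I am supplying rather than one from this chapter, the climber is assumed to hold a constant cruising speed, and the time spent speeding up and slowing down is ignored in the trip estimate.

```python
METERS_PER_MILE = 1609.344
G0 = 9.80665                  # standard gravity, m/s^2
GEO_ALTITUDE_MILES = 22_236   # assumed standard figure, not from the text


def trip_hours(speed_mph, distance_miles=GEO_ALTITUDE_MILES):
    """Hours to cover the distance at a constant cruising speed."""
    return distance_miles / speed_mph


def acceleration_in_g(top_speed_mph, ramp_minutes):
    """Constant acceleration, in units of g, needed to reach top speed in the given time."""
    top_speed_m_per_s = top_speed_mph * METERS_PER_MILE / 3600
    return top_speed_m_per_s / (ramp_minutes * 60) / G0


if __name__ == "__main__":
    print(f"200 mph cargo climber: {trip_hours(200) / 24:.1f} days to GEO")
    print(f"10,000 mph passenger climber: {trip_hours(10_000):.1f} hours to GEO")
    for minutes in (5, 10):
        g = acceleration_in_g(10_000, minutes)
        print(f"reaching 10,000 mph in {minutes} minutes: about {g:.1f} g")
```

With these assumptions, a 200 mph climber needs about four and a half days to reach GEO, while a 10,000 mph climber needs a little over two hours. Ramping up to that speed over ten minutes works out to under 1 g, and over five minutes to about 1.5 g, both far gentler than a rocket launch.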

The conventional wisdom amongst those who think it is even possible is that it will take between 20 and 50 years to build a space elevator. However, anyone who makes such predictions doesn’t understand that engineering is a fungible commodity. I can only presume they have never had the privilege of working with a team of 100 people who in 3 days accomplish as much as you will in a year. Two people will, in general, accomplish something twice as fast as one person.4 How can you say something will unequivocally take a certain amount of time when you don’t specify how many resources it will require or how many people you plan to assign to the task?

Furthermore, predictions are usually way off. If you asked someone how long it would take unpaid volunteers to make Wikipedia as big as the Encyclopedia Britannica, no one would have guessed the correct answer of two and a half years. From creating a space elevator to world domination by Linux, anything can happen in far less time than we think is possible if everyone simply steps up to play their part. The way to be a part of the future is to invent it, by unleashing our scientific and creative energy towards big, shared goals. Wikipedia, as our encyclopedia, was an inspiration to millions of people, and so the resources have come piling in. The way to get help is to create a vision that inspires people. In a period of 75 years, man went from using horses and wagons to landing on the moon. Why should it take 20 years to build something that is 99% doable today?

Many of the components of a space elevator are simple enough that college kids are building prototype elevators in their free time. The Elevator:2010 contest is sponsored by NASA, but while these contests have generated excitement and interest in the press, they are building toys, much like a radio-controlled airplane is a toy compared to a Boeing airliner.

I believe we could have a space elevator built in 7 years. If you divvy up five years of work per person, and add in a year to ramp up and test, you can see how seven years is quite reasonable. Man landed on the moon 7 years after Kennedy’s speech, exactly as he ordained, because dates can be self-fulfilling prophecies. It allows everyone to measure themselves against their goals, and determine if they need additional resources. If we decided we needed an elevator because our civilization had a threat of extermination, one could be built in a very short amount of time.

If the design of the hardware and the software were done in a public fashion, others could take the intermediate efforts and test them and improve them, therefore saving further engineering time. Perhaps NASA could come up with hundreds of truly useful research projects for college kids to help out on instead of encouraging them to build toys. There is a lot of software to be written and that can be started now.

The Unknown Unknown is the nanotubes, but nearly all the other pieces can be built without having any access to them. We will only need them wound into a big spool on the launch date.

I can imagine that any effort like this would get caught up in a tremendous amount of international political wrangling that could easily add years on to the project. We should not let this happen, and we should remind each other that the space elevator is just the railroad car to space — the exciting stuff is the cargo inside and the possibilities out there. A space elevator is not a zero sum endeavor: it would enable lots of other big projects that are totally unfeasible currently. A space elevator would enable various international space agencies that have money, but no great purpose, to work together on a large, shared goal. And as a side effect it would strengthen international relations.5

1 The Europeans aren’t providing great leadership either. One of the big investments of their Space agencies, besides the ISS, is to build a duplicate GPS satellite constellation, which they are doing primarily because of anti-Americanism! Too bad they don’t realize that their emotions are causing them to re-implement 35 year-old technology, instead of spending that $5 Billion on a truly new advancement. Cloning GPS in 2013: Quite an achievement, Europe!

2 Carbon is not a pollutant and is valuable. It is 18% of the mass of the human body, but only 0.03% of the mass of the Earth. If Carbon were more widespread, diamonds would be cheaper. Driving very fast cars is the best way to unlock the carbon we need. Anyone who thinks we are running out of energy doesn’t understand the algebra in E = mc².

3 Mars’ moon, Phobos, is only 3,700 miles above Mars, and if we create an atmosphere, it will slow down and crash. We will need to find a place to crash the fragments, I suggest in one of the largest canyons we can find; we could put them next to a cross dipped in urine and call it the largest man-made art.

4 Fred Brooks’ The Mythical Man-Month argues that adding engineers late to a project makes a project later, but ramp-up time is just noise in the management of an engineering project. Also, wikis, search engines, and other technologies invented since his book have lowered the overhead of collaboration.

5 Perhaps the Europeans could build the station at GEO. Russia could build the shuttle craft to move cargo between the space elevator and the moon. The Middle East could provide an electrical grid for the moon. China could take on the problem of cleaning up the orbital space debris and build the first moon base. Africa could attack the problem of terraforming Mars, etc.
