TOWARDS A METAMATERIALLY-BASED ANALOGUE SENSOR FOR TELESCOPE EYEPIECES

Jeremy Batterson

https://www.youtube.com/watch?v=rVQWmWkbzkw.

(NB: Those familiar with photography or telescopy can skip over the “elements of a system,” since they will already know this.)

In many telescopic applications, what is desired is not a more magnified image, but a brighter image. Some astronomical objects, such as the Andromeda galaxy or famous nebulae like M42, are very large in apparent size, but very faint. If the human eye could see the full extent of the Andromeda galaxy, it would appear four times wider than the Moon. The great Orion nebula M42 is twice the apparent diameter of the Moon.

Astrophotographers have an advantage over visual astronomers in that their digital sensors can be wider than the human pupil, and thus can accommodate larger exit pupils for brighter images.

The common three-factor account of the brightness of a photograph (aperture, ISO, and shutter speed) should actually be a five-factor one, including two factors that are often left out because they are already designed into a system: magnification and exit pupil. The five factors are:

Elements of a system: 1) Aperture. As aperture increases, the light gain of a system increases by the square of the increase in aperture diameter, so a 2-inch-diameter entrance pupil has four times the gain of a 1-inch-diameter entrance pupil, and so on.

2) ISO: the de facto sensitivity of the film or digital sensor. A higher-ISO sensor will record the same camera shot brighter. In modern digital systems, the ISO gain is applied PRIOR to post-shot processing, such as would be done with Photoshop curves, since boosting the gain after the image is created will also amplify the noise. Applying the gain before the shot is finalized brings in less noise and makes clearer images.

3) Shutter speed. A slower shutter speed allows more time for light to accumulate, which is fine for still shots, where there is no motion. In astronomy, long-exposure photographs keep the shutter open while the telescope slowly tracks the stars, gradually accumulating light to produce images that would otherwise be obtainable only with a huge-aperture telescope. In daytime, when plenty of light is available, a fast shutter speed keeps moving subjects from being blurred.

4) Magnification, often left out of accounts, lowers the light gain by the square of the increase in magnification, so it acts as the inverse of increased aperture. If we magnify an image two times, the light is spread over an area four times greater, and the image is thus four times dimmer. Of course, this does not apply to stars, which remain points of light at any magnification; they will appear just as bright, but against a darker sky. Anything that has an apparent area (planets, nebulae, etc.) will be dimmed.

5) Exit pupil, a key limiting factor in astronomy. Together, the above four factors make ultra-fast, bright telescopes or binoculars for visual use a physical impossibility. This is why astronomical binoculars are typically 7×50 or something similar. The objective lenses at the front create a 50mm entrance pupil, but if these binoculars go to a significantly lower magnification than 7x, the exit pupil becomes wider than the dark-adapted human pupil, and much of the light is wasted. If this problem did not exist, astronomers would prefer “2×50” binoculars for many applications, giving visual images far brighter than the unaided eye can see.
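The aperture, magnification, and exit-pupil relations above can be sketched numerically. This is a minimal illustration, not a full optical model: the function names are hypothetical, and the 7 mm dark-adapted eye pupil is an assumed typical value that does not come from the text.

```python
def relative_aperture_gain(new_diameter: float, old_diameter: float) -> float:
    """Light gain scales with the square of the aperture diameter ratio."""
    return (new_diameter / old_diameter) ** 2


def relative_surface_brightness(new_mag: float, old_mag: float) -> float:
    """Extended objects dim with the square of the magnification ratio."""
    return (old_mag / new_mag) ** 2


def exit_pupil_mm(aperture_mm: float, magnification: float) -> float:
    """Exit pupil diameter = entrance pupil diameter / magnification."""
    return aperture_mm / magnification


def usable_light_fraction(aperture_mm: float, magnification: float,
                          eye_pupil_mm: float = 7.0) -> float:
    """Fraction of the exit-pupil light that actually enters the eye.

    eye_pupil_mm = 7.0 is an ASSUMED dark-adapted pupil diameter.
    """
    exit_pupil = exit_pupil_mm(aperture_mm, magnification)
    if exit_pupil <= eye_pupil_mm:
        return 1.0  # the whole exit pupil fits inside the eye's pupil
    return (eye_pupil_mm / exit_pupil) ** 2  # ratio of pupil areas


print(relative_aperture_gain(2, 1))        # 2-inch vs 1-inch aperture -> 4.0
print(relative_surface_brightness(2, 1))   # doubling magnification -> 0.25

for mag in (7, 2):
    ep = exit_pupil_mm(50, mag)
    frac = usable_light_fraction(50, mag)
    print(f"{mag}x50: exit pupil {ep:.1f} mm, usable light {frac:.0%}")
# 7x50: exit pupil 7.1 mm, usable light 96%
# 2x50: exit pupil 25.0 mm, usable light 8%
```

Under that assumed pupil size, a hypothetical 2×50 binocular would deliver only about 8% of its collected light to the eye, which is the waste described in point 5.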

Analogue vs Digital: Passive night vision devices remain one of the ONLY cases where analogue optical systems are still better than digital ones. Virtually all modern cameras are digital, but all of the best night-vision devices, such as “Generation-3” devices, are still analogue. The systemic luminance gain (what the viewer actually sees) of the most advanced analogue night vision system is in the range of 2000x, meaning it can make an image 2000x brighter than it would otherwise appear. This is equivalent to turning a 6-inch telescope into a 268-inch mega-telescope in terms of light gain. For comparison, until fairly recently, the largest telescope in the world was the 200-inch telescope at Mount Palomar. In fact, the gain is so high that filters are often used to remove light pollution, and little effort is made to capture the full exit pupil. Even though some light gain will be lost by having too large an exit pupil, there is so much to spare that it is an acceptable loss. Thus, some astronomers use night vision devices to see views of astronomical objects that would otherwise be beyond any existing amateur telescope. People also use these devices as stand-alone viewers to walk around on moonless nights, with only starlight for illumination.
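The 268-inch figure follows from the aperture rule given earlier: since light gain goes as the square of aperture diameter, a luminance gain of G corresponds to multiplying the diameter by the square root of G. A small sketch of that arithmetic (the function name is hypothetical):

```python
import math


def equivalent_aperture(aperture: float, luminance_gain: float) -> float:
    """Aperture that would give the same light gain with no intensifier.

    Gain scales with diameter squared, so diameter scales with sqrt(gain).
    """
    return aperture * math.sqrt(luminance_gain)


# A 6-inch telescope with a 2000x system luminance gain
print(round(equivalent_aperture(6, 2000)))  # 268 (inches)
```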

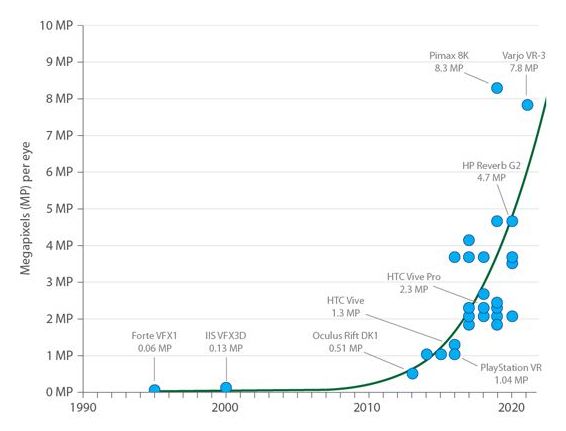

Current Loss of Resolution from Digital Gain: Digital sensors increase light gain by increasing the size of the imaging pixels, so that each one has a larger aperture and thus collects more light. But an image sensor with larger pixels also has fewer pixels per square inch and thus less resolution, leading to less clear images. One popular digital night vision device, the Sionyx digital camera, is about the size of a high-end telescope eyepiece, but has a low resolution of 1280×720. This camera boosts light gain by registering infrared and ultraviolet in addition to visible light, by adding a high ISO factor, and by having a very large pixel size and a reasonably large sensor size. Pixel resolution will remain an inherent problem of digital night vision devices unless the ISO value of each individual pixel can be boosted enough to allow pixels smaller than the human eye can perceive. On the advantageous side, if a camera like the Sionyx were to be used as a telescope eyepiece, its slowest shutter speed could be reduced still further, giving the visual equivalent of a long-exposure photograph. As the viewer watched, the image would continually grow brighter. For visual astronomy, it would also need some sort of projection system to make the image field appear round and closer to the eye, rather than like a rectangular movie screen seen from the back of a theater. Visual astronomers will only want such a digital eyepiece if its image appears similar to the image they are accustomed to seeing through the eyepiece. The alternative to such a digital eyepiece with large pixel size is what we mention below.
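The trade-off between pixel size and resolution can be made concrete with a short sketch. The sensor dimensions and pixel pitches below are purely illustrative assumptions, chosen so that the larger pitch yields a 1280×720 grid; they are not the Sionyx camera's actual specifications, and the function name is hypothetical.

```python
def pixel_tradeoff(sensor_w_um: int, sensor_h_um: int, pitch_um: int):
    """Return (columns, rows, light-collecting area per pixel in um^2)
    for a sensor of fixed size tiled with square pixels of the given pitch."""
    cols = sensor_w_um // pitch_um
    rows = sensor_h_um // pitch_um
    area_per_pixel = pitch_um ** 2  # light per pixel scales with this area
    return cols, rows, area_per_pixel


# ASSUMED sensor: 12.8 mm x 7.2 mm, expressed in micrometres
SENSOR_W_UM, SENSOR_H_UM = 12800, 7200

for pitch in (5, 10):
    cols, rows, area = pixel_tradeoff(SENSOR_W_UM, SENSOR_H_UM, pitch)
    print(f"pitch {pitch} um: {cols}x{rows} pixels, {area} um^2 per pixel")
# pitch 5 um: 2560x1440 pixels, 25 um^2 per pixel
# pitch 10 um: 1280x720 pixels, 100 um^2 per pixel
```

On the same sensor, doubling the pixel pitch gives each pixel four times the collecting area but leaves only a quarter as many pixels.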

A metamaterial-based analogue sensor: If a metamaterial-based analogue sensor is devised, with high ISO value, then this problem can be surmounted. An analogue system, such as that produced by image-intensification tubes of Generation-1 and Generation-2 night vision devices, does not use pixels, and does not lose resolution in the same way.

Analogue sensors can also have the added benefit of being larger than the human pupil, just like a digital sensor, and are thus able to accommodate larger exit pupils. They could be used in combination with digital processing for the best of both worlds. In addition, in the same way that the human retina widely distributes cone and rod cells, a metamaterial sensor could widely distribute “cells” sensitive to ultraviolet, infrared, and visible light, combining them all into one system that enabled humans to see all the way from far infrared up to ultraviolet, and everything in between.

There are multiple tracks for possible metamaterially-based night vision capacities. Many are being developed by the military and will take some time to work into the civilian economy.

One system, the X27 Camera, gives full-color and good resolution: https://www.youtube.com/watch?v=rVQWmWkbzkw.

One metamaterial developed for the US military allows full-IR spectrum imaging in one compact system. It would be possible to allow near, mid and far infrared to register as a basic RGB color system:

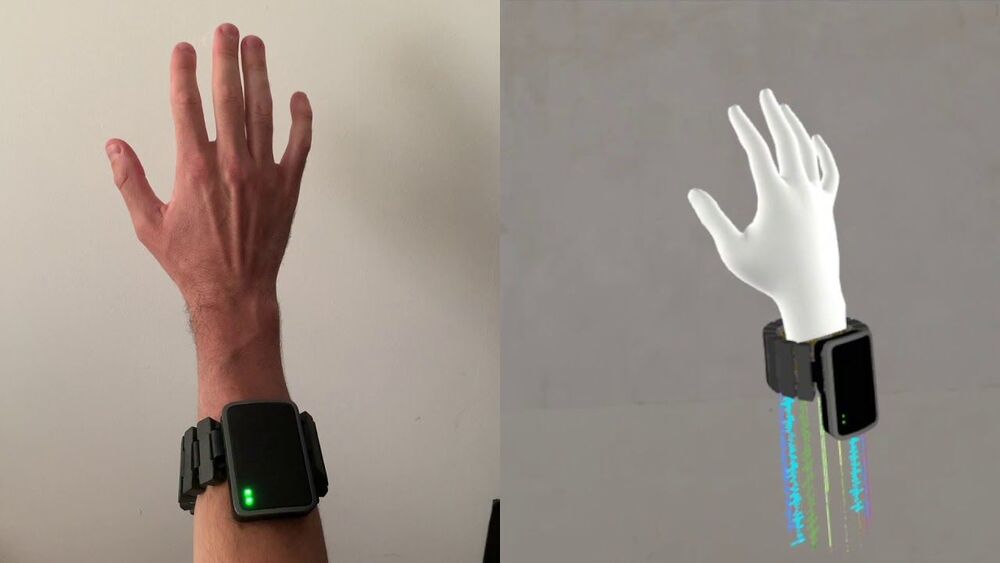
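One way to picture registering three infrared bands as an RGB image is a simple false-color composite. The sketch below is only an illustration of that idea, not the method used in the military system: the band-to-channel assignment (far IR to red, mid IR to green, near IR to blue, mirroring the wavelength order of visible light) is an assumption, as are the function names and the tiny sample data.

```python
def normalize(band):
    """Linearly rescale a 2-D list of intensities to integers 0..255."""
    lo = min(min(row) for row in band)
    hi = max(max(row) for row in band)
    span = hi - lo
    if span == 0:
        return [[0 for _ in row] for row in band]  # flat band maps to black
    return [[round(255 * (v - lo) / span) for v in row] for row in band]


def false_color(near_ir, mid_ir, far_ir):
    """Combine three same-sized IR bands into rows of (R, G, B) tuples.

    ASSUMED mapping: far IR -> red, mid IR -> green, near IR -> blue.
    """
    red, green, blue = normalize(far_ir), normalize(mid_ir), normalize(near_ir)
    return [
        [(r, g, b) for r, g, b in zip(r_row, g_row, b_row)]
        for r_row, g_row, b_row in zip(red, green, blue)
    ]


# Made-up 1x2 sample readings for each band
near = [[0, 10]]
mid = [[5, 5]]
far = [[1, 3]]
print(false_color(near, mid, far))  # [[(0, 0, 0), (255, 0, 255)]]
```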

One other possibility is a retinal projection system using special contact metalenses over the eyes, which allow the projection of an image onto the full curved human retina. Retinal projection is a technique on the verge of breakthrough, given the effort and funding going into “augmented reality” and virtual reality. A telescopic system could project its image through such an arrangement, giving the impression of a full 180-degree field of view, something no telescope in history has ever done. With such a system, the user would wear a special contact fisheye-type metalens which could project a narrow beam from a special projection eyepiece directly onto the retina. This would be a “real” image, i.e., not digitally altered. Alternatively, and more easily, the same virtual reality system that projects onto human vision would simply project a digital rendering of the view from a telescope eyepiece.

As a final aside, one additional area where metamaterials will help existing night vision gain is transmission. Current analogue devices lose around 90% of their initial gain in the system itself. Thus, a Generation-3 intensification tube may have a gain of 20000, but a system gain of only 2000. A huge amount of loss is occurring, which would be greatly reduced simply by using simpler, single flat metamaterial lenses. A flat metamaterial lens can incorporate all the elements of a complete achromatic multi-lens system into one flat lens, so that there will be less light loss. If metalenses could cut transmission loss down to 70%, a typical Generation-2 system, which is much cheaper, could perform on par with a typical current Generation-3 system. At present, metalenses have not reached the stage of development where they can be produced at large apertures, but that will change.
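The relation between tube gain, transmission loss, and system gain can be sketched as a simple product. This is a simplified model that treats all loss as a single fraction, and the function names are hypothetical. The last line works out what tube gain would be needed to reach a 2000x system gain at 70% loss; the text does not give a figure for Generation-2 tube gain, so none is assumed here.

```python
def system_gain(tube_gain: float, transmission_loss: float) -> float:
    """System gain after losing the given fraction (0..1) of the tube gain."""
    return tube_gain * (1 - transmission_loss)


def tube_gain_needed(target_system_gain: float, transmission_loss: float) -> float:
    """Tube gain required to reach a target system gain at a given loss."""
    return target_system_gain / (1 - transmission_loss)


# Figures from the text: tube gain 20000 with about 90% loss
print(round(system_gain(20000, 0.90)))      # 2000

# Same tube if metalenses cut the loss to 70%
print(round(system_gain(20000, 0.70)))      # 6000

# Tube gain that would match a 2000x system gain at 70% loss
print(round(tube_gain_needed(2000, 0.70)))  # 6667
```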

CRANE, Ind. – A Navy engineer has invented a groundbreaking method to improve night vision devices without adding weight or more batteries. Dr. Ben Conley, an electro-optics engineer at the Naval Surface Warfare Center-Crane Division, has developed a special metamaterial to bring full-spectrum infrared to warfighters. On August 28, the Navy was granted a U.S. patent on Conley’s technology.