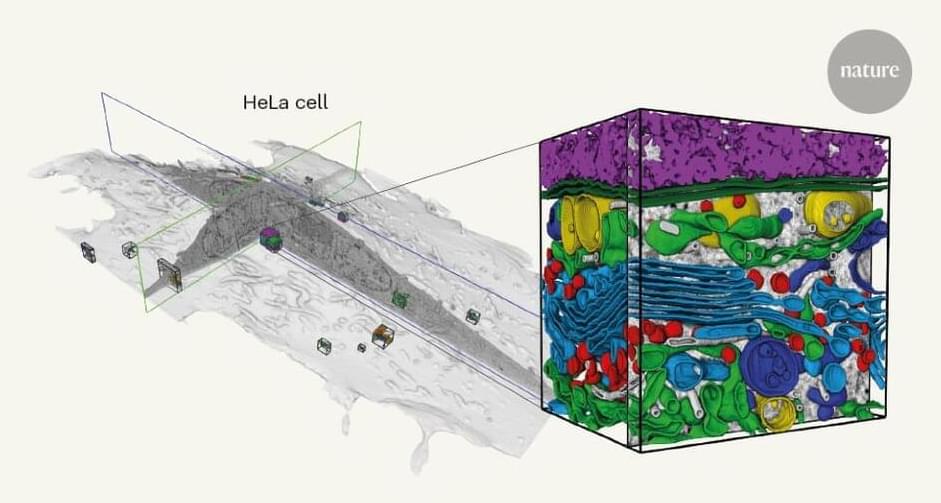

Refinement of a microscopy method enables a detailed look inside cells.

Researchers report the human brain may use next-word prediction to drive language processing.

Source: MIT

In the past few years, artificial intelligence models of language have become very good at certain tasks. Most notably, they excel at predicting the next word in a string of text; this technology helps search engines and texting apps predict the next word you are going to type.
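To make "predicting the next word" concrete, here is a minimal toy sketch. It is a simple bigram counter and is far simpler than the neural language models the article refers to. It counts which word most often follows each word in some training text and suggests that word. The function names `train_bigrams` and `predict_next` and the tiny corpus are hypothetical and exist only for illustration.

```python
from __future__ import annotations

from collections import Counter, defaultdict


def train_bigrams(text: str) -> dict[str, Counter]:
    """Count, for every word, which words follow it in the training text (toy bigram model)."""
    words = text.lower().split()
    followers: dict[str, Counter] = defaultdict(Counter)
    # Pair each word with the word immediately after it.
    for current, nxt in zip(words, words[1:]):
        followers[current][nxt] += 1
    return followers


def predict_next(followers: dict[str, Counter], word: str) -> str | None:
    """Return the most frequent follower of `word`, or None if the word was never seen."""
    counts = followers.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


# Hypothetical training text standing in for a user's typing history.
corpus = "see you soon . see you later . see you soon"
model = train_bigrams(corpus)
print(predict_next(model, "you"))  # soon
```

In this corpus "soon" follows "you" twice and "later" only once, so "soon" is suggested. Texting-app suggestions rely on far larger models and contexts, but the underlying idea of scoring likely continuations is the same.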

Only recently have researchers uncovered evidence that she wasn’t alone. In a 2019 study analyzing the complex mess of humanity’s prehistory, scientists used artificial intelligence (AI) to identify an unknown human ancestor species that modern humans encountered – and shared dalliances with – on the long trek out of Africa millennia ago.

“About 80,000 years ago, the so-called Out of Africa occurred, when part of the human population, which already consisted of modern humans, abandoned the African continent and migrated to other continents, giving rise to all the current populations,” explained evolutionary biologist Jaume Bertranpetit from the Universitat Pompeu Fabra in Spain.

As modern humans forged this path into the landmass of Eurasia, they forged some other things too – breeding with ancient and extinct hominids from other species.

Tesla’s Autopilot and Full Self-Driving (FSD) Beta are, at their core, safety systems. They may be advanced enough to make driving tasks extremely easy and convenient, but CEO Elon Musk has consistently maintained that Tesla’s advanced driver-assist technologies are being developed to make the world’s roads as safe as possible.

That safety focus seems to be paying off for some members of the FSD Beta group, which is currently being expanded even to drivers with a Safety Score of 99. As the company expands its fleet of vehicles equipped with FSD Beta, some testers have started sharing stories about how the advanced driver-assist system helped them avoid potential accidents on the road.

FSD Beta tester @FrenchieEAP, for example, recently shared a story about a moment when his Model 3 was sitting at a red light with the Full Self-Driving Beta engaged. When the light turned green, the all-electric sedan started moving forward — before braking suddenly. The driver initially thought that the FSD Beta was stopping for no reason, but a second later, the Model 3 owner realized that a cyclist had actually jumped a red light. The FSD Beta just saw the cyclist before he did.

An artificial intelligence (AI)-based technology rapidly diagnoses rare disorders in critically ill children with high accuracy, according to a report by scientists from University of Utah Health and Fabric Genomics, collaborators on a study led by Rady Children’s Hospital in San Diego. The benchmark finding, published in Genomic Medicine, foreshadows the next phase of medicine, where technology helps clinicians quickly determine the root cause of disease so they can give patients the right treatment sooner.

“This study is an exciting milestone demonstrating how rapid insights from AI-powered decision support technologies have the potential to significantly improve patient care,” says Mark Yandell, Ph.D., co-corresponding author on the paper. Yandell is a professor of human genetics and Edna Benning Presidential Endowed Chair at U of U Health, and a founding scientific advisor to Fabric.

Worldwide, about seven million infants are born with serious genetic disorders each year. For these children, life usually begins in intensive care. A handful of NICUs in the U.S., including at U of U Health, are now searching for genetic causes of disease by reading, or sequencing, the three billion DNA letters that make up the human genome. While it takes hours to sequence the whole genome, it can take days or weeks of computational and manual analysis to diagnose the illness.

Circa 2019 😀

Because they can process massive amounts of data, computers can perform analytical tasks that are beyond human capability. Google, for instance, is using its computing power to develop AI algorithms that assemble two-dimensional CT images of the lungs into a three-dimensional model and examine the entire structure to determine whether cancer is present. Radiologists, in contrast, have to look at these images individually and attempt to reconstruct them in their heads. Another Google algorithm can do something radiologists cannot do at all: determine patients’ risk of cardiovascular disease by looking at a scan of their retinas, picking up on subtle changes related to blood pressure, cholesterol, smoking history and aging. “There’s potential signal there beyond what was known before,” says Google product manager Daniel Tse.

The Black Box Problem

AI programs could end up revealing entirely new links between biological features and patient outcomes. A 2019 paper in JAMA Network Open described a deep-learning algorithm trained on more than 85,000 chest x-rays from people enrolled in two large clinical trials that had tracked them for more than 12 years. The algorithm scored each patient’s risk of dying during this period. The researchers found that 53 percent of the people the AI put into a high-risk category died within 12 years, as opposed to 4 percent in the low-risk category. The algorithm did not have information on who died or on the cause of death. The lead investigator, radiologist Michael Lu of Massachusetts General Hospital, says that the algorithm could be a helpful tool for assessing patient health if combined with a physician’s assessment and other data such as genetics.

AI is changing the way we live and the global balance of military power. Ex-Pentagon software chief Nicholas Chaillan said this month the U.S. has already lost out to China in military applications. Even 98-year-old Henry Kissinger weighs in on AI as co-author of a new book due next month, “The Age of AI: And Our Human Future.”

Kai-Fu Lee has been sizing up the implications for decades. The former Google executive turned venture capitalist looked at U.S.-China competition in his 2018 book, “AI Superpowers.” His new book, “AI 2041,” co-authored with science fiction writer Chen Qiufan, suggests how AI will bring sweeping changes to daily life in the next 20 years. I talked earlier this month to Lee, who currently oversees $2.7 billion of assets at Beijing-headquartered Sinovation Ventures. Sinovation has backed seven AI start-ups that have become “unicorns” worth more than $1 billion: AInnovation, 4Paradigm, Megvii, Momenta, WeRide, Horizon Robotics and Bitman. We discussed Lee’s new book, the investments he’s made based on his predictions in it, and where the U.S.-China AI rivalry now stands. Excerpts follow.


The strategy outlines how AI can be applied to defence and security in a protected and ethical way. As such, it sets standards of responsible use of AI technologies, in accordance with international law and NATO’s values. It also addresses the threats posed by the use of AI by adversaries and how to establish trusted cooperation with the innovation community on AI.

Artificial Intelligence is one of the seven technological areas which NATO Allies have prioritized for their relevance to defence and security. The other six are quantum-enabled technologies, data and computing, autonomy, biotechnology and human enhancements, hypersonic technologies, and space. Of all these dual-use technologies, Artificial Intelligence is known to be the most pervasive, especially when combined with others like big data, autonomy, or biotechnology. To address this complex challenge, NATO Defence Ministers also approved NATO’s first policy on data exploitation.

Individual strategies will be developed for all priority areas, following the same ethical approach as that adopted for Artificial Intelligence.

The truth is these systems aren’t masters of language. They’re nothing more than mindless “stochastic parrots.” They don’t understand a thing about what they say, and that makes them dangerous. They tend to “amplify biases and other issues in the training data” and regurgitate what they’ve read before, but that doesn’t stop people from ascribing intentionality to their outputs. GPT-3 should be recognized for what it is: a dumb, even if potent, language generator, and not a machine so close to us in humanness that we should call it “self-aware.”

On the other hand, we should ponder whether OpenAI’s intentions are honest and whether it has too much control over GPT-3. Should any company have absolute authority over an AI that could be used for so much good, or so much evil? What happens if it decides to shift from its initial promises and put GPT-3 at the service of its shareholders?


Not even our imagination will manage to keep up with technology’s pace.

Martha called his name again, “Ash!” But he wasn’t listening, as always. His eyes were fixed on the screen while he uploaded a smiley picture of a younger self. Martha joined him in the living room and pointed to his phone. “You keep vanishing. Down there.” Although annoying, Ash’s addiction didn’t prevent the young, loving couple from living an otherwise happy life.

The sun was already out, hidden behind the soft morning clouds, when Ash came down the stairs the next day. “Hey, get dressed! Van’s got to be back by two.” They had an appointment, but Martha’s new job couldn’t wait. After a playfully reluctant goodbye, he left and she began to draw on her virtual easel.