The effort aimed at identifying criminals from their mugshots raises serious ethical issues about how we should use artificial intelligence.

Machines figure in almost every social, political, cultural and economic issue under discussion today. Why, you ask? Because modern economies and demographic trends pivot around machines and factories of every scale.

We have reached a stage in the evolution of our civilization where we cannot imagine a day without machines or automated processes. Machines are used not only in manufacturing and agriculture but also in fields such as healthcare, electronics and other areas of research. Although machines of many kinds entered the industrial landscape long ago, technologies such as nanotechnology, the Internet of Things and Big Data have transformed that landscape in unprecedented ways.

The fusion of nanotechnology with conventional mechanical concepts gives rise to the idea of ‘molecular machines’. Expected to be a stepping stone toward a nanoscale industrial revolution, these microscopic machines are molecules designed with movable parts that behave much like the parts of ordinary machines. A nanoscale motor that spins in a given direction in the presence of directed heat and light is one example of a molecular machine.

New biomarkers for aging are good news for researchers!

“Given the high volume of data being generated in the life sciences, there is a huge need for tools that make sense of that data. As such, this new method will have widespread applications in unraveling the molecular basis of age-related diseases and in revealing biomarkers that can be used in research and in clinical settings. In addition, tools that help reduce the complexity of biology and identify important players in disease processes are vital not only to better understand the underlying mechanisms of age-related disease but also to facilitate a personalized medicine approach. The future of medicine is in targeting diseases in a more specific and personalized fashion to improve clinical outcomes, and tools like iPANDA are essential for this emerging paradigm,” said João Pedro de Magalhães, PhD, a trustee of the Biogerontology Research Foundation.

The algorithm, iPANDA, applies deep learning to complex gene expression data sets and signaling pathway activation data in order to analyze and integrate them. Its developers' proof-of-concept article demonstrates that the system can significantly reduce the noise and dimensionality of transcriptomic data sets and can identify patient-specific pathway signatures in breast cancer patients that characterize their response to Taxol-based neoadjuvant therapy.

The system represents a substantially new approach to analyzing microarray data sets, especially data obtained from multiple sources, and appears to be more scalable and robust than current approaches to analyzing transcriptomic, metabolomic and signalomic data from different sources. The system also has applications in rapid biomarker development and drug discovery, in discriminating between distinct biological and clinical conditions, in identifying functional pathways relevant to disease diagnosis and treatment, and ultimately in developing personalized treatments for age-related diseases.

In Brief:

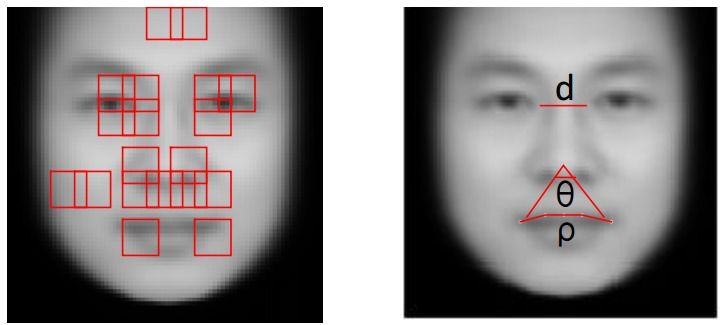

Machine learning with neural networks has been pushing artificial intelligence (AI) development to new heights. Most AI systems learn to do things from a set of labelled data provided by their human programmers. Parham Aarabi and Wenzhi Guo, engineers at the University of Toronto, Canada, have taken machine learning to a different level by developing an algorithm that can learn on its own, going beyond its training.

Intel today unveiled new hardware and software targeting the artificial intelligence (AI) market, which has emerged as a focus of investment for the largest data center operators. The chipmaker introduced an FPGA accelerator that offers more horsepower for companies developing new AI-powered services.

The Intel Deep Learning Inference Accelerator (DLIA) combines traditional Intel CPUs with field programmable gate arrays (FPGAs), semiconductors that can be reprogrammed to perform specialized computing tasks. FPGAs allow users to tailor compute power to specific workloads or applications.

The DLIA is the first hardware product emerging from Intel’s $16 billion acquisition of Altera last year. It was introduced at SC16 in Salt Lake City, Utah, the annual showcase for high performance computing hardware. Intel is also rolling out a beefier model of its flagship Xeon processor, and touting its Xeon Phi line of chips optimized for parallelized workloads.