New techniques have been used to make a robot hand, a flapping fish tail, and a coil that can retrieve a ball.

Nov 11 (Reuters) — Companies in North America added a record number of robots in the first nine months of this year as they rushed to speed up assembly lines and struggled to add human workers.

Factories and other industrial users ordered 29,000 robots, 37% more than during the same period last year, valued at $1.48 billion, according to data compiled by the industry group the Association for Advancing Automation. That surpassed the previous peak set in the same time period in 2017, before the global pandemic upended economies.
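As a rough back-of-the-envelope check on those figures, the sketch below derives two numbers the article does not state: the implied order count for the same period last year and the average order value per robot. It assumes the 37% increase applies to the unit count and that the $1.48 billion covers all 29,000 units; the variable names are illustrative only.

```python
# Figures quoted in the article (first nine months of the year).
robots_ordered = 29_000      # units ordered
growth = 0.37                # assumed to be growth in unit count, year over year
order_value_usd = 1.48e9     # assumed to be the total value of those orders

# Implied unit count for the same period last year (derived, not reported).
implied_prior = robots_ordered / (1 + growth)

# Average order value per robot (derived, not reported).
avg_value = order_value_usd / robots_ordered

print(f"Implied orders, same period last year: about {implied_prior:,.0f}")
print(f"Average order value per robot: about ${avg_value:,.0f}")
```

Under those assumptions, the numbers work out to roughly 21,000 robots in the prior-year period and about $51,000 per robot.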

The rush to add robots is part of a larger upswing in investment as companies seek to keep up with strong demand, which in some cases has contributed to shortages of key goods. At the same time, many firms have struggled to lure back workers displaced by the pandemic and view robots as an alternative to adding human muscle on their assembly lines.

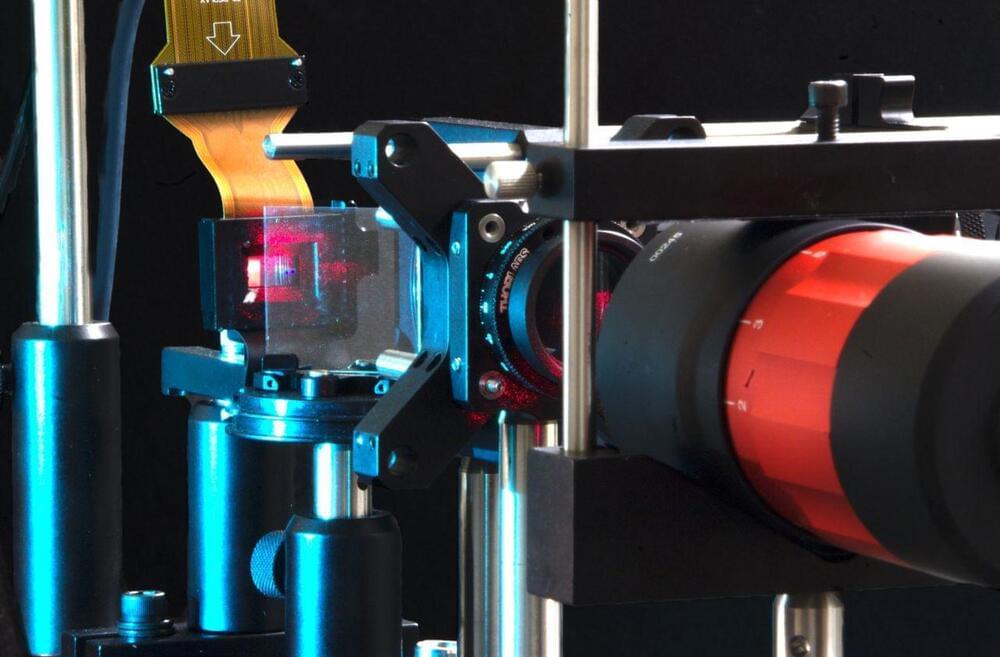

Virtual and augmented reality headsets are designed to place wearers directly into other environments, worlds, and experiences. While the technology is already popular among consumers for its immersive quality, there could be a future where the holographic displays look even more like real life. In their own pursuit of these better displays, the Stanford Computational Imaging Lab has combined their expertise in optics and artificial intelligence. Their most recent advances in this area are detailed in a paper published today (November 12, 2021) in Science Advances and work that will be presented at SIGGRAPH ASIA 2021 in December.

At its core, this research confronts the fact that current augmented and virtual reality displays only show 2D images to each of the viewer’s eyes, instead of 3D – or holographic – images like we see in the real world.

“They are not perceptually realistic,” explained Gordon Wetzstein, associate professor of electrical engineering and leader of the Stanford Computational Imaging Lab. Wetzstein and his colleagues are working to come up with solutions to bridge this gap between simulation and reality while creating displays that are more visually appealing and easier on the eyes.

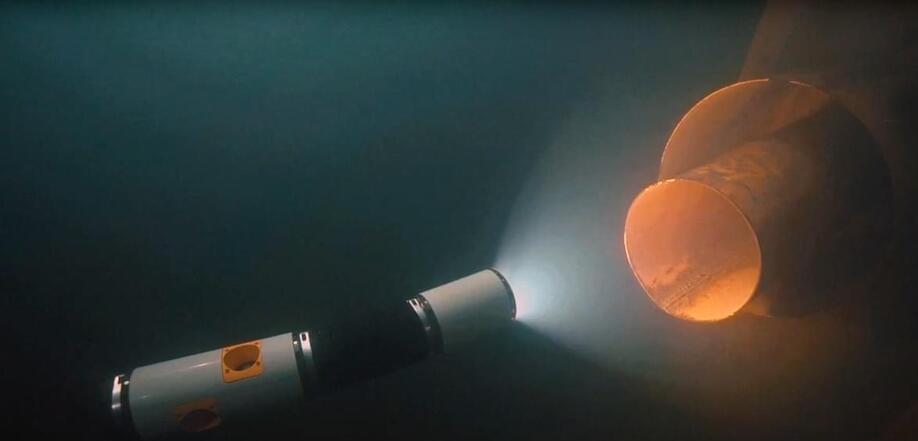

Elon Musk’s company Neuralink plans to insert computer chips into people’s brains, but what if there is a safer and even more performant way of merging humans and machines in the future?

Enter DARPA’s plan to support the emergence of non-invasive brain-computer interfaces, which led the organization Battelle to create a kind of neural dust to interface with our brains. It might be the first step toward having nanobots inside the human body.

How will Neuralink deal with a potential rival that has this cutting-edge technology? Its possibilities in full-dive virtual reality games, medical applications, and merging humans with artificial intelligence, along with its potential to scale around the world, are enormous.

If you enjoyed this video, please consider rating this video and subscribing to our channel for more frequent uploads. Thank you!

–

#neuralink #ai #elonmusk

–

Credits:

https://www.youtube.com/watch?v=PhzDIABahyc

https://www.bensound.com/

Imagine a world in which smart packaging for supermarket-ready meals updates you in real time about carbon footprints, gives live warnings on product recalls, and sends instant safety alerts when allergens are detected unexpectedly in the factory.

But how much extra energy would be used powering such a system? And what if an accidental alert meant you were told to throw away your food for no reason?

These are some of the questions asked by a team of researchers, including a Lancaster University Lecturer in Design Policy and Futures Thinking, who, by creating objects from a “smart” imaginary new world, are looking at the ethical implications of using artificial intelligence in the food sector.

Microsoft and Nvidia have been working to create an artificial intelligence model that surpasses OpenAI’s GPT-3, with more than double the parameter count and approaching the scale of 1-trillion-parameter models. Unless OpenAI comes out with GPT-4, the Megatron-Turing NLP model looks set to be the most capable artificial intelligence of 2021, with the most abilities of any natural language processing AI so far.

It’s also said to be much easier to train than GPT-3. It requires much less hardware, and with the upcoming Nvidia Lovelace GPUs it may become even easier for regular consumers to run.

–


TIMESTAMPS:

00:00 GPT-3 has been beaten.

02:09 How Transformers work.

03:53 What’s new about this AI Model?

05:45 The Future of Artificial Intelligence.

09:32 Last Words.

–

#ai #agi #microsoft

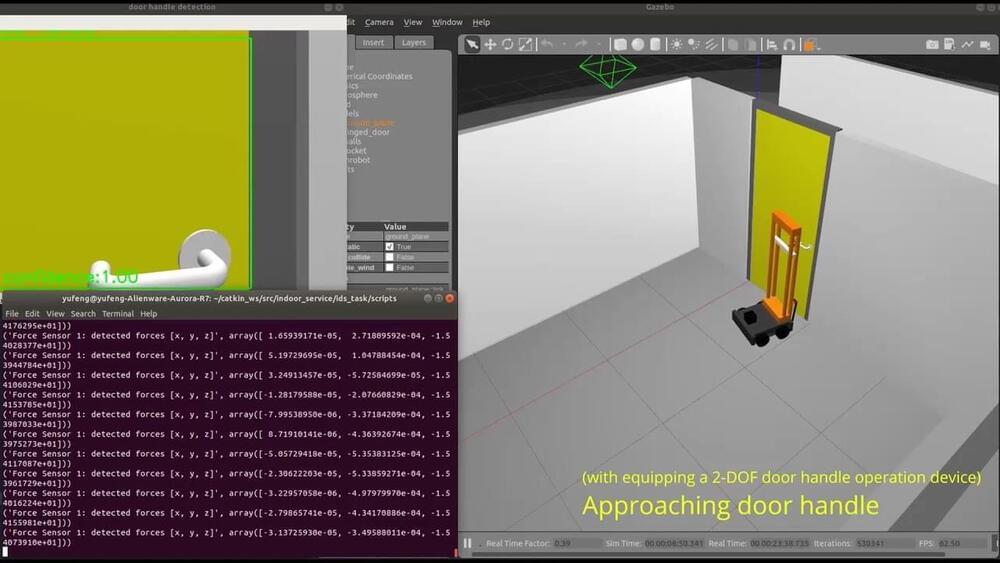

Engineering students have designed an autonomous robot that can find and open doors in 3D digital simulations. Now they’re building the hardware for an autonomous robot that not only can open its own doors but also can find the nearest electric wall outlet to recharge without human help.

One flaw in the notion that robots will take over the world is that the world is full of doors.

And doors are kryptonite to robots, said Ou Ma, an aerospace engineering professor at the University of Cincinnati.