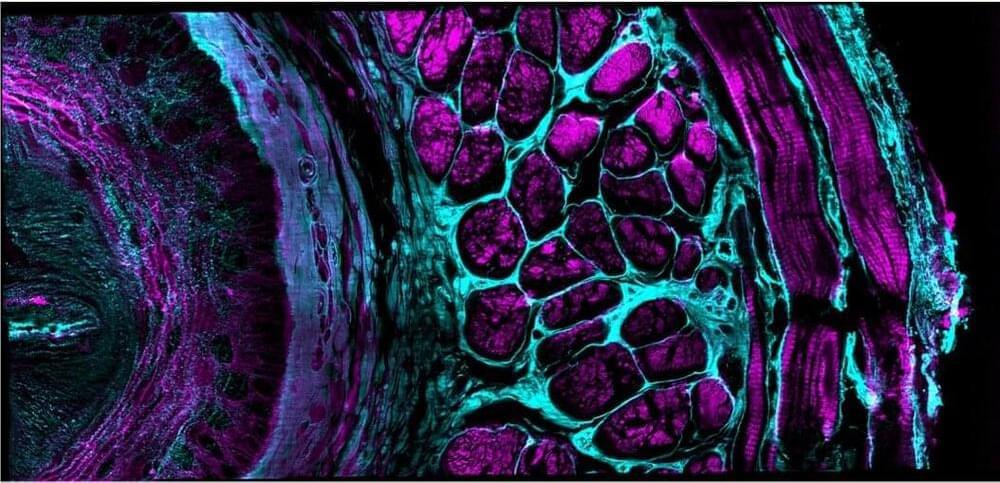

In this DNA factory, organism engineers are using robots and automation to build completely new forms of life.

Ginkgo Bioworks, a Boston company specializing in “engineering custom organisms,” aims to reinvent manufacturing, agriculture, biodesign, and more.

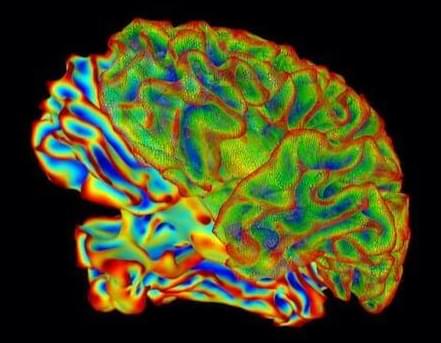

Biologists, software engineers, and automated robots are working side by side to speed up what nature does: they take synthetic DNA, remix it, and program microbes, turning custom organisms into mini-factories that could one day pump out new foods, fuels, and medicines.

This research could lead to many positive and exciting outcomes, such as engineering gut bacteria to produce drugs inside the human body on demand or building self-fertilizing plants, but the risk remains that engineered DNA sequences could carry a harmful, disease-causing function.

That’s why Ginkgo Bioworks is developing software, akin to malware detection, that aims to stamp out the global threat of biological weapons and keep synthetic biology from being used for evil.

Learn more about synthetic DNA and this biological assembly line on this episode of Focal Point.