Robot bees are no replacement for our vital pollinators here on Earth. Up on the International Space Station, however, robots bearing the bee name could help spacefaring humans save precious time.

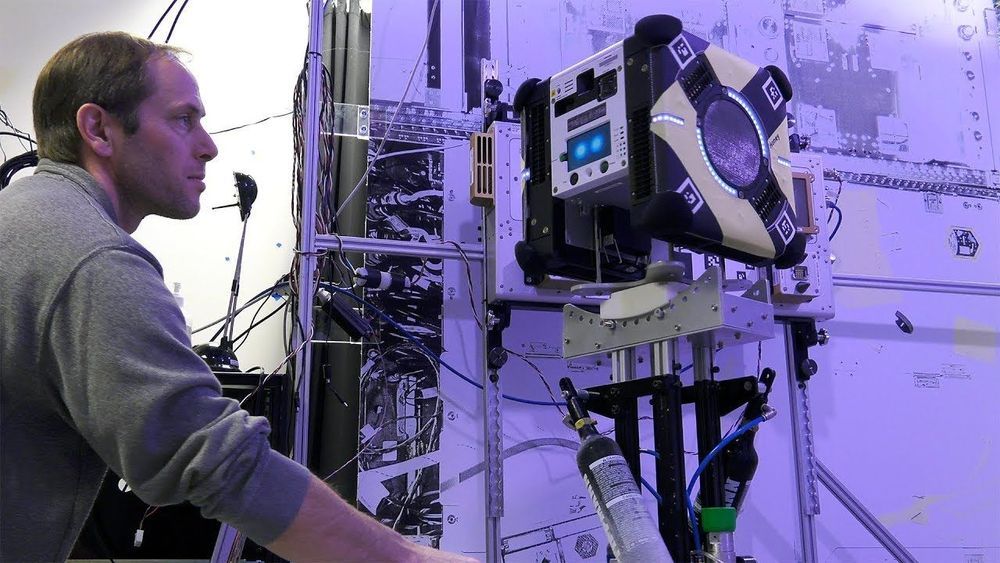

On Friday, NASA astronaut Anne McClain took one of the trio of Astrobees out for a spin. Bumble and its companion Honey arrived on the ISS a month ago and are now going through a series of checks. Bumble cleared the first hurdle when McClain flew it manually around the Japanese Experiment Module. Along the way, Bumble photographed the module, and those photos will be used to build a map for all the Astrobees, guiding them as they begin their tests there.

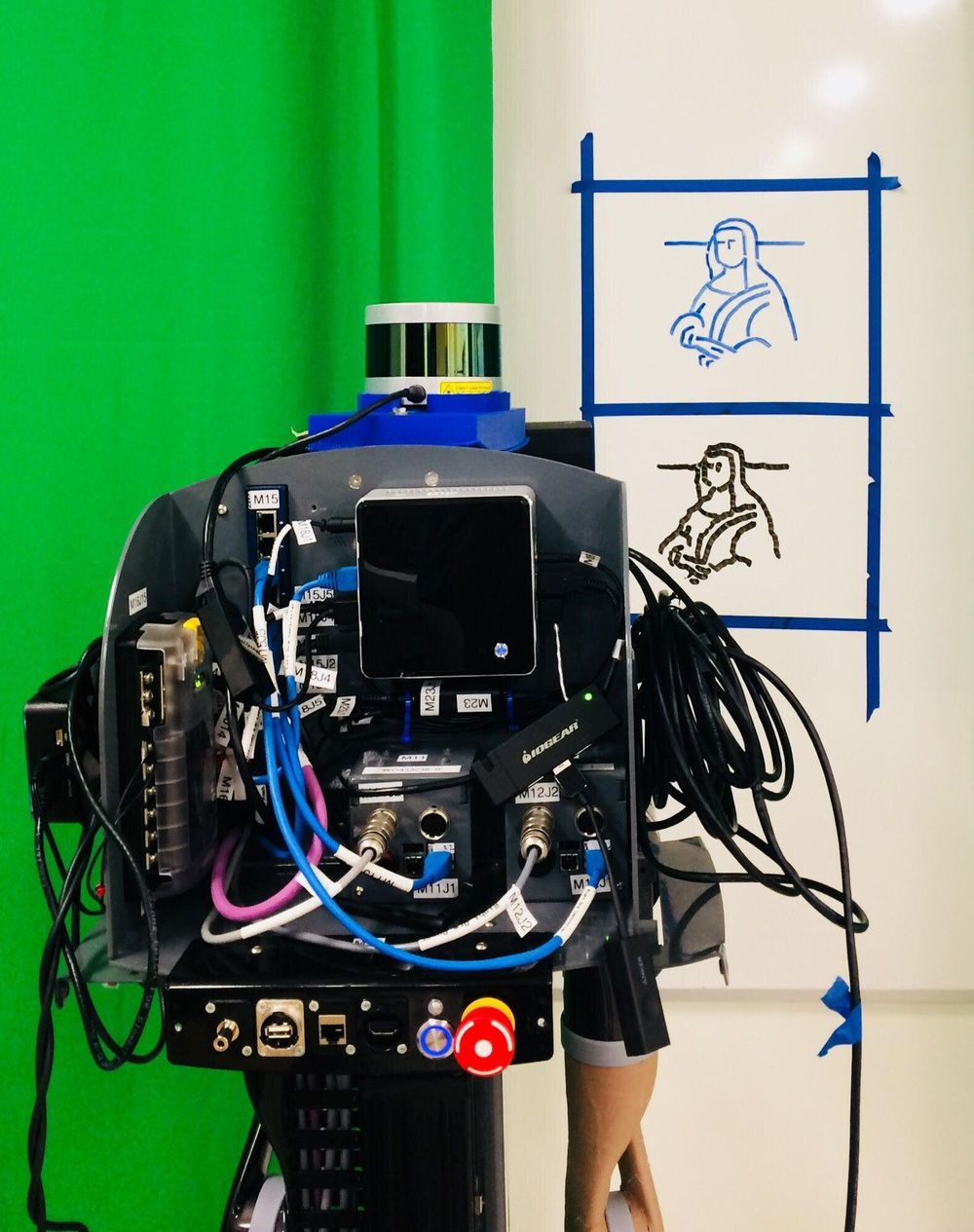

The three cube-shaped robots (Queen will arrive from Earth on the SpaceX resupply mission this July) don't look anything like their namesakes, but they are non-threatening by design, says Astrobee project manager Maria Bualat. Because they're built to fly around autonomously, doing tasks for the crew of the International Space Station, "one of our hardest problems is actually dealing with safety concerns," she says.