AI is Pandora’s box, it’s true…

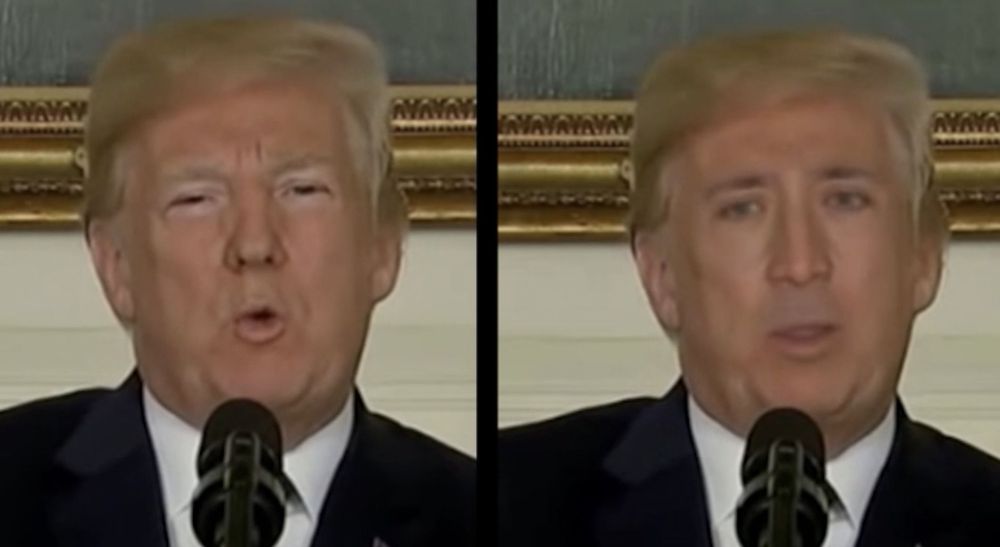

On the one hand, we can’t close it; on the other, our current direction is not good. And this is gonna get worse as AI starts making its own ‘creative’ decisions… the human overlords will claim it has nothing to do with them if and when things go wrong.

The solution for commercialization is actually quite simple.

If my dog, off the leash, attacks a child on the street, who is responsible?

The owner, of course. Although I dislike that word when it comes to anything living; maybe the dog’s human representative is a better term.

Business must be held accountable for its AI dogs off the leash; if necessary, keep the leash on for longer. So there is no need to stop commercialization, just reinstate the clear accountability that seems to be lacking today.

And in my view, this would actually be more profitable… Everyone happy then.

Getting its world premiere at documentary festival IDFA in Amsterdam, Tonje Hessen Schei’s gripping AI doc “iHuman” drew an audience of more than 700 to a 10 a.m. Sunday screening at the incongruously old-school Pathé Tuschinski cinema. Many had their curiosity piqued by the film’s timely subject matter—the erosion of privacy in the age of new media, and the terrifying leaps being made in the field of machine intelligence—but it’s fair to say that quite a few were drawn by the promise of a Skype Q&A with National Security Agency whistleblower Edward Snowden, who made headlines in 2013 by leaking confidential U.S. intelligence to the U.K.’s Guardian newspaper.

Snowden doesn’t feature in the film, but it couldn’t exist without him: “iHuman” is an almost exhausting journey through all the issues that Snowden was trying to warn us about, starting with our civil liberties. Speaking after the film, which he “very much enjoyed,” Snowden admitted that the subject was still raw for him, and that the writing of his autobiography (this year’s “Permanent Record”) had not been easy. “It was actually quite a struggle,” he revealed. “I had tried to avoid writing that book for a very long time, but when I looked at what was happening in the world and [saw] the direction of developments since I came forward [in 2013], I was haunted by these developments, so much so that I began to consider: what were the costs of silence? Which is [something] I understand very well, given my history.