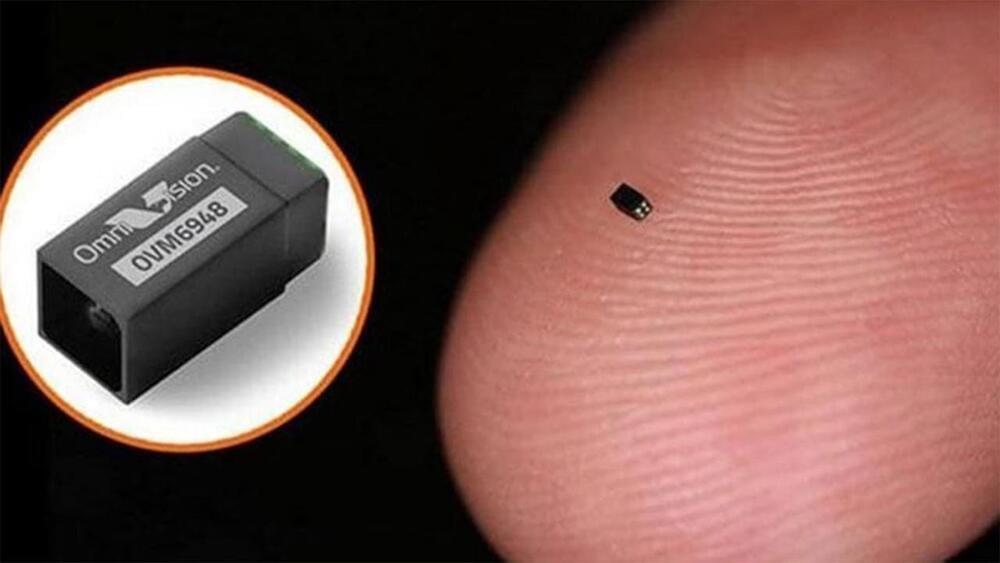

Scientists, with the help of next-generation artificial intelligence, have created the smallest and most efficient camera in the world. A specialist medical camera that measures just under a millimeter across has just entered the Guinness Book of Records. It is the camera's tiny sensor, about the size of a grain of sand, that is actually being entered into the world-famous record book as the smallest commercially available image sensor.

–

TIMESTAMPS:

00:00 A new leap in Materials Science.

00:57 How this new technology works.

03:45 Artificial Intelligence and Materials Science.

06:00 The Privacy Concerns of Tiny Cameras.

07:45 Last Words.

–

#ai #camera #technology