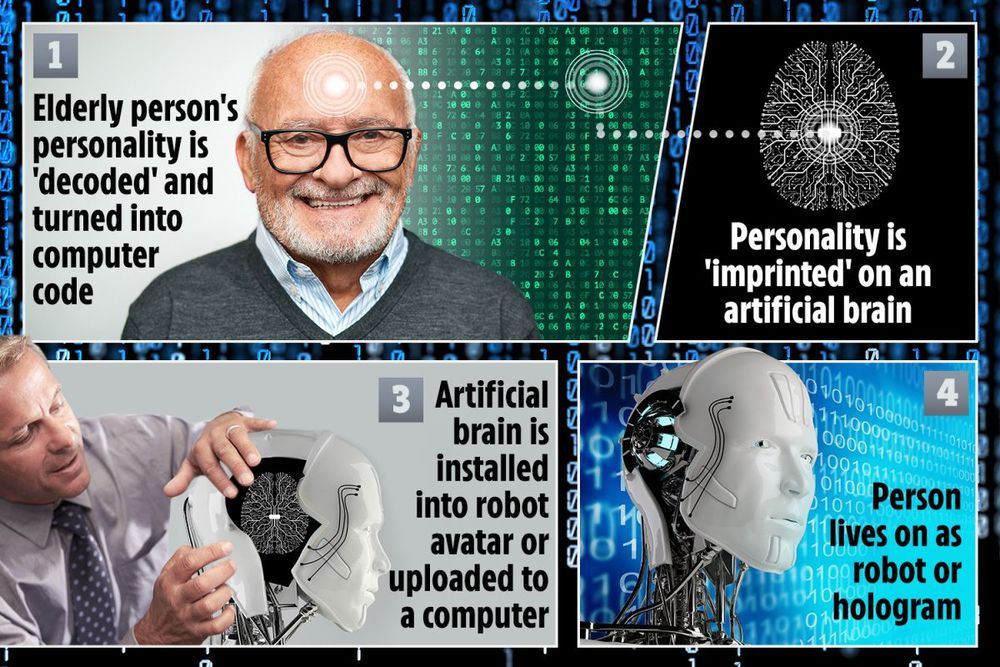

By definition, posthumanism (I choose to call it ‘cyberhumanism’) is set to replace transhumanism at center stage circa 2035. By then, mind uploading could become a reality through gradual neuronal replacement, rapid advancements in Strong AI, massively parallel computing, and nanotechnology that allows us to connect our brains directly to the Cloud-based infrastructure of the Global Brain (GB). Through our interactions with our AI assistants, the GB will know us better than we know ourselves in every respect, so mind transfer, or rather “mind migration,” could be seamless for billions of enhanced humans sometime by mid-century.

I hear this mantra over and over again: we don’t know what consciousness is. Clearly, there’s no consensus here, but in the context of the topic discussed, I would summarize my views as follows. Consciousness is non-local and quantum-computational by nature. There’s only one Universal Consciousness. We individualize our conscious awareness through the filter of our nervous system, our “local” mind, our very inner subjectivity. Consciousness itself, however, the self in a big sense, our “core” self, is universal, and knowing it through experience has been called enlightenment, illumination, awakening, or transcendence throughout the ages.

Any container with a sufficiently integrated network of information patterns and a certain optimal complexity, especially a complex dynamical system with a biological or artificial brain (say, the coming AGIs), could be filled with consciousness at large in order to host an individual “reality cell,” “unit,” or “node” of consciousness. Such an individuated unit of consciousness is always endowed with free will within the constraints of the applicable set of rules (“physical laws”), and is influenced by the dynamics of the larger consciousness system. Isn’t it too naïve to presume that Universal Consciousness would instantiate phenomenality only in the form of “bio”-logical avatars?