AI is revolutionizing the way we build video games.

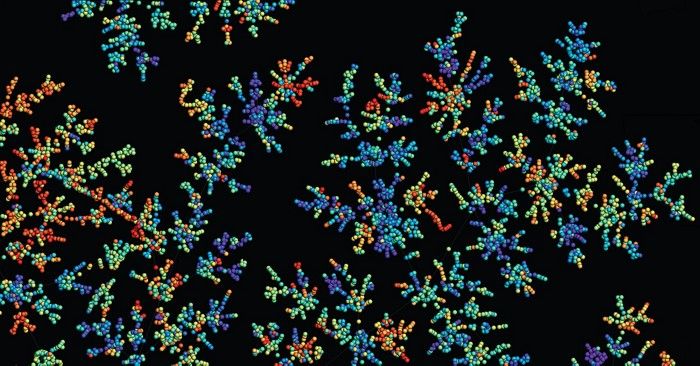

Chemical space contains every possible chemical compound. It includes every drug and material we know and every one we’ll find in the future. It’s practically infinite and can be frustratingly complex. That’s why some chemists are turning to artificial intelligence: AI can explore chemical space faster than humans, and it might be able to find molecules that would elude even expert scientists. But as researchers work to build and refine these AI tools, many questions remain about how AI can best help search chemical space and when AI will be able to assist the wider chemistry community.

Outer space isn’t the only frontier curious humans are investigating. Chemical space is the conceptual territory inhabited by all possible compounds. It’s where scientists have found every known medicine and material, and it’s where we’ll find the next treatment for cancer and the next light-absorbing substance for solar cells.

But searching chemical space is far from trivial. For one thing, it might as well be infinite. An upper estimate says it contains 10^180 compounds, more than twice the magnitude of the number of atoms in the universe. To put that figure in context, the CAS database, one of the world’s largest, currently contains about 10^8 known organic and inorganic substances, and scientists have synthesized only a fraction of those in the lab. (CAS is a division of the American Chemical Society, which publishes C&EN.) So we’ve barely seen past our own front doorstep into chemical space.

Robots for artists. 😃

What if you could instruct a swarm of robots to paint a picture? The concept may sound far-fetched, but a recent study in the open-access journal Frontiers in Robotics and AI has shown that it is possible. The robots in question move about a canvas leaving color trails in their wake, and in a first for robot-created art, an artist can select areas of the canvas to be painted a certain color and the robot team will oblige in real time. The technique illustrates the potential of robotics in creating art, and could be an interesting tool for artists.

Creating art can be labor-intensive and an epic struggle. Just ask Michelangelo about the Sistine Chapel ceiling. For a world increasingly dominated by technology and automation, creating physical art has remained a largely manual pursuit, with paint brushes and chisels still in common use. There’s nothing wrong with this, but what if robotics could lend a helping hand or even expand our creative repertoire?

“The intersection between robotics and art has become an active area of study where artists and researchers combine creativity and systematic thinking to push the boundaries of different art forms,” said Dr. María Santos of the Georgia Institute of Technology. “However, the artistic possibilities of multi-robot systems are yet to be explored in depth.”

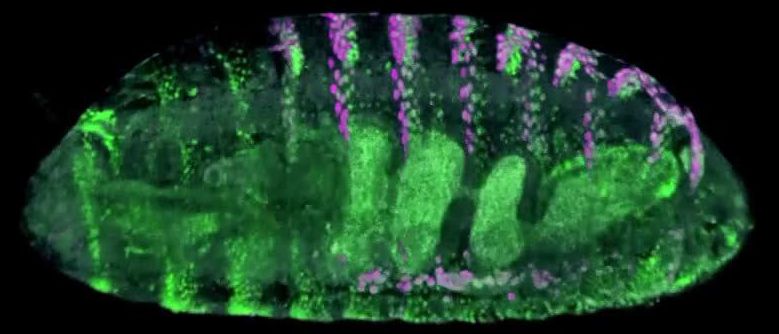

Robots are now assisting in advancing developmental biology.

The study of developmental biology is getting a robotic helping hand.

Scientists are using a custom robot to survey how mutations in regulatory regions of the genome affect animal development. These regions aren’t genes, but rather stretches of DNA called enhancers that determine how genes are turned on and off during development. The team describes the findings—and the robot itself—on October 14 in the journal Nature.

“The real star is this robot,” says David Stern, a group leader at HHMI’s Janelia Research Campus. “It was extremely creative engineering.”

Elon’s fears about AI are real.

— About ColdFusion –

ColdFusion is an Australian-based online media company independently run by Dagogo Altraide since 2009. Topics cover anything in science, technology, history and business in a calm and relaxed environment. GPT-3 certainly is something.

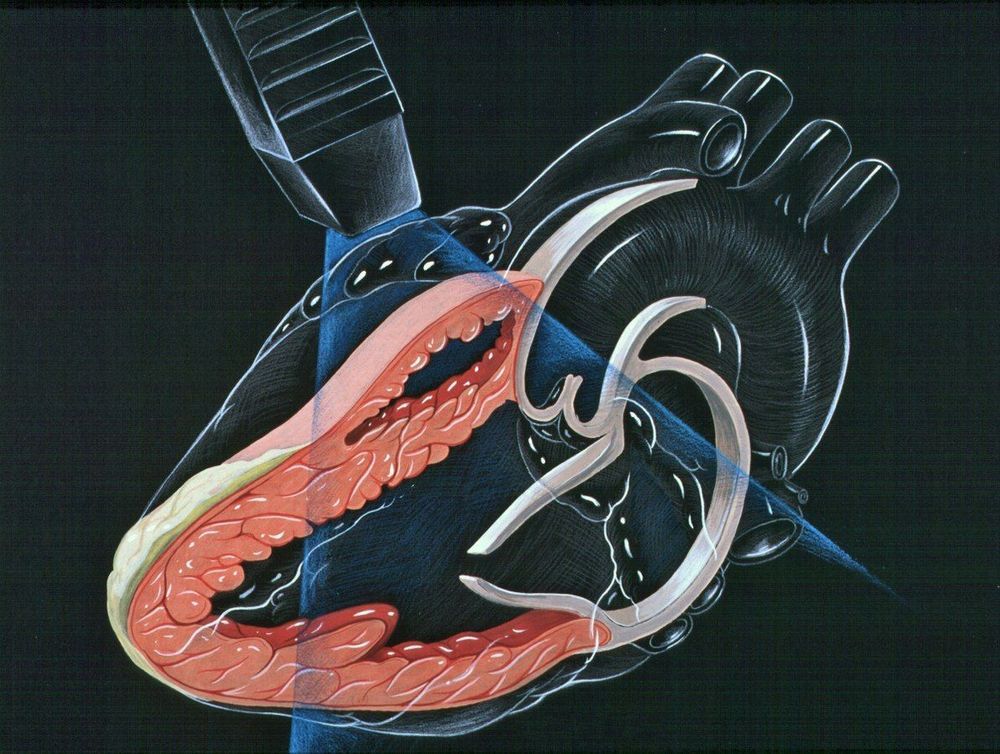

GE Healthcare has received 510(k) clearance from the US FDA for its Ultra Edition package on Vivid cardiovascular ultrasound systems, which come with features based on artificial intelligence (AI) that allow clinicians to acquire exams faster and more repeatably. Although methodical evaluations of heart function are necessary in echocardiography, they can be time-consuming and difficult to obtain. High-quality data acquisition and operator skill are essential to precise and thorough exams. Because patients undergo follow-up monitoring exams, reproducible evaluations are essential for tracking improvement or progression of the disease.

Interesting Eric Klien

That prompted the researchers, who are part of the Human Brain Project, to look at two features that have become clear in experimental neuroscience data: each neuron retains a memory of previous activity in the form of molecular markers that slowly fade with time; and the brain provides top-down learning signals using things like the neurotransmitter dopamine that modulates the behavior of groups of neurons.

In a paper in Nature Communications, the Austrian team describes how they created artificial analogues of these two features to create a new learning paradigm they call e-prop. While the approach learns more slowly than backpropagation-based methods, it achieves comparable performance.

More importantly, it allows online learning. That means that rather than processing big batches of data at once, which requires constant transfer to and from memory that contributes significantly to machine learning’s energy bills, the approach simply learns from data as it becomes available. That dramatically cuts the amount of memory and energy it requires, which makes it far more practical to use for on-chip learning in smaller mobile devices.
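To make the idea of online learning with a fading memory concrete, here is a toy Python sketch. It is not the e-prop algorithm from the paper: it only illustrates the two ingredients described above (a per-connection trace that slowly fades, and a top-down learning signal) on a single linear unit, updating after every sample instead of storing batches. The function names, the `decay` and `lr` values, and the synthetic data stream are all assumptions made for illustration.

```python
import random

def online_trace_learning(samples, n_inputs, decay=0.5, lr=0.05):
    """Toy online learner: one linear unit with one fading trace per weight."""
    weights = [0.0] * n_inputs
    trace = [0.0] * n_inputs  # fading memory of recent input activity
    for x, target in samples:  # one sample at a time; no batch is stored
        # The trace decays each step and is refreshed by the current input.
        trace = [decay * e + xi for e, xi in zip(trace, x)]
        prediction = sum(w * xi for w, xi in zip(weights, x))
        # Stand-in for a top-down learning signal: the signed output error.
        learning_signal = prediction - target
        # Local update: the learning signal times each weight's own trace.
        weights = [w - lr * learning_signal * e
                   for w, e in zip(weights, trace)]
    return weights

def make_stream(true_weights, n_steps, seed=0):
    """Hypothetical data source: random inputs labeled by a hidden linear rule."""
    rng = random.Random(seed)
    for _ in range(n_steps):
        x = [rng.gauss(0.0, 1.0) for _ in true_weights]
        yield x, sum(w * xi for w, xi in zip(true_weights, x))

true_weights = [0.5, -1.0, 2.0]
learned = online_trace_learning(make_stream(true_weights, 3000), n_inputs=3)
max_error = max(abs(a - b) for a, b in zip(learned, true_weights))
print(f"largest weight error after 3000 samples: {max_error:.6f}")
```

Because the learner consumes a generator, the only state it keeps between samples is the weights and the traces, which is the memory saving the paragraph above refers to.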

Summary: A new computational model predicts how information deep inside the brain could flow from one network to another, and how neural network clusters can self-optimize over time.

Source: USC

Researchers at the Cyber-Physical Systems Group at the USC Viterbi School of Engineering, in conjunction with the University of Illinois at Urbana-Champaign, have developed a new model of how information deep in the brain could flow from one network to another and how these neuronal network clusters self-optimize over time.

Artificial intelligence has arrived in our everyday lives—from search engines to self-driving cars. This has to do with the enormous computing power that has become available in recent years. But new results from AI research now show that simpler, smaller neural networks can be used to solve certain tasks even better, more efficiently, and more reliably than ever before.

An international research team from TU Wien (Vienna), IST Austria and MIT (USA) has developed a new artificial intelligence system based on the brains of tiny animals, such as threadworms. This novel AI system can control a vehicle with just a few artificial neurons. The team says that the system has decisive advantages over previous deep learning models: It copes much better with noisy input, and, because of its simplicity, its mode of operation can be explained in detail. It does not have to be regarded as a complex “black box”, but it can be understood by humans. This new deep learning model has now been published in the journal Nature Machine Intelligence.