Interest in optical computing platforms for artificial intelligence applications has recently reemerged. Optics is well suited to realizing neural network models because optical information processing offers high speed, large bandwidth and high interconnectivity. Introduced by UCLA researchers, Diffractive Deep Neural Networks (D2NNs) constitute one such optical computing framework, comprising successive transmissive and/or reflective diffractive surfaces that process input information through light-matter interaction. These surfaces are designed in a computer using standard deep learning techniques, and are then fabricated and assembled to build a physical optical network. Experiments performed at terahertz wavelengths demonstrated the capability of D2NNs to classify objects all-optically. Beyond object classification, D2NNs have also been shown to perform a range of optical design and computation tasks, including spectral filtering, spectral information encoding and optical pulse shaping.

In their latest paper, published in Light: Science & Applications, the UCLA team reports a leapfrog advance in D2NN-based image classification accuracy through ensemble learning. The key ingredient behind the success of their approach can be understood intuitively through an experiment by Sir Francis Galton (1822–1911), an English philosopher and statistician, who, while visiting a livestock fair, asked the participants to guess the weight of an ox. None of the hundreds of participants succeeded in guessing the weight. But to his astonishment, Galton found that the median of all the guesses, 1207 pounds, came within 1% of the true weight of 1198 pounds. This experiment reveals the power of combining many predictions to obtain a much more accurate one. Ensemble learning applies this idea to machine learning, where improved predictive performance is attained by combining multiple models.
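
The effect is easy to reproduce numerically. The sketch below uses synthetic guesses, not Galton's data: it assumes 800 independent, unbiased guesses scattered around the true weight with a Gaussian spread of 75 pounds (the crowd size and the spread are illustrative assumptions), and compares the error of the median with the typical error of a single guess.

```python
import random
import statistics

TRUE_WEIGHT = 1198  # pounds, the true weight quoted in the text
NUM_GUESSES = 800   # assumed crowd size (illustrative, not Galton's count)
NOISE_STD = 75      # assumed spread of individual guesses in pounds (illustrative)

rng = random.Random(0)  # fixed seed so repeated runs give the same numbers

# Synthetic crowd: each guess is the true weight plus independent Gaussian noise.
guesses = [rng.gauss(TRUE_WEIGHT, NOISE_STD) for _ in range(NUM_GUESSES)]

# Aggregate the crowd with the median, as in the anecdote.
crowd_estimate = statistics.median(guesses)
crowd_error = abs(crowd_estimate - TRUE_WEIGHT)

# Average absolute error of a single guess, for comparison.
typical_individual_error = statistics.mean(
    [abs(g - TRUE_WEIGHT) for g in guesses]
)

print(f"median of guesses:          {crowd_estimate:.1f} lb")
print(f"error of the median:        {crowd_error:.1f} lb")
print(f"typical individual error:   {typical_individual_error:.1f} lb")
```

The median is much closer to the truth than a typical individual guess because independent errors largely cancel when aggregated. If the guesses shared a common bias, aggregation would not remove it.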

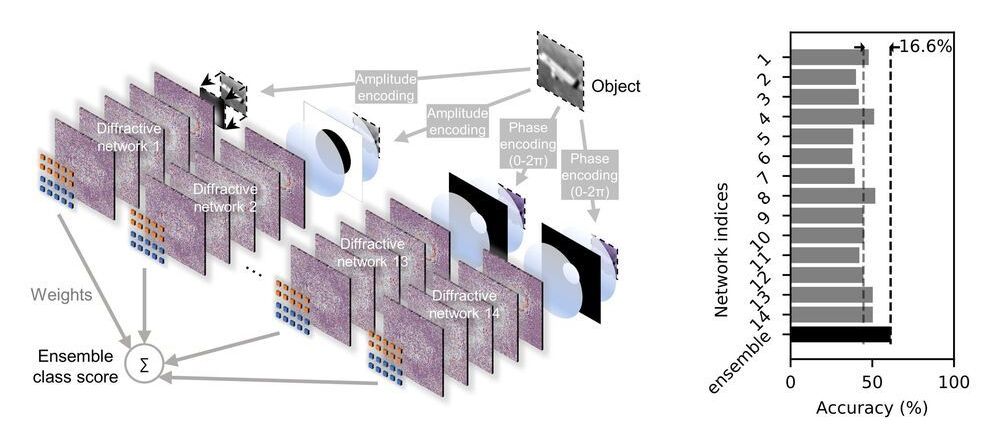

In their scheme, the UCLA researchers reported an ensemble formed by multiple D2NNs operating in parallel, each of which is individually trained and diversified by optically filtering its input using a variety of filters. A total of 1252 D2NNs, uniquely designed in this manner, formed the initial pool of networks, which was then reduced using an iterative pruning algorithm so that the resulting physical ensemble is not prohibitively large. The final prediction comes from a weighted average of the decisions of all the constituent D2NNs in an ensemble. The researchers evaluated the performance of the resulting D2NN ensembles on the CIFAR-10 image dataset, which contains 60,000 natural images categorized into 10 classes and is widely used for benchmarking machine learning algorithms. Simulations of their designed ensemble systems revealed that diffractive optical networks can benefit significantly from the ‘wisdom of the crowd’.
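
To make the two steps concrete (combining decisions by a weighted average, and pruning a pool down to a manageable ensemble), here is a minimal sketch. It is not the authors' method: the article does not describe the pruning algorithm or how the weights are chosen, so the sketch assumes uniform weights and a generic greedy backward elimination. Random noisy class scores stand in for D2NN outputs, and every name and number in it (`ensemble_predict`, `prune`, the pool size of 12, the target size of 5) is hypothetical.

```python
import random


def ensemble_predict(scores, members, weights):
    """Combine class scores of the chosen members and return predicted classes.

    scores[m][s][c] is the score model m gives class c for sample s.
    A weighted sum is used; dividing by the total weight to get a true
    weighted average would not change the argmax.
    """
    num_samples = len(scores[0])
    num_classes = len(scores[0][0])
    predictions = []
    for s in range(num_samples):
        combined = [
            sum(weights[m] * scores[m][s][c] for m in members)
            for c in range(num_classes)
        ]
        predictions.append(max(range(num_classes), key=combined.__getitem__))
    return predictions


def accuracy(predictions, labels):
    """Fraction of samples whose predicted class matches the label."""
    return sum(p == y for p, y in zip(predictions, labels)) / len(labels)


def prune(scores, labels, weights, target_size):
    """Greedy backward elimination (an assumed stand-in, not the paper's algorithm).

    Repeatedly drops the member whose removal leaves the highest ensemble
    accuracy, until only target_size members remain.
    """
    members = list(range(len(scores)))
    while len(members) > target_size:
        best_acc, best_drop = -1.0, None
        for m in members:
            trial = [k for k in members if k != m]
            acc = accuracy(ensemble_predict(scores, trial, weights), labels)
            if acc > best_acc:
                best_acc, best_drop = acc, m
        members.remove(best_drop)
    return members


# Synthetic stand-in for a pool of weak classifiers: each model scores the
# true class slightly higher on average, buried in independent noise.
rng = random.Random(0)
NUM_MODELS, NUM_SAMPLES, NUM_CLASSES = 12, 200, 10
labels = [rng.randrange(NUM_CLASSES) for _ in range(NUM_SAMPLES)]
scores = [
    [
        [(0.6 if c == y else 0.0) + rng.gauss(0, 0.5) for c in range(NUM_CLASSES)]
        for y in labels
    ]
    for _ in range(NUM_MODELS)
]
weights = [1.0] * NUM_MODELS  # uniform weights (assumption)

single_accs = [
    accuracy(ensemble_predict(scores, [m], weights), labels)
    for m in range(NUM_MODELS)
]
full_acc = accuracy(
    ensemble_predict(scores, list(range(NUM_MODELS)), weights), labels
)
kept = prune(scores, labels, weights, target_size=5)
pruned_acc = accuracy(ensemble_predict(scores, kept, weights), labels)

print(f"best single model accuracy: {max(single_accs):.2f}")
print(f"full pool of {NUM_MODELS}:            {full_acc:.2f}")
print(f"pruned ensemble of {len(kept)}:       {pruned_acc:.2f}")
```

For brevity the sketch prunes and evaluates on the same samples. A real study would select members on a validation set and report accuracy on held-out test data.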