From Cerebras Systems’ AI supercomputer to OpenAI’s language model GPT-3, these are the companies pushing machine learning to the edge.

Imagine this: In the far, far future, long after you’ve died, you’ll eventually come back to life. So will everyone else who ever had a hand in the history of human civilization. But in this scenario, returning from the dead is the relatively normal part. The journey home will be a hell of a lot weirder than the destination.

Here’s how it will go down: A megastructure called a Dyson Sphere will provide a superintelligent artificial intelligence (AI) with the enormous amounts of power it needs to collect as much historical and personal data about you as it can, so that it can rebuild an exact digital copy of you. Once it’s finished, you’ll live your whole life (again) in a simulated reality, and when the time comes for you to die (again), you’ll be transported into a simulated afterlife, à la Black Mirror’s “San Junipero,” where you’ll get to hang out with your friends, family, and favorite celebrities forever.

Yes, this is mind-boggling. But someday, it might also be very real.

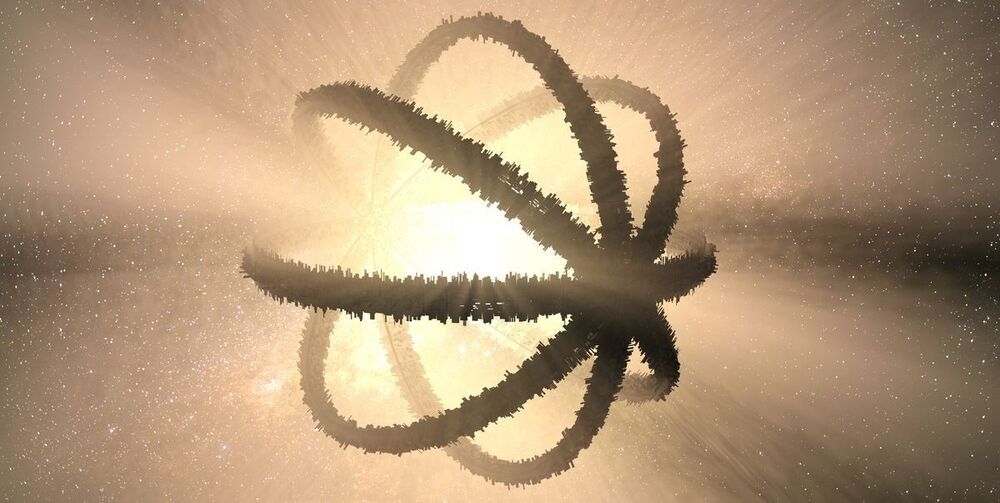

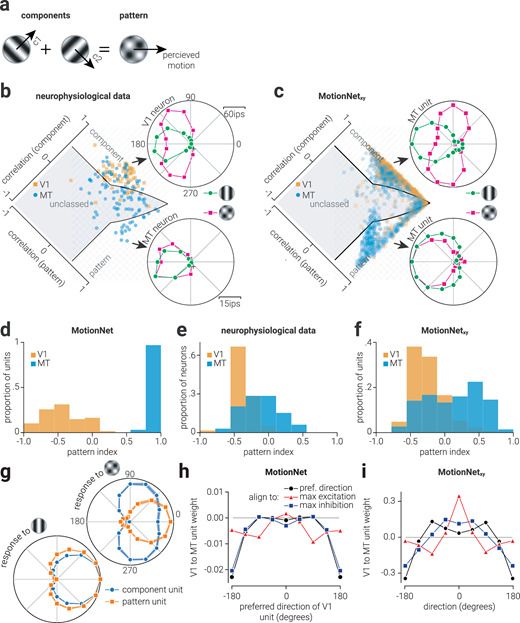

OpenAI, the research company co-founded by Elon Musk, has found that its artificial neural network CLIP contains neurons that behave strikingly like neurons in the human brain. The finding has scientists hopeful about AI networks’ ability to identify images symbolically, conceptually and literally.

The human brain processes visual imagery by linking a series of abstract concepts to an overarching theme, and the first biological neuron recorded operating this way was the “Halle Berry” neuron. This neuron responded to photographs and sketches of the actress and connected those images with the name “Halle Berry.”

Now, OpenAI’s multimodal vision system shows comparable traits while continuing to outperform existing systems. One example is the “Spider-Man” neuron, an artificial neuron that responds not only to images of the text “spider” but also to the comic book character in both illustrated and live-action form. This ability to recognize a single concept across different contexts demonstrates CLIP’s capacity for abstraction, which, as in the human brain, lets a vision system tie a range of images and text to a central theme.
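CLIP’s real neurons are learned units inside a large network, so the following is only a toy analogy, not OpenAI’s code. It assumes that photos, drawings and text have already been mapped into one shared embedding space, and it models a hypothetical “concept unit” as a fixed direction in that space whose response is the cosine similarity with an input’s embedding. Every vector below is a made-up number chosen for illustration; the point is that one unit can respond strongly to very different depictions of the same concept while staying quiet for an unrelated input.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical direction for a "Spider-Man" concept unit (made-up numbers).
concept_unit = [0.9, 0.4, 0.1]

# Hypothetical embeddings of different inputs in the same shared space.
inputs = {
    "photo of Spider-Man costume": [0.85, 0.45, 0.05],
    "comic-book drawing of Spider-Man": [0.80, 0.50, 0.20],
    "image of the text 'spider'": [0.70, 0.30, 0.30],
    "photo of a teapot": [0.05, 0.10, 0.95],
}


def unit_activation(embedding, threshold=0.8):
    """Return the unit's response and whether it 'fires' at or above the threshold."""
    score = cosine(embedding, concept_unit)
    return score, score >= threshold


for name, emb in inputs.items():
    score, fires = unit_activation(emb)
    print(f"{name:35s} score={score:.2f} fires={fires}")
```

Lowering `threshold` makes the hypothetical unit fire more broadly; in a trained network, the concept direction is learned from data rather than hand-picked.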

Scientists have taken a major step forward in harnessing machine learning to accelerate the design of better batteries: Instead of using it just to speed up scientific analysis by looking for patterns in data, as researchers generally do, they combined it with knowledge gained from experiments and equations guided by physics to discover and explain a process that shortens the lifetimes of fast-charging lithium-ion batteries.

It is the first time this approach, known as “scientific machine learning,” has been applied to battery cycling, said Will Chueh, an associate professor at Stanford University and investigator with the Department of Energy’s SLAC National Accelerator Laboratory who led the study. He said the results overturn long-held assumptions about how lithium-ion batteries charge and discharge and give researchers a new set of rules for engineering longer-lasting batteries.

The research, reported today in Nature Materials, is the latest result from a collaboration between Stanford, SLAC, the Massachusetts Institute of Technology and Toyota Research Institute (TRI). The goal is to bring together foundational research and industry know-how to develop a long-lived electric vehicle battery that can be charged in 10 minutes.

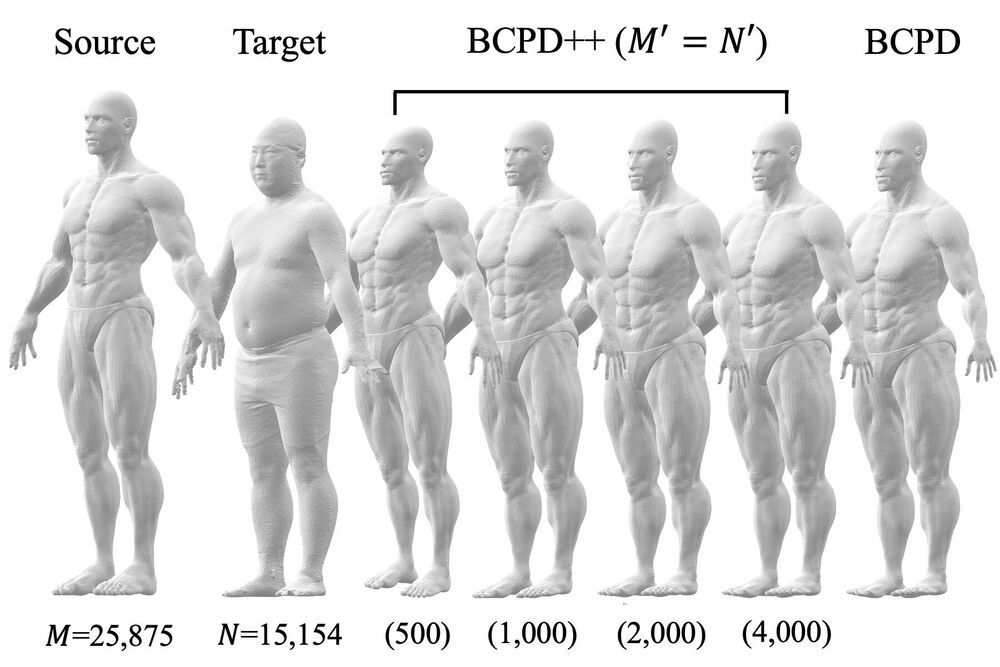

Non-rigid point set registration is the process of finding a spatial transformation that aligns two shapes represented as a set of data points. It has extensive applications in areas such as autonomous driving, medical imaging, and robotic manipulation. Now, a method has been developed to speed up this procedure.
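The article does not describe the new method itself, but a small sketch can show what “non-rigid” means in practice. The code below warps one point set toward another using a smooth displacement field built from Gaussian kernels, a common way to model non-rigid motion. It is a simplification under a loud assumption: the correspondence between points is taken as known (`source[i]` matches `target[i]`), whereas real registration methods must estimate it. The function names and the `beta` (kernel width) and `lam` (smoothness penalty) parameters are hypothetical choices for this sketch.

```python
import math


def gaussian_kernel(points, beta):
    """N x N matrix with G[i][j] = exp(-||p_i - p_j||^2 / (2 * beta^2))."""
    n = len(points)
    G = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            d2 = sum((a - b) ** 2 for a, b in zip(points[i], points[j]))
            G[i][j] = math.exp(-d2 / (2.0 * beta ** 2))
    return G


def solve(A, B):
    """Solve A X = B by Gaussian elimination with partial pivoting (A: n x n, B: n x m)."""
    n, m = len(A), len(B[0])
    M = [row[:] + brow[:] for row, brow in zip(A, B)]  # augmented copy; inputs untouched
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + m):
                M[r][c] -= f * M[col][c]
    X = [[0.0] * m for _ in range(n)]
    for r in range(n - 1, -1, -1):  # back-substitution
        for k in range(m):
            s = M[r][n + k] - sum(M[r][c] * X[c][k] for c in range(r + 1, n))
            X[r][k] = s / M[r][r]
    return X


def register_nonrigid(source, target, beta=1.0, lam=0.1):
    """Warp `source` toward `target` with a smooth Gaussian-kernel displacement field.

    Assumes known correspondences. Solves (G + lam*I) W = target - source,
    then moves each point by its displacement (G W)[i].
    """
    n, d = len(source), len(source[0])
    G = gaussian_kernel(source, beta)
    A = [[G[i][j] + (lam if i == j else 0.0) for j in range(n)] for i in range(n)]
    residual = [[target[i][k] - source[i][k] for k in range(d)] for i in range(n)]
    W = solve(A, residual)  # kernel weights, n x d
    return [[source[i][k] + sum(G[i][j] * W[j][k] for j in range(n)) for k in range(d)]
            for i in range(n)]


def rms(a, b):
    """Root-mean-square distance between corresponding points."""
    return math.sqrt(sum((x - y) ** 2 for p, q in zip(a, b) for x, y in zip(p, q)) / len(a))


# Toy data: a straight row of points bent into a gentle arc.
source = [[float(x), 0.0] for x in range(6)]
target = [[float(x), 0.1 * (x - 2.5) ** 2] for x in range(6)]
warped = register_nonrigid(source, target, beta=1.5, lam=0.01)
print("before:", rms(source, target), "after:", rms(warped, target))
```

A larger `lam` favors smoother, less exact warps, while `beta` controls how far each point’s influence spreads to its neighbors.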

In a study published in IEEE Transactions on Pattern Analysis and Machine Intelligence, a researcher from Kanazawa University has demonstrated a technique that reduces the computing time for non-rigid point set registration relative to other approaches.

Previous methods to accelerate this process have been computationally efficient only for shapes described by small point sets (containing fewer than 100,000 points). Consequently, the use of such approaches in applications has been limited. This latest research aimed to address this drawback.
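A back-of-the-envelope calculation suggests why large point sets are demanding. Assuming, purely for illustration, a method that stores a dense N × N matrix of pairwise values as 64-bit floats (8 bytes each), memory grows with N²: at N = 100,000 that is 100,000² × 8 bytes = 8 × 10¹⁰ bytes, or 80 GB, and a direct solve of a linear system that size scales roughly with N³. The helper name below is hypothetical.

```python
def dense_matrix_bytes(n_points: int, bytes_per_entry: int = 8) -> int:
    """Bytes needed to store a dense n_points x n_points matrix (float64 by default)."""
    return n_points * n_points * bytes_per_entry


for n in (1_000, 10_000, 100_000):
    gigabytes = dense_matrix_bytes(n) / 1e9  # decimal gigabytes
    print(f"N = {n:>7,}: {gigabytes:,.3f} GB")
```

Because the cost is quadratic, a tenfold increase in points (10,000 to 100,000) multiplies the memory a hundredfold.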

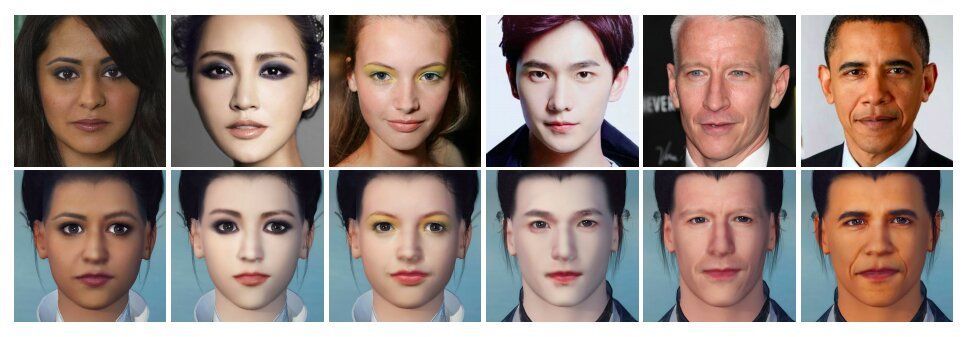

In recent years, videogame developers and computer scientists have been trying to devise techniques that can make gaming experiences increasingly immersive, engaging and realistic. These include methods to automatically create videogame characters inspired by real people.

Most existing methods to create and customize videogame characters require players to adjust the features of their character’s face manually in order to recreate their own face or the faces of other people. More recently, some developers have tried to build methods that automatically customize a character’s face by analyzing images of real people’s faces. However, these methods are not always effective and do not always reproduce the faces they analyze realistically.

Researchers at NetEase Fuxi AI Lab and the University of Michigan have recently created MeInGame, a deep learning technique that can automatically generate character faces by analyzing a single portrait of a person’s face. This technique, presented in a paper pre-published on arXiv, can be easily integrated into most existing 3D videogames.

Large language models are already business propositions. Google uses them to improve its search results and language translation; Facebook, Microsoft and Nvidia are among other tech firms that make them. OpenAI keeps GPT-3’s code secret and offers access to it as a commercial service. (OpenAI is legally a non-profit company, but in 2019 it created a for-profit subentity called OpenAI LP and partnered with Microsoft, which invested a reported US$1 billion in the firm.) Developers are now testing GPT-3’s ability to summarize legal documents, suggest answers to customer-service enquiries, propose computer code, run text-based role-playing games or even identify at-risk individuals in a peer-support community by labelling posts as cries for help.

A remarkable AI can write like humans — but with no understanding of what it’s saying.