Circa 2020

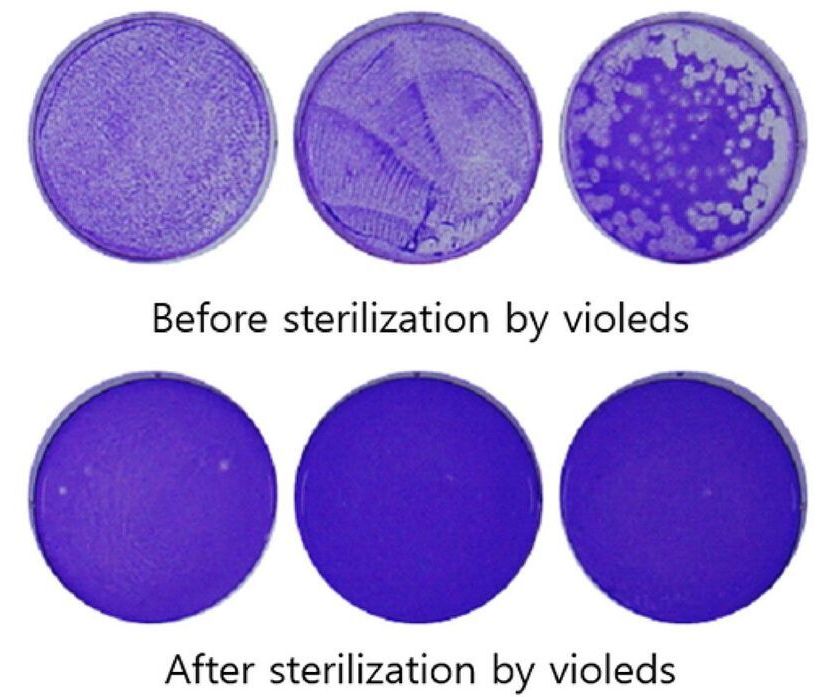

Robots and stranger machines have been using a particular band of ultraviolet light to sterilize surfaces that might be contaminated with coronavirus. Those that must decontaminate large spaces, such as hospital rooms or aircraft cabins, use large, power-hungry mercury lamps to produce ultraviolet-C light. Companies around the world are working to improve UV-C-producing LEDs to offer a more compact and efficient alternative. Earlier this month, Seoul Viosys showed what it says is the first 99.9 percent sterilization of SARS-CoV-2, the coronavirus that causes COVID-19, using ultraviolet LEDs.

UV-C light is deadly to viruses and bacteria because the 100–280 nanometer C-band shreds genetic material. Unfortunately, it's also strongly absorbed by oxygen and ozone in the air, so sources have to be powerful to have an effect at a distance. (Air is such a strong barrier that the sun's UV-C doesn't reach the Earth's surface.) Working with researchers at Korea University, in Seoul, the company showed that its Violed LED modules could eliminate 99.9 percent of the SARS-CoV-2 virus using a 30-second dose from a distance of three centimeters.
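Disinfection results like "99.9 percent" are often restated as a log reduction, which makes them easier to compare across studies. The short sketch below shows that conversion; the function name is illustrative and does not come from the company or the researchers.

```python
import math

def log_reduction(percent_eliminated: float) -> float:
    """Convert a percentage of virus eliminated into a log10 reduction.

    Illustrative helper only: 90% -> 1 log, 99% -> 2 log, 99.9% -> 3 log.
    """
    surviving_fraction = 1.0 - percent_eliminated / 100.0
    if surviving_fraction <= 0.0:
        raise ValueError("100 percent elimination has no finite log reduction")
    return -math.log10(surviving_fraction)

# The reported 99.9 percent corresponds to roughly a 3-log reduction.
print(round(log_reduction(99.9), 2))
```

In other words, the reported result means about one viral particle in a thousand survived the 30-second exposure.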

Unfortunately, the company did not disclose how many of its LEDs were used to achieve that. Assuming that it and the university researchers used a single Violed CMD-FSC-CO1A integrated LED module, a 30-second dose would have delivered at most 600 millijoules of energy. This is somewhat in line with expectations. A study of UV-C's ability to kill influenza A viruses on N95 respirator masks indicated that about 1 joule per square centimeter would do the job.
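The arithmetic behind that comparison can be sketched in a few lines. The 600 millijoules and 30 seconds come from the estimate above; the 20 milliwatt figure is simply what those two numbers imply, not a quoted specification. The 1 square centimeter target area is an assumption made purely for illustration, since the illuminated area in the test was not disclosed.

```python
def implied_power_mw(energy_mj: float, seconds: float) -> float:
    """Average radiant power (mW) implied by an energy (mJ) over a duration (s)."""
    return energy_mj / seconds

def fluence_mj_per_cm2(energy_mj: float, area_cm2: float) -> float:
    """Dose per unit area (mJ/cm^2), assuming all emitted energy lands on the area."""
    return energy_mj / area_cm2

ENERGY_MJ = 600.0            # upper bound from the article's estimate
EXPOSURE_S = 30.0            # dose duration reported by the company
ASSUMED_AREA_CM2 = 1.0       # ASSUMPTION: hypothetical target area, not disclosed
REFERENCE_MJ_PER_CM2 = 1000  # about 1 J/cm^2 from the N95 influenza study

power = implied_power_mw(ENERGY_MJ, EXPOSURE_S)
dose = fluence_mj_per_cm2(ENERGY_MJ, ASSUMED_AREA_CM2)

print(f"Implied radiant power: {power:.0f} mW")
print(f"Dose over assumed area: {dose:.0f} mJ/cm^2")
print(f"Fraction of reference dose: {dose / REFERENCE_MJ_PER_CM2:.2f}")
```

Under that assumed area, the dose comes to about 0.6 joules per square centimeter, the same order of magnitude as the 1 joule per square centimeter reference. A larger illuminated area, or light that misses the target, would lower the figure, which is why 600 millijoules is an upper bound.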
