Circa 2020.

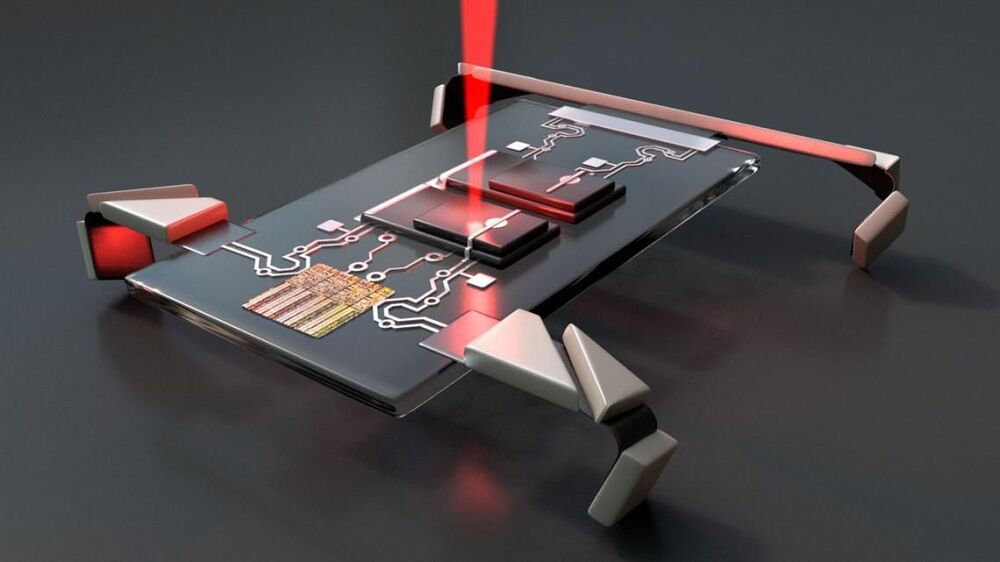

Every robot is, at its heart, a computer that can move. That is true from the largest plane-sized flying machines down to the smallest controllable nanomachines, which may someday be small enough to navigate through blood vessels.

New research, published August 26 in Nature, shows that it is possible to build legs into robots mere microns in length. When powered by lasers, these tiny machines can move, and someday they may save lives in operating rooms or possibly even on the battlefield.

This project, funded in part by the Army Research Office and the Air Force Office of Scientific Research, demonstrated that, by adapting principles from origami, researchers could print nano-scale legged robots and then direct their movement.