# On the Opportunities and Risks of Foundation Models

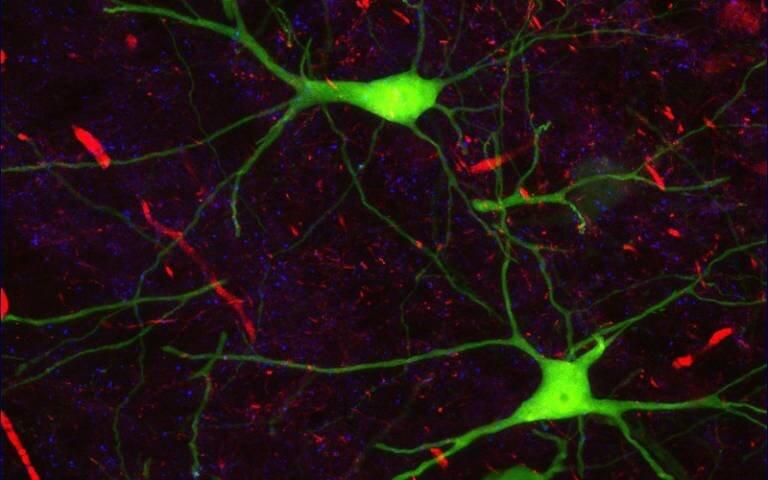

AI is undergoing a paradigm shift with the rise of models (e.g., BERT, DALL-E, GPT-3) that are trained on broad data at scale and are adaptable to a wide range of downstream tasks. We call these models foundation models to underscore their critically central yet incomplete character.

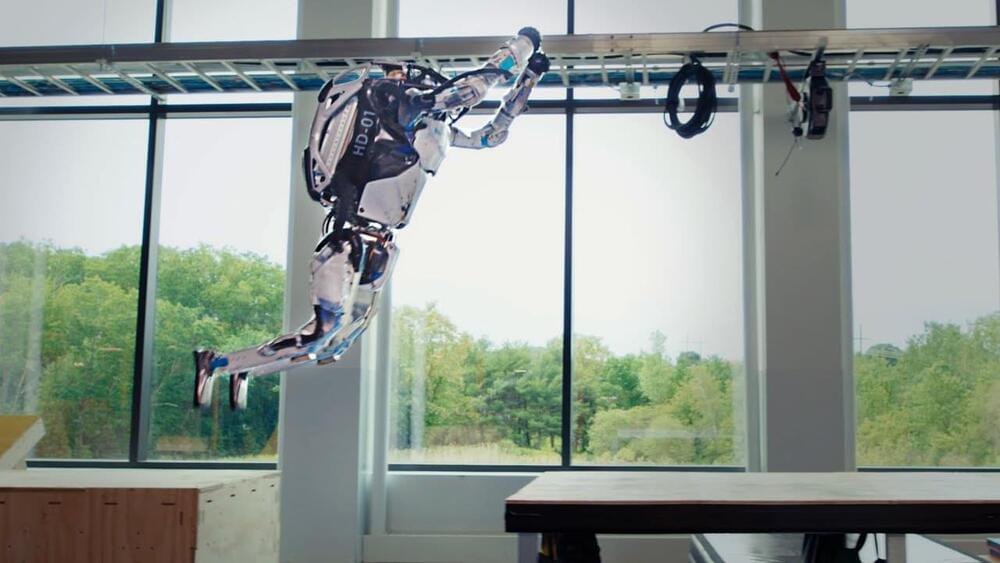

This report provides a thorough account of the opportunities and risks of foundation models, ranging from their capabilities (e.g., language, vision, robotics, reasoning, human interaction) and technical principles (e.g., model architectures, training procedures, data, systems, security, evaluation, theory) to their applications (e.g., law, healthcare, education) and societal impact (e.g., inequity, misuse, economic and environmental impact, legal and ethical considerations).

Though foundation models are based on conventional deep learning and transfer learning, their scale results in new emergent capabilities, and their effectiveness across so many tasks incentivizes homogenization.

Homogenization provides powerful leverage but demands caution, as the defects of the foundation model are inherited by all the adapted models downstream.

Despite the impending widespread deployment of foundation models, we currently lack a clear understanding of how they work, when they fail, and what they are even capable of due to their emergent properties.

To tackle these questions, we believe much of the critical research on foundation models will require deep interdisciplinary collaboration commensurate with their fundamentally sociotechnical nature.

## Original Full Paper (211 pages)

https://arxiv.org/pdf/2108.07258.pdf

Thanks to Folkstone Design Inc.

#AI #ML #FoundationModels #ComputerAndSociety #SocialImplications

Photo by yuyeung lau on Unsplash.

https://arxiv.org/abs/2108.07258