Short Bytes: Artificial Intelligence holds a special place in the future of humanity. Many tech giants, including Facebook, have long been working on improving AI to make lives better. Facebook has now decided to reveal its milestones in Artificial Intelligence research in the form of a progress report.

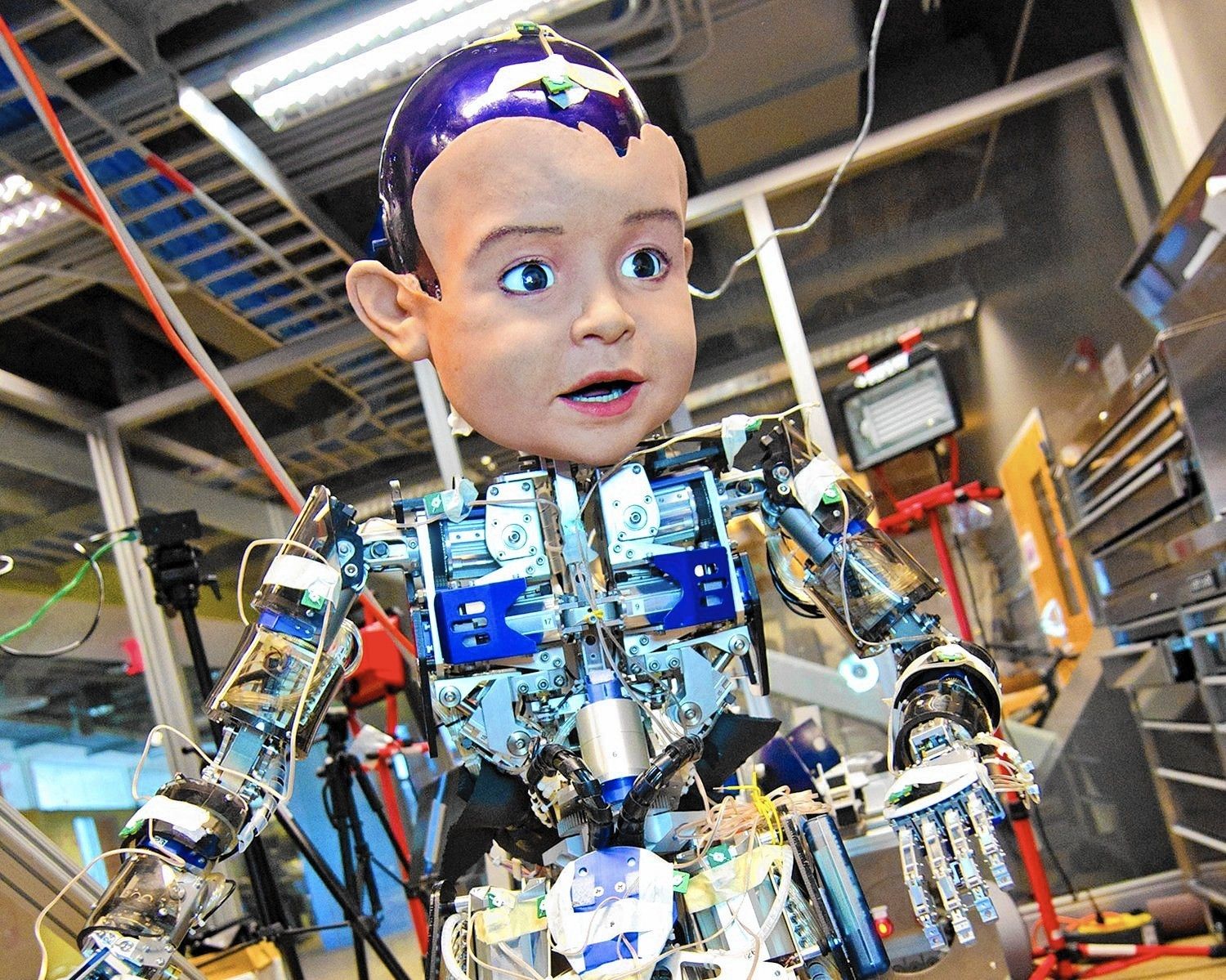

It doesn’t matter whether you are scared of AI, like Elon Musk or Stephen Hawking, or whether you share the view of Google’s chief of Artificial Intelligence that computers are remarkably dumb. Companies are still working through the byzantine process of training machines and creating algorithms modeled on the human brain. Meanwhile, Facebook has just announced its progress report.

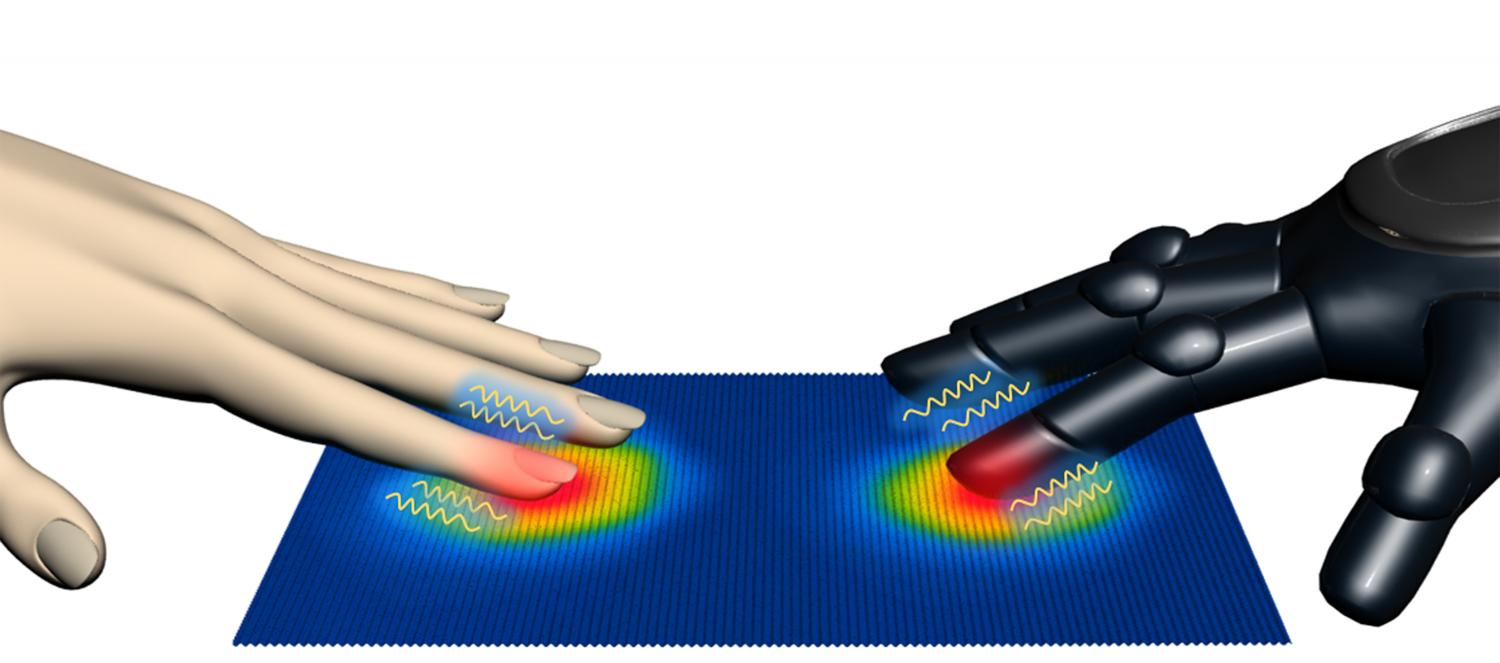

Facebook’s AI research team (FAIR) will present its report card at NIPS, an Artificial Intelligence conference, and reveal the team’s achievements with its state-of-the-art systems. Facebook has been trying to improve image recognition and has created a system that speeds up the process by 30% while using 10 times less training data than previous benchmarks.