International Business Machines, still the legal name of century-plus-old IBM, has managed over the years to pull off a dubious feat. Despite selling goods and services in one of the most dynamic industries in the world, the IT sector the company helped create, it has managed to avoid growing.

The company that was synonymous with mainframes, that dominated the early days of the personal computer (a “PC” once meant a device that ran software built to IBM’s technical standards), and that reinvented itself as a tech-consulting goliath, lagged while upstarts and a few of its old competitors zoomed past it.

What IBM excelled at more often was marketing a version of its aspirational self. Its consultants would advise urban planners on how to create “smart cities.” Its command of artificial intelligence, packaged into a software offering whose name evoked its founding family, would cure cancer. Its CEO would wow the Davos set with cleverly articulated visions of how corporations could help fix the ills of society.

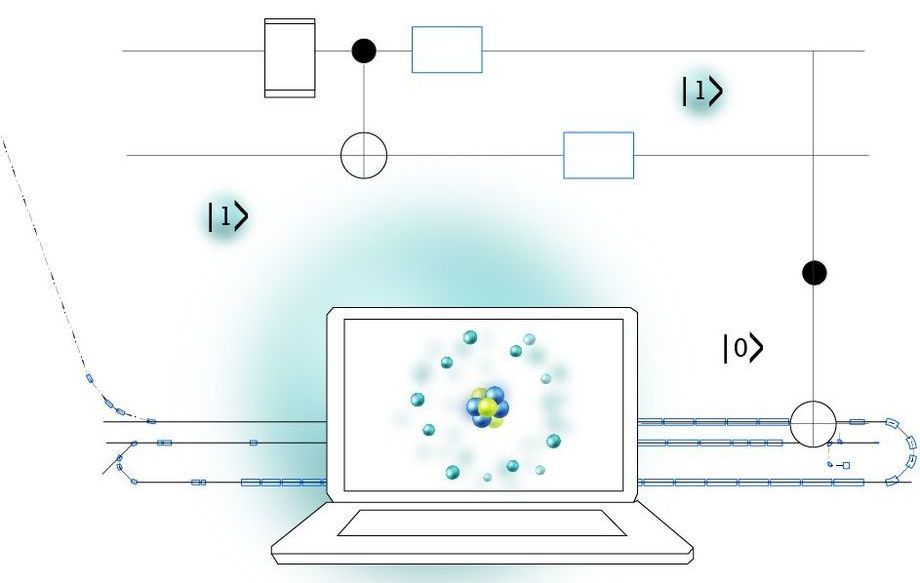

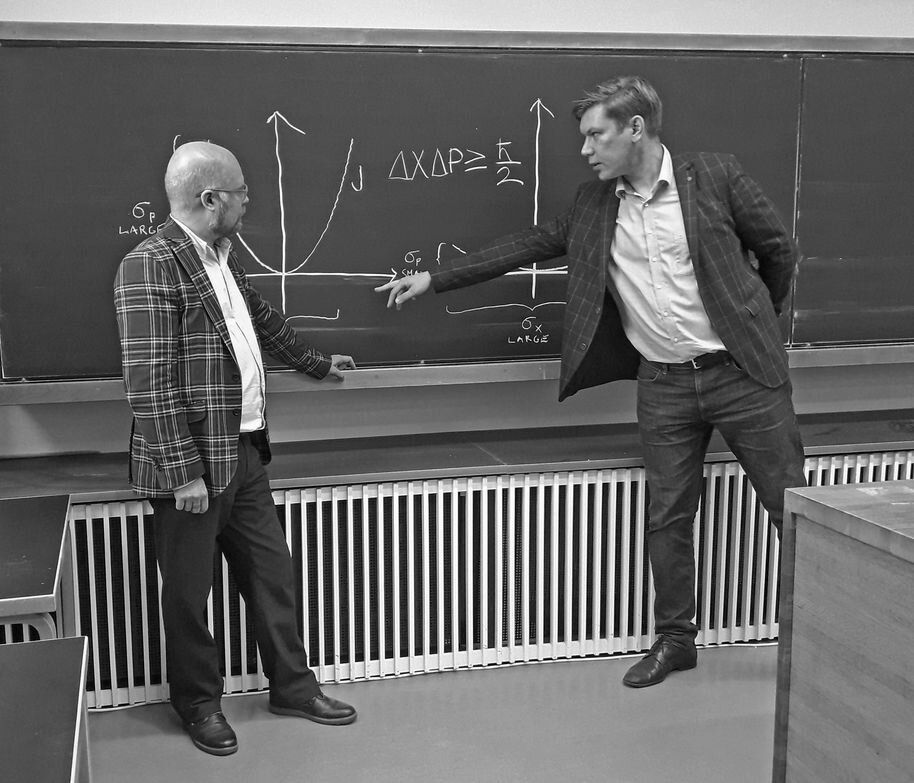

What IBM did not do was grow, or participate sufficiently in the biggest trend in business-focused IT: cloud computing. Now, in the words of veteran tech analyst Toni Sacconaghi of research shop Bernstein, new IBM CEO Arvind Krishna is pursuing a strategy of “growth through subtraction.” The company is spinning off its IT outsourcing business, a low-growth, low-margin portion of its services business that rings up $19 billion in annual sales. Krishna told Aaron and Fortune writer Jonathan Vanian that he plans to bulk back up after the spinoff via acquisitions. “We’re open for business,” he said.

The move is bold, if risky. The reason it took so long, and presumably a new leader, to jettison the outsourcing business is that it was meant to drive sales of IBM hardware and other services. But Krishna, promoted for his association with IBM’s nascent cloud-computing effort (just as Microsoft’s Satya Nadella ran his company’s cloud arm before taking the top job), recognizes that only by discarding a moribund business can IBM focus and invest properly in the one that matters.

IBM CEO Arvind Krishna is spinning off IBM’s IT outsourcing services unit to focus on cloud and quantum computing.