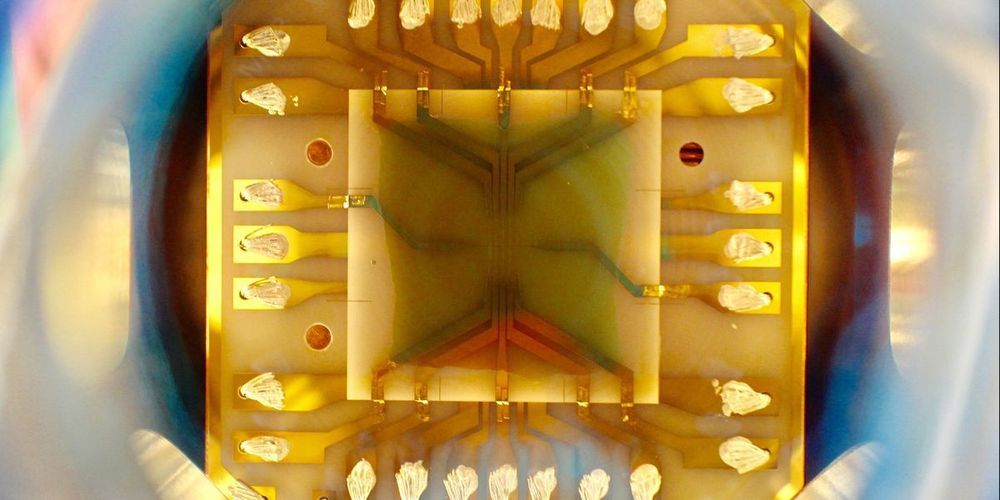

Researchers from Sorbonne University in Paris have achieved a highly efficient transfer of quantum entanglement into and out of two quantum memory devices. This achievement provides a key ingredient for scaling up a future quantum internet.

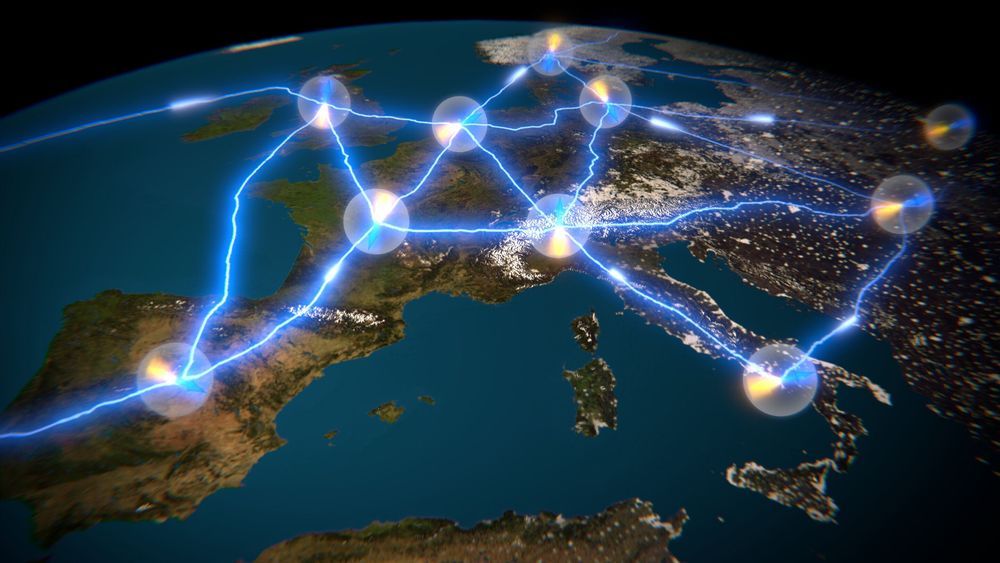

A quantum internet that connects multiple locations is a key step in quantum technology roadmaps worldwide. In this context, the European Quantum Flagship Programme launched the Quantum Internet Alliance in 2018. This consortium, coordinated by Stephanie Wehner (QuTech-Delft), consists of 12 leading research groups at universities in eight European countries, working in close cooperation with over 20 companies and institutes. They combined their resources and areas of expertise to develop a blueprint for a future quantum internet and the technologies it requires.

A quantum internet uses an intriguing quantum phenomenon to connect the nodes of a network. In a normal network connection, nodes exchange information by sending electrons or photons back and forth, which leaves the transmitted signals vulnerable to eavesdropping. In a quantum network, the nodes are connected by entanglement, Einstein’s famous “spooky action at a distance.” These non-classical correlations at large distances would allow not only secure communications beyond direct transmission but also distributed quantum computing and enhanced sensing.