Circa 2020

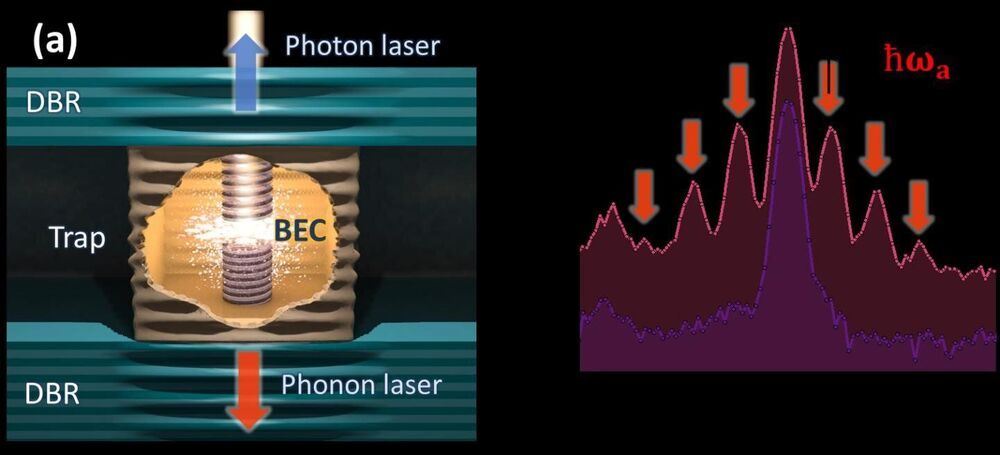

Lasing, the emission of a collimated beam of light with a well-defined wavelength (color) and phase, results from a self-organization process in which a collection of emission centers synchronizes itself to produce identical light particles (photons). A similar self-organized synchronization can also generate coherent vibrations, yielding a phonon laser, where phonons are, by analogy with photons, the quantum particles of sound.

Photon lasing was first demonstrated approximately 60 years ago, more than four decades after Albert Einstein predicted stimulated emission. Light amplification by stimulated emission has since found a vast range of scientific and technological applications across many fields.

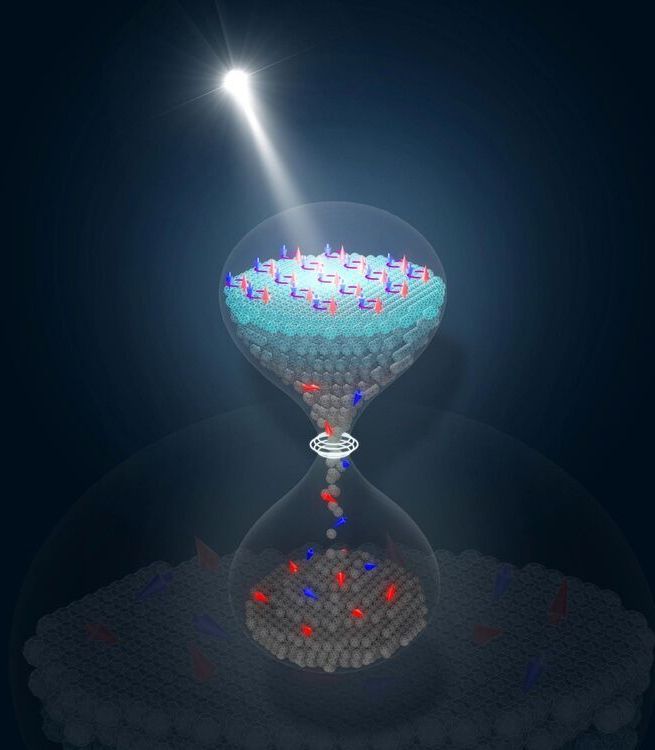

Although the concept of a “laser of sound” was predicted at almost the same time, only a few implementations have been reported so far, and none has reached technological maturity. Now, a collaboration between researchers from Instituto Balseiro and Centro Atómico in Bariloche (Argentina) and Paul-Drude-Institut in Berlin has introduced a novel approach for the efficient generation of coherent vibrations in the tens of GHz range using semiconductor structures. Interestingly, this approach builds on another of Einstein’s predictions: the Bose-Einstein condensate (BEC), often called the fifth state of matter, formed here by coupled light-matter particles (polaritons).