Single-purpose quantum computers are helping physicists build simulations of nature’s greatest hits and observe them up close.

Quantum Vacuum Plasma Thruster

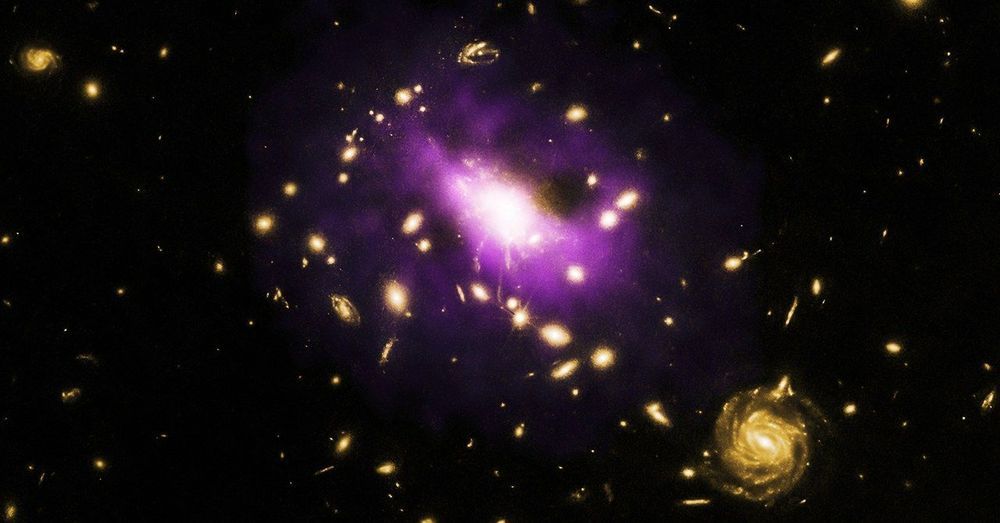

White shows me into the facility and ushers me past its central feature, something he calls a quantum vacuum plasma thruster (QVPT). The device looks like a large red velvet doughnut with wires tightly wound around a core, and it’s one of two initiatives Eagleworks is pursuing, along with warp drive. It’s also secret. When I ask about it, White tells me he can’t disclose anything other than that the technology is further along than warp drive … Yet when I ask how it would create the negative energy necessary to warp space-time, he becomes evasive.

Molecule Rules

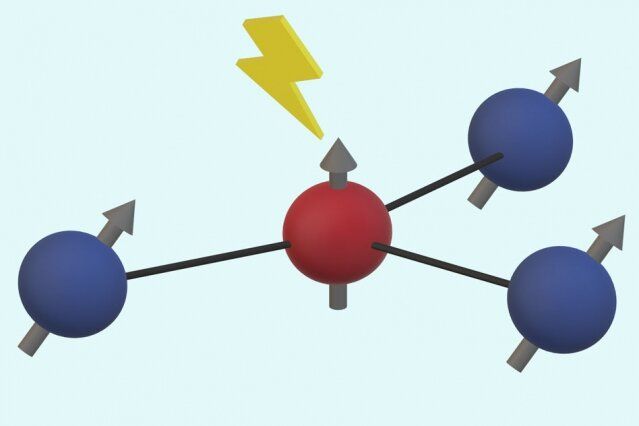

The team even managed to observe two of three atoms collide to form a molecule — a process that has never been observed on this scale before. They were surprised at how long it took compared to previous experiments and calculations.

“By working at this molecular level, we now know more about how atoms collide and react with one another,” lead author and postdoc researcher Marvin Weyland said in a statement. “With development, this technique could provide a way to build and control single molecules of particular chemicals.”

Circa 2016

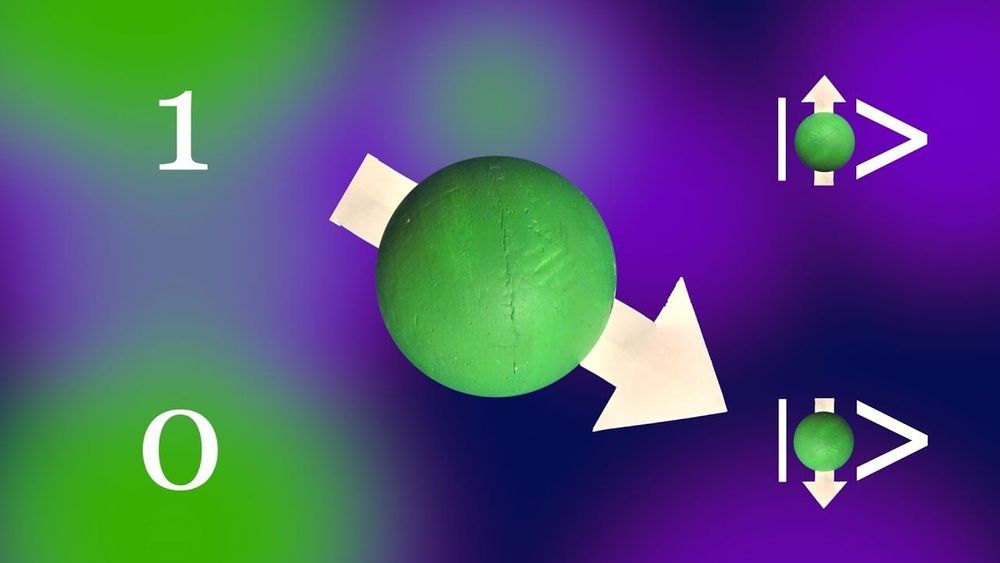

MIT scientists have developed a 5-atom quantum computer, one that could eventually render traditional encryption obsolete.

In my previous post “Cryonics for uploaders: WTF is consciousness?” I didn’t elaborate on the spiritual implications of emerging theories of consciousness and reality. Here’s a unified theory of consciousness, physics, Deity, reincarnation, afterlife, eschatology, and theo/technological resurrection.

By definition, posthumanism (I choose to call it ‘cyberhumanism’) is set to replace transhumanism at center stage circa 2035. By then, mind uploading could become a reality with gradual neuronal replacement, rapid advancements in Strong AI, massively parallel computing, and nanotechnology allowing us to directly connect our brains to the Cloud-based infrastructure of the Global Brain. Via interaction with our AI assistants, the GB will know us better than we know ourselves in all respects, so mind-transfer, or rather “mind migration,” for billions of enhanced humans could be seamless by mid-century.

I hear this mantra over and over again — we don’t know what consciousness is. Clearly, there’s no consensus here, but in the context of the topic discussed, I would summarize my views as follows: Consciousness is non-local and quantum computational by nature. There’s only one Universal Consciousness. We individualize our conscious awareness through the filter of our nervous system, our “local” mind, our very inner subjectivity, but consciousness itself, the self in a big sense, our “core” self, is universal, and knowing it through experience has been called enlightenment, illumination, awakening, or transcendence through the ages.

Any container with a sufficiently integrated network of information patterns, with a certain optimal complexity, especially complex dynamical systems with biological or artificial brains (say, the coming AGIs), could be filled with consciousness at large in order to host an individual “reality cell,” “unit,” or “node” of consciousness. This kind of individuated unit of consciousness is always endowed with free will within the constraints of the applicable set of rules (“physical laws”), influenced by the larger consciousness system dynamics. Isn’t it too naïve to presume that Universal Consciousness would instantiate phenomenality only in the form of “bio”-logical avatars?

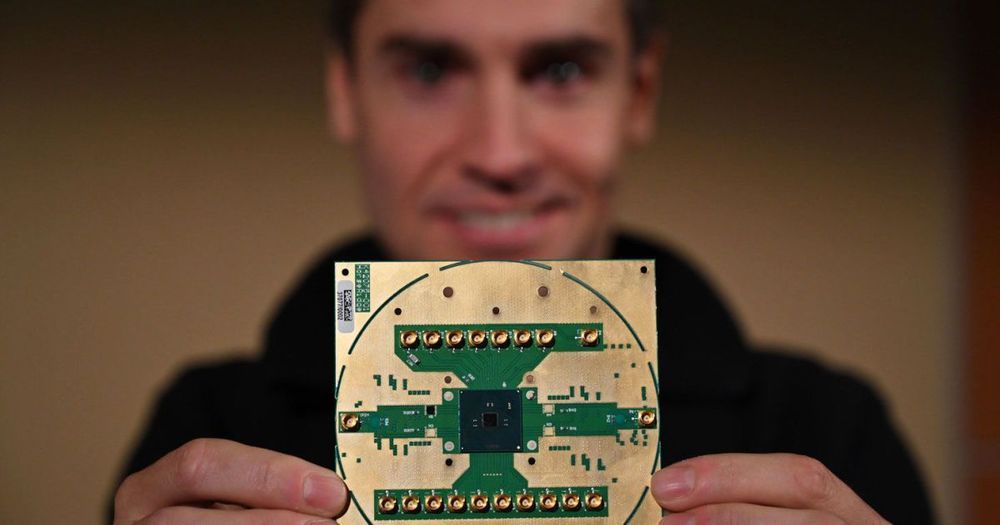

Labs around the world are racing to develop new computing and sensing devices that operate on the principles of quantum mechanics and could offer dramatic advantages over their classical counterparts. But these technologies still face several challenges, and one of the most significant is how to deal with “noise”—random fluctuations that can eradicate the data stored in such devices.

A new approach developed by researchers at MIT could provide a significant step forward in quantum error correction. The method involves fine-tuning the system to address the kinds of noise that are the most likely, rather than casting a broad net to try to catch all possible sources of disturbance.

The analysis is described in the journal Physical Review Letters, in a paper by MIT graduate student David Layden, postdoc Mo Chen, and professor of nuclear science and engineering Paola Cappellaro.