Here is Christof Koch’s talk on studying consciousness in the mouse, shown during last week’s annual meeting of the Japan Neuroscience Society.

Grace LeClair had just finished eating dinner with friends when she got the phone call every parent dreads. The chaplain at the Medical College of Virginia was on the other end. “Your daughter has been in a serious accident. You should come to Richmond right away.” LeClair was in Virginia Beach at the time, a two-hour drive from 20-year-old Bess-Lyn, who was now lying in a coma in a Richmond hospital bed.

The friend who was with Bess-Lyn has since filled in the details of that day in March. The two women were bicycling down a steep hill, headed toward a busy intersection, when Bess-Lyn yelled that her brakes weren’t working and she couldn’t slow down. Her friend screamed for her to turn into an alley just before the intersection. But Bess-Lyn didn’t turn sharply enough and crashed, headfirst, into a concrete wall. She wasn’t wearing a helmet. By the time the ambulance reached the hospital, Bess-Lyn was officially counted among the 1.5 million Americans who will suffer a traumatic brain injury (TBI) this year.

Bess-Lyn’s mom was halfway to Richmond when she received a second call, this time from a doctor. “He was telling me that she had a very serious injury, that she had to have surgery to save her life and that if I would give permission, they would use this experimental, not-approved-by-the-FDA drug,” Grace LeClair recalls. “He said that it would increase the oxygen supply to her brain. To me that only made sense, so I said yes.”

Horizon Robotics, led by Yu Kai, Baidu’s former head of deep learning, is developing AI chips and software that mimic how the human brain handles abstract tasks such as voice and image recognition. The company believes this approach will provide more consistent and reliable service than cloud-based systems.

The goal is to enable fast, intelligent responses to user commands, without an internet connection, to control appliances, cars and other objects. Health applications are a logical next step, although the company has not yet discussed them.

Wearable Tech + Digital Health San Francisco – April 5, 2016 @ the Mission Bay Conference Center.

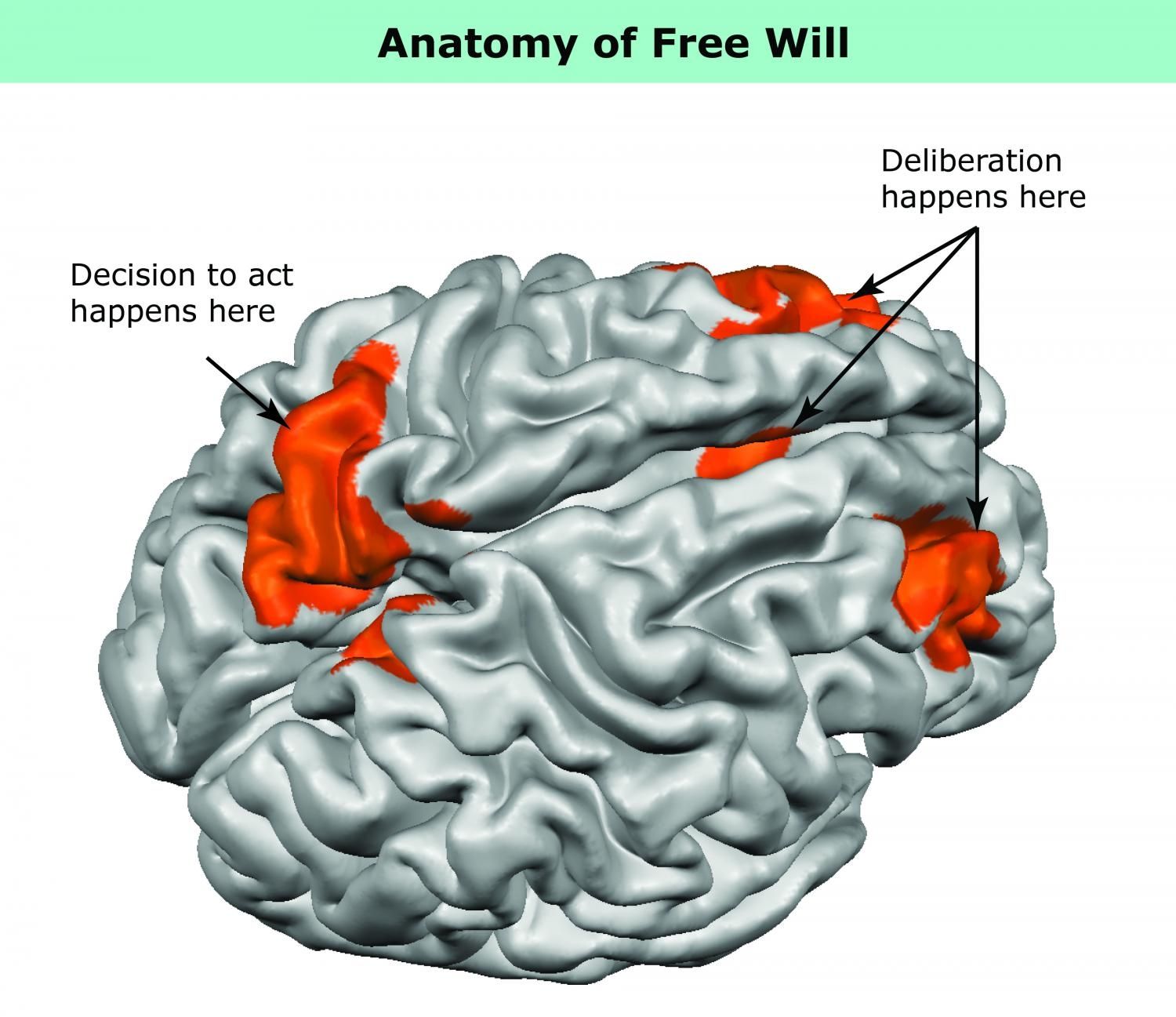

Johns Hopkins University researchers are the first to glimpse the human brain making a purely voluntary decision to act.

Unlike most brain studies, in which scientists watch as people respond to cues or commands, the Johns Hopkins researchers found a way to observe people’s brain activity as they made choices entirely on their own. The findings, which pinpoint the parts of the brain involved in decision-making and action, are now online and will appear in a special October issue of the journal Attention, Perception, & Psychophysics.

“How do we peek into people’s brains and find out how we make choices entirely on our own?” asked Susan Courtney, a professor of psychological and brain sciences. “What parts of the brain are involved in free choice?”

I like this feature on quantum computing (QC).

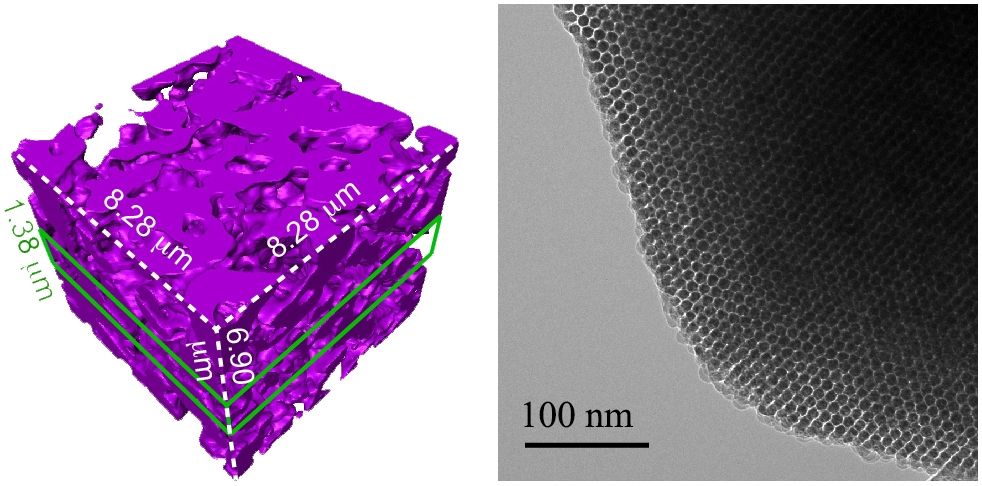

If you have trouble wrapping your mind around quantum physics, don’t worry: it’s hard even for supercomputers. The solution, according to researchers from Google, Harvard, Lawrence Berkeley National Laboratory and others? Why, use a quantum computer, of course. The team accurately predicted chemical reaction rates using a supercooled quantum circuit, a result that could lead to improved solar cells, batteries, flexible electronics and much more.

Chemical reactions are themselves inherently quantum; the team even quoted Richard Feynman: “nature isn’t classical, dammit.” The problem is that “molecular systems form highly entangled quantum superposition states, which require many classical computing resources in order to represent sufficiently high precision,” according to the Google Research blog. For instance, computing the lowest energy state of propane, a relatively simple molecule, takes around ten days, and that figure is needed to calculate the reaction rate.

That’s where the “Xmon” supercooled qubit quantum computing circuit (shown above) comes in. The algorithm it runs, known as a “variational quantum eigensolver” (VQE), is the quantum equivalent of a classical neural network. The difference is that you train a classical neural network (like Google’s DeepMind AI) to model classical data, whereas you train the VQE to model quantum data. “The quantum advantage of VQE is that quantum bits can efficiently represent the molecular wave function, whereas exponentially many classical bits would be required.”
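To make the “train it like a neural network” analogy concrete, here is a minimal toy sketch of the VQE loop, simulated classically on a single qubit. It is not the Google team’s code or their molecular Hamiltonian: the Hamiltonian `H = A*Z + B*X` and its coefficients are hypothetical, the one-parameter `Ry(theta)` ansatz is an assumption, and gradient descent with the parameter-shift rule is a common textbook optimizer swapped in for illustration. The idea it shows is that a parameterized quantum state is tuned by a classical optimizer until its measured energy reaches the lowest value, which approximates the ground-state energy.

```python
import math

# Hypothetical toy Hamiltonian H = A*Z + B*X on one qubit
# (illustrative coefficients, not a real molecular Hamiltonian).
A, B = 0.5, -0.8


def ansatz_state(theta):
    """Real amplitudes of Ry(theta)|0> = [cos(theta/2), sin(theta/2)]."""
    return math.cos(theta / 2), math.sin(theta / 2)


def energy(theta):
    """Expectation value <psi(theta)|H|psi(theta)>.

    On quantum hardware this would be estimated from repeated measurements;
    in this toy sketch it is computed exactly from the state vector.
    """
    c, s = ansatz_state(theta)
    exp_z = c * c - s * s  # <Z> = |amp0|^2 - |amp1|^2
    exp_x = 2 * c * s      # <X> = 2*amp0*amp1 for real amplitudes
    return A * exp_z + B * exp_x


def parameter_shift_grad(theta):
    """Exact gradient of energy() via the parameter-shift rule for a Pauli rotation."""
    return (energy(theta + math.pi / 2) - energy(theta - math.pi / 2)) / 2


def vqe(theta=0.1, lr=0.2, steps=500):
    """Classical outer loop: gradient descent on the single variational parameter."""
    for _ in range(steps):
        theta -= lr * parameter_shift_grad(theta)
    return theta, energy(theta)


if __name__ == "__main__":
    theta_opt, e_opt = vqe()
    exact = -math.sqrt(A * A + B * B)  # lowest eigenvalue of A*Z + B*X
    print(f"VQE energy:   {e_opt:.6f}")
    print(f"Exact ground: {exact:.6f}")
```

For this toy case the exact answer is known in closed form (the lowest eigenvalue of `A*Z + B*X` is `-sqrt(A**2 + B**2)`), so the two printed numbers can be compared directly. In a real molecular calculation that comparison is exactly what becomes too expensive classically, which is the point of running the quantum half of the loop on hardware.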

Although brain-machine interfaces (BMI) are nothing new, I never get tired of highlighting them.

Now a research group has come up with a way for one person to control multiple robots.

In this system, a single controller watches the drones while a computer reads his brain activity.

The controller wears a skullcap fitted with 128 electrodes wired to a computer, which records his electrical brain activity. When the controller moves a hand or thinks of something, activity increases in specific brain areas.

In the campy 1966 science fiction movie “Fantastic Voyage,” scientists miniaturize a submarine with themselves inside and travel through the body of a colleague to break up a potentially fatal blood clot. Right. Micro-humans aside, imagine the inflammation that metal sub would cause.

Ideally, injectable or implantable medical devices should not only be small and electrically functional, they should be soft, like the body tissues with which they interact. Scientists from two UChicago labs set out to see if they could design a material with all three of those properties.

The material they came up with, described in a paper published online June 27, 2016, in Nature Materials, forms the basis of an ingenious light-activated injectable device that could eventually be used to stimulate nerve cells and manipulate the behavior of muscles and organs.