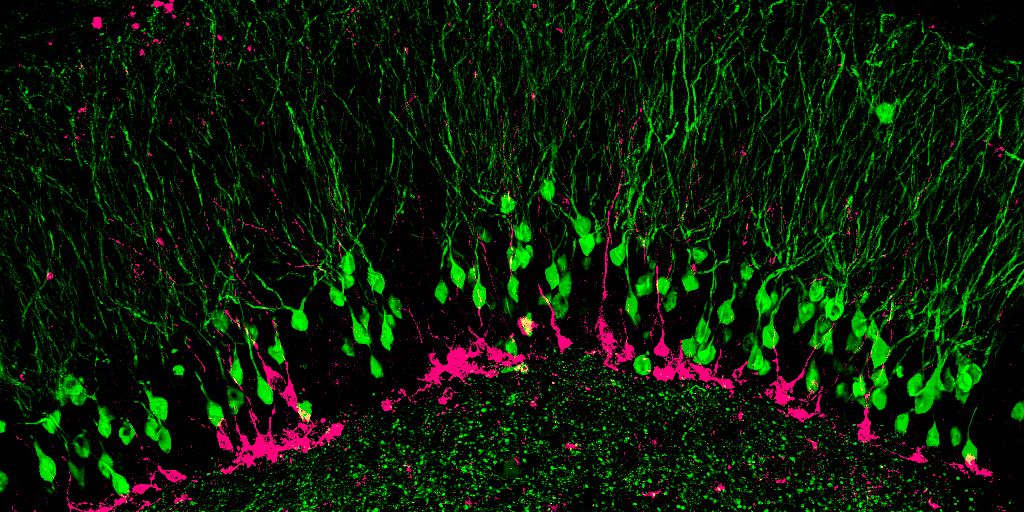

The brain is the most complex biological computing system, and it performs almost every activity with remarkable speed and precision. Despite numerous advances in science and technology, no machine yet works as swiftly as the brain. Nevertheless, a recent experiment by researchers at the Okinawa Institute of Science and Technology Graduate University in Japan and Forschungszentrum Jülich in Germany marks a milestone in the computer simulation of the human brain.

The team of researchers from Japan and Germany has produced the most accurate simulation of the human brain to date on a Japanese supercomputer. Reproducing a single second of activity from just one percent of this complex organ took the machine, at the time the world's fourth most powerful computer, 40 minutes.

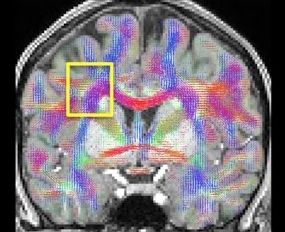

The machine used to simulate the brain activity was Japan's K computer. Although it has 705,024 processor cores and 1.4 million GB of RAM, it still took 40 minutes to crunch the data for just one second of brain activity. The researchers used the open-source Neural Simulation Technology (NEST) tool to replicate a network of 1.73 billion nerve cells connected by 10.4 trillion synapses.
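The numbers above support some simple back-of-the-envelope arithmetic. The short Python sketch below derives a few ratios from the figures quoted in this article. It assumes 1 GB = 10^9 bytes, and its memory-per-synapse value is only an upper bound computed as if all of the machine's RAM held synapses, since the article does not say how much of the K computer the run actually used.

```python
# Back-of-the-envelope ratios from the figures quoted in the article.
# Assumption: 1 GB = 1e9 bytes (decimal). The bytes-per-synapse value is an
# upper bound that pretends the whole machine's RAM was spent on synapses.

NEURONS = 1.73e9        # simulated nerve cells
SYNAPSES = 10.4e12      # simulated synapses
RAM_GB = 1.4e6          # total RAM of the K computer, in GB
WALL_CLOCK_S = 40 * 60  # 40 minutes of computing time, in seconds
BIO_TIME_S = 1.0        # one second of simulated brain activity


def derived_figures() -> dict:
    """Return simple ratios derived from the article's numbers."""
    return {
        # How many seconds of computing each simulated second cost.
        "slowdown": WALL_CLOCK_S / BIO_TIME_S,
        # Average number of synapses attached to each simulated neuron.
        "synapses_per_neuron": SYNAPSES / NEURONS,
        # Upper bound on RAM available per synapse (assumes all RAM used).
        "bytes_per_synapse": RAM_GB * 1e9 / SYNAPSES,
    }


if __name__ == "__main__":
    figs = derived_figures()
    print(f"slowdown: {figs['slowdown']:.0f}x")
    print(f"synapses per neuron: {figs['synapses_per_neuron']:.0f}")
    print(f"bytes of RAM per synapse (upper bound): {figs['bytes_per_synapse']:.0f}")
```

Forty minutes of computing for one second of activity is a slowdown of 2,400 times, and each simulated neuron carries on average roughly 6,000 synapses.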