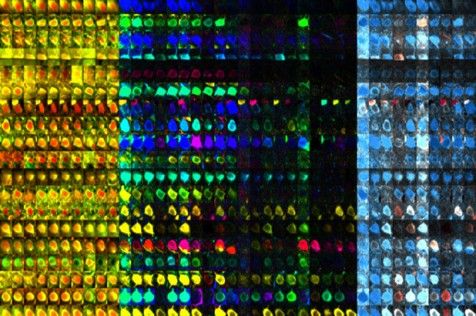

By monitoring the mice's sleep patterns, the researchers identified an increase in the duration and continuity of REM sleep and in specific brain oscillations characteristic of REM sleep, whereas ‘deep’ sleep, or non-REM sleep, did not change. The changes in REM sleep were closely linked to deficiencies in the regulation of the stress hormone corticosterone. Mild stress also altered gene expression in the brain.

The first and most distinct consequence of daily mild stress is an increase in rapid-eye-movement (REM) sleep, according to a new study in the journal PNAS. The research also showed that this increase is associated with changes in the expression of genes involved in cell death and survival.

REM sleep, also known as paradoxical sleep, is the sleep state in which most of our dreams occur, and it is involved in regulating emotions and consolidating memories. REM sleep disturbances are common in mood disorders such as depression. However, little was known about how these sleep changes are linked to molecular changes in the brain.

During this 9-week study, conducted by researchers from the Surrey Sleep Research Centre at the University of Surrey in collaboration with Eli Lilly, mice were intermittently exposed to a variety of mild stressors, such as the odour of a predator. Mice exposed to these stressors developed signs of depression: they engaged less in self-care activities, were less likely to take part in pleasurable activities such as eating appetising food, and became less social and less interested in mice they had not encountered before.