At ease on unsteady seas.

If Santosh Ostwal and his wife, Rajashree Ostwal, were to calculate, in terms of money, the electricity units saved and the water conserved thanks to their invention, it would be enough to set up a small power plant or build a small dam. The end to a farmer’s daily drudgery and sleepless nights, though, is hard to convert into currency. But then money is something the Ostwal couple have not seen much of in their long journey of taking technology to the farms.

Long before information and communication technology (ICT) for agriculture and rural development became buzzwords and ‘e’ got hyphenated to everything, the Ostwals, both engineers, ventured into wireless irrigation and mobile-to-mobile (M2M) communication systems for agriculture. Their innovation, a water pump controlled remotely by mobile phone combined with some clever electronics, has made the lives of farmers easier.

The Ostwals’ invention has impacted the lives of four lakh farmers, with 50,000 installations in the last 12 years. A smart and affordable device, Nano Ganesh, saves farmers from making treacherous trips in pitch dark to their farms at midnight to access their water pumps and operate them, a daily reality, especially with erratic power supply. When a tired farmer fails to go out and switch off the water pump, water and electricity are wasted. In addition, the excess water damages the soil and crop, hurting farmers further. As if that were not enough, there is the theft of water pumps and cables to be dealt with. These are the problems that the couple set out to solve.

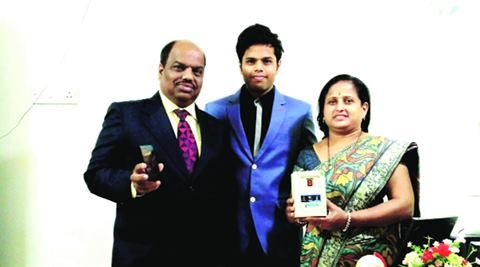

This kit by Royal College of Art graduate Heeju Kim uses sweets to recreate the tongue-tying experience of living with autism. Kim created three tools and a mobile application as part of the project, which is titled An Empathy Bridge for Autism.

A set of six awkwardly shaped lollipops and candies impede tongue movement in various ways. They make it hard for users to hold a conversation, conveying how unclear pronunciation has an impact on autistic individuals.

An augmented-reality headset is worn over the eyes and connects to a smartphone to alter the user’s perception of what’s in front of them. It restricts the view of their periphery, gives them double vision or obscures their focus with a patch of black.

In 1965, Intel co-founder Gordon Moore published a remarkably prescient paper which observed that the number of transistors on an integrated circuit was doubling roughly every year (a rate he revised in 1975 to every two years) and predicted that this pace would lead to computers becoming embedded in homes, cars and communication systems.

That simple idea, known today as Moore’s Law, has helped power the digital revolution. As computing performance has become exponentially cheaper and more robust, we have been able to do a lot more with it. Even a basic smartphone today is more powerful than the supercomputers of past generations.

Yet the law has been fraying for years, and experts predict that it will soon reach its limits. However, I spoke to Bernie Meyerson, IBM’s Chief Innovation Officer, and he feels strongly that the end of Moore’s Law doesn’t mean the end of progress. Not by a long shot. What we’ll see, though, is a shift in emphasis from the microchip to the system as a whole.

In Brief

Foxconn Electronics, the Taiwanese manufacturing company behind some of the biggest electronic brands’ devices, including Apple’s iPhone, has announced that it will ramp up automation processes at its Chinese factories. The goal is to eventually achieve full automation.

In an article published in Digitimes, General Manager Dai Jia-peng of Foxconn’s Automation Technology Development Committee explains that the process will unfold in three phases.

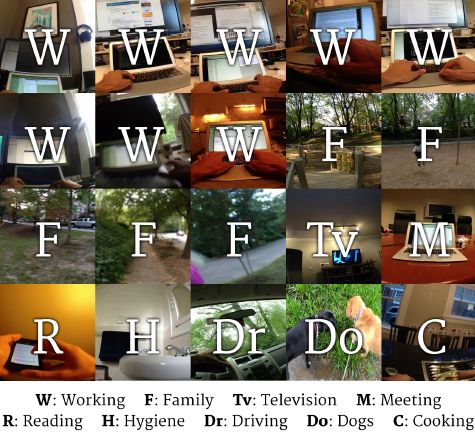

Apple’s first paper on artificial intelligence, published Dec. 22 on arXiv (open access), describes a method for improving the ability of a deep neural network to recognize images.

To train neural networks to recognize images, AI researchers have typically labeled (identified or described) each image in a dataset. For example, last year, Georgia Institute of Technology researchers developed a deep-learning method to recognize images taken at regular intervals on a person’s wearable smartphone camera.

Well before the family came in to the Batson Children’s Specialty Clinic in Jackson, Mississippi, they knew something was wrong. Their child was born with multiple birth defects, and didn’t look like any of its kin. A couple of tests for genetic syndromes came back negative, but Omar Abdul-Rahman, Chief of Medical Genetics at the University of Mississippi, had a strong hunch that the child had Mowat-Wilson syndrome, a rare disease associated with challenging life-long symptoms like speech impediments and seizures.

So he pulled out one of his most prized physicians’ tools: his cell phone.

Using an app called Face2Gene, Abdul-Rahman snapped a quick photo of the child’s face. Within a matter of seconds, the app generated a list of potential diagnoses — and corroborated his hunch. “Sure enough, Mowat-Wilson syndrome came up on the list,” Abdul-Rahman recalls.

A new smartphone add-on has been demonstrated to detect cancer with 99% accuracy in the lab. The breakthrough could have significant implications for diagnostic capabilities in remote areas or wherever medical services are limited.

Very cool; I look forward to seeing where we land in the next 5 years on mobile imaging systems.

Years ago, I developed software for a mobile blood gas analyzer to help researchers and doctors in some of the world’s most remote locations. The technology did improve survival rates for many people back then. I see advances like this one doing just as much for the many who do not have access to, or the luxury of, centralized labs, hospitals, etc.

Democratizing Cellular Time-Lapses with a Cell-Phone!

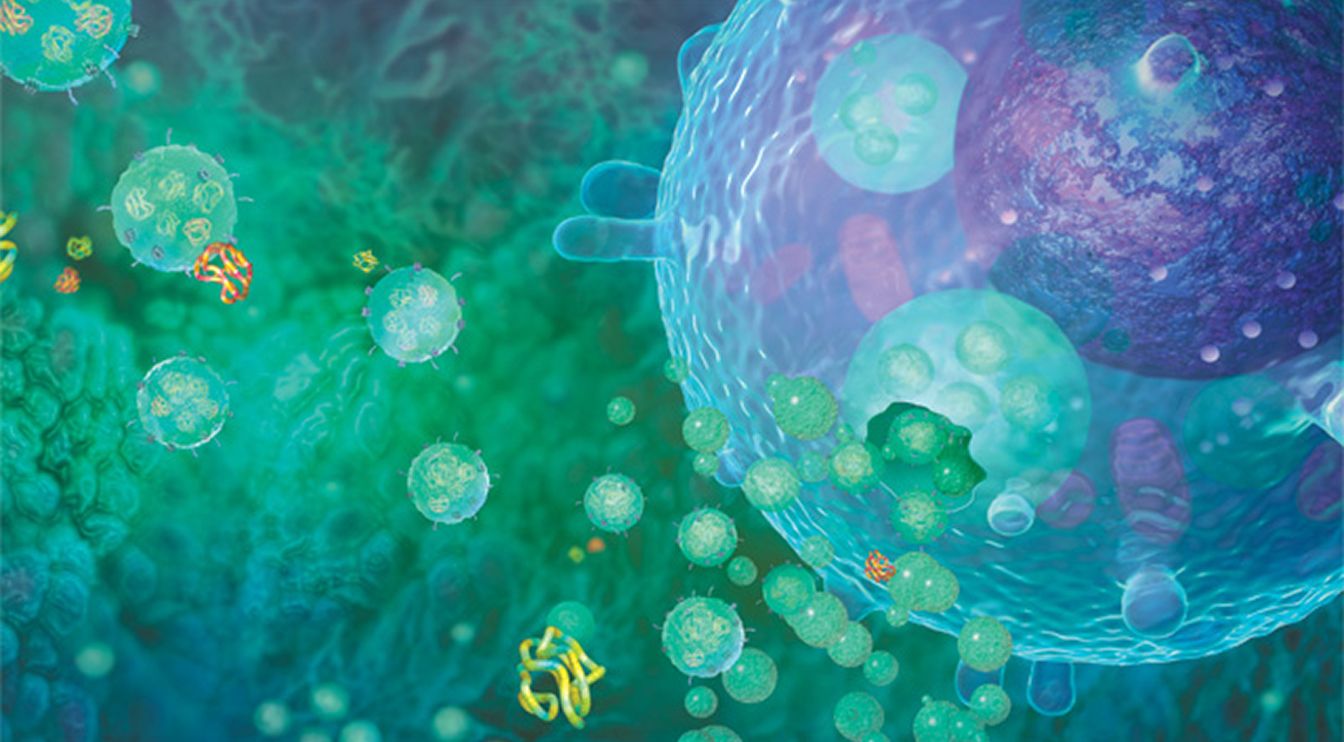

A group of researchers from Uppsala University has recently developed an affordable system capable of capturing time-lapse videos of living cells under various conditions. Dubbed the affordable time-lapse imaging and incubation system (ATLIS), it can be constructed out of off-the-shelf electronic components and 3D-printed parts while using a standard smartphone for imaging.

While other smartphone adapters for microscopes already make image capture easy, the ATLIS is much more than an adapter. It is optimised to convert the old microscopes found in abundance in universities and hospitals into full-fledged time-lapse systems for imaging cell dynamics. Such a system requires strict environmental control of temperature, pH, osmolarity and light exposure in order to maintain normal cell behaviour.