The Big Bang might never have happened, as many cosmologists have begun to question the origin of the Universe. The Big Bang is a point in time defined by a mathematical extrapolation: the Big Bang theory tells us only that something must have changed around 13.8 billion years ago. According to the Eternal Inflation model, there was never a single “point” where the Big Bang took place; space was always an extended volume. From the alternative standpoint of Digital Physics, it must have been a Digital Big Bang with the lowest possible entropy in the Universe, 1 bit of information, a single coordinate in the vast information matrix. If you were to ask what happened before the first observer and in the first moments after the Big Bang, the answer might surprise you with its simplicity: we extrapolate backwards in time, and that virtual model becomes “real” in our minds, as if we were witnessing the birth of the Universe.

In his theoretical work, Andrew Strominger of Harvard University speculates that the Alpha Point (the Big Bang) and the Omega Point form the so-called ‘Causal Diamond’ of the conscious observer, in which the Alpha Point holds only 1 bit of entropy, as opposed to the maximal entropy, an enormous number of bits, at the Omega Point. Suggesting that we are part of a conscious Universe and that time is holographic in nature, Strominger places the origin of the Universe in the infinite, ultra-intelligent future, the Omega Singularity, rather than at the Big Bang.

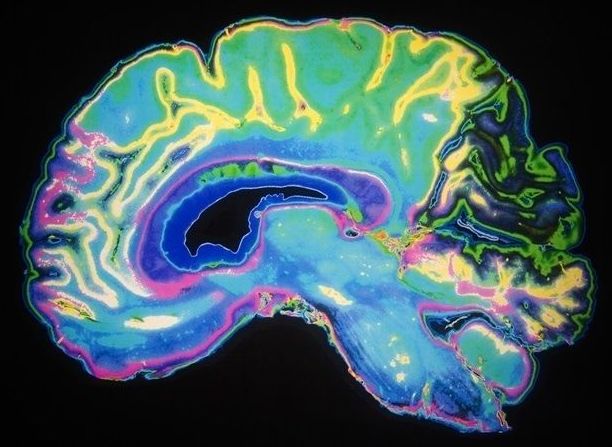

The Universe is not what textbook physics tells us it is; that is simply how we perceive it, since our instruments and measuring devices are, after all, extensions of our senses. Reality is not what it seems. Deep down, it is pure information, waves of potentiality, with consciousness orchestrating it all. The Big Bang theory, which has drawn considerable criticism of late, starts from the assumption of a “Universe from nothing” (a proverbial miracle that scientists have christened a ‘quantum fluctuation’), or the initial Cosmological Singularity. But setting this highly improbable happenstance aside, we can just as well work from a different set of assumptions and place the initial Cosmological Singularity at the Omega Point: the transcendental attractor, the Source, the omniversal holographic projector of all possible timelines.